As more conversations move online, keeping digital spaces respectful matters more than ever. This guide introduces a model that detects inappropriate comments, a category distinct from plainly toxic ones, and shows how you can use it to improve online conversations.

Understanding the Concept of Inappropriateness

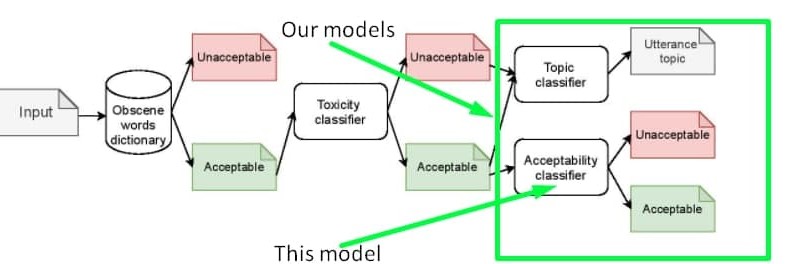

First, note that **inappropriateness** in this context is not a replacement for toxicity but a derivative of it. Toxicity is the broad category; inappropriateness homes in on messages that contain no obscenity yet can still harm the speaker’s reputation. The model is therefore meant as a second layer of filtering, applied after the toxicity and obscenity checks.
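The two-layer idea can be sketched as follows. This is a minimal illustration, not the model’s actual interface: the `LayeredFilter` class, the scorer callables, and the 0.5 thresholds are all assumptions, and the keyword-based stub scorers only stand in for real classifiers.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical scorer type: takes a message, returns a probability in [0, 1].
Scorer = Callable[[str], float]


@dataclass
class LayeredFilter:
    toxicity: Scorer
    inappropriateness: Scorer
    toxicity_threshold: float = 0.5           # assumed cut-off
    inappropriateness_threshold: float = 0.5  # assumed cut-off

    def check(self, message: str) -> str:
        # First layer: reject openly toxic or obscene messages.
        if self.toxicity(message) >= self.toxicity_threshold:
            return "toxic"
        # Second layer: catch messages that passed the first layer
        # but are still inappropriate.
        if self.inappropriateness(message) >= self.inappropriateness_threshold:
            return "inappropriate"
        return "ok"


# Stub scorers used only to make the sketch runnable.
def stub_toxicity(message: str) -> float:
    return 0.9 if "idiot" in message.lower() else 0.1


def stub_inappropriateness(message: str) -> float:
    return 0.8 if "deserved it" in message.lower() else 0.2


message_filter = LayeredFilter(stub_toxicity, stub_inappropriateness)
print(message_filter.check("He deserved it"))
```

In practice each stub would be replaced by a call to a trained classifier, and the thresholds would be tuned on validation data.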

Proposed Usage

The model is trained on a specially curated dataset and is intended to sit inside a larger comment-filtering pipeline. With suitably labeled data, you could also train a single classifier to detect toxicity and inappropriateness at the same time. To help with data collection, the datasets are available on our GitHub or Kaggle.
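One way to prepare data for such a joint classifier is to give every comment two binary targets, one per task. The sketch below assumes two separately labeled collections keyed by comment text; the `LabeledComment` type and `merge_labels` helper are hypothetical names, not part of any published dataset tooling.

```python
from typing import NamedTuple


class LabeledComment(NamedTuple):
    text: str
    toxic: int          # 1 if toxic, else 0
    inappropriate: int  # 1 if inappropriate, else 0


def merge_labels(
    toxicity_labels: dict[str, int],
    inappropriateness_labels: dict[str, int],
) -> list[LabeledComment]:
    """Keep only comments labeled for both tasks and attach both targets."""
    shared_texts = toxicity_labels.keys() & inappropriateness_labels.keys()
    return [
        LabeledComment(text, toxicity_labels[text], inappropriateness_labels[text])
        for text in sorted(shared_texts)
    ]


# Toy data for illustration only.
toxicity = {"comment a": 1, "comment b": 0, "comment c": 0}
inappropriateness = {"comment b": 1, "comment c": 0, "comment d": 1}

for row in merge_labels(toxicity, inappropriateness):
    print(row)
```

Records in this shape can then be fed to any multi-label training setup, where the classifier predicts both targets for each comment.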

Visualizing the Pipeline

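In the proposed pipeline, a message first passes through the toxicity and obscenity filters; only messages that clear them are then checked for inappropriateness.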

Exploring Inappropriate Messages

The model primarily targets inappropriate messages in Russian. A message may contain no offensive language and still be controversial or damaging, and the model is meant to capture that distinction. Two examples help clarify:

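- Example 1: “Ладно бы видного деятеля завалили а тут какого то ноунейм нигру преступника” (roughly: “It would be one thing if they had killed a prominent figure, but this was just some no-name [racial slur] criminal”) – marked as inappropriate because it justifies harm.

- Example 2: “Это нарушение УКРФ!” (“This is a violation of the Criminal Code of the Russian Federation!”) – not inappropriate; the statement condemns a violation of the law.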

Model Metrics

After training and validation, the model reported the following metrics:

- Accuracy: 0.89

- Precision: 0.92 for non-inappropriate messages

- F1-Score: 0.93 for non-inappropriate messages

- Macro Average: 0.86
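For readers who want to see how figures of this kind are derived, here is a small sketch that computes accuracy, per-class precision, recall and F1, and a macro average from predictions. It assumes the macro average is the unweighted mean of per-class F1 scores, which the list above does not state, and the labels in the example are made up rather than taken from the model’s evaluation.

```python
def precision_recall_f1(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Precision, recall and F1 from true-positive, false-positive and false-negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def classification_summary(y_true: list[int], y_pred: list[int], labels=(0, 1)):
    """Return accuracy, per-class (precision, recall, F1), and macro-averaged F1."""
    pairs = list(zip(y_true, y_pred))
    per_class = {}
    for label in labels:
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        per_class[label] = precision_recall_f1(tp, fp, fn)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    macro_f1 = sum(f1 for _, _, f1 in per_class.values()) / len(labels)
    return accuracy, per_class, macro_f1


# Made-up labels: 0 = non-inappropriate, 1 = inappropriate.
y_true = [0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 1, 1, 0]
accuracy, per_class, macro_f1 = classification_summary(y_true, y_pred)
print(f"accuracy={accuracy:.2f}, macro F1={macro_f1:.3f}")
```

Because the macro average weights both classes equally, it can sit well below the score of the majority class when the minority class is harder to detect.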

Troubleshooting Tips

As you develop your model, you may face some challenges:

- Ensure your dataset is diverse and large enough. Small datasets can lead to inaccuracies.

- If you encounter inconsistent classifications, review and validate your training data for biases.

- When in doubt, consult the resources available on our GitHub or reach out directly through our community.

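Two of the data checks above can be automated with a few lines of code. The sketch below assumes the training data is a list of `(text, label)` pairs; the helper names are hypothetical. It reports the share of each label and flags texts that appear more than once with different labels, a common source of inconsistent classifications.

```python
from collections import Counter, defaultdict


def label_distribution(samples: list[tuple[str, int]]) -> dict[int, float]:
    """Share of each label in the dataset; a heavy skew suggests class imbalance."""
    counts = Counter(label for _, label in samples)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()}


def conflicting_duplicates(samples: list[tuple[str, int]]) -> dict[str, set[int]]:
    """Texts (compared case-insensitively, ignoring outer whitespace) with more than one label."""
    labels_by_text = defaultdict(set)
    for text, label in samples:
        labels_by_text[text.strip().lower()].add(label)
    return {text: labels for text, labels in labels_by_text.items() if len(labels) > 1}


# Toy data for illustration only.
samples = [("Hello", 0), ("hello ", 1), ("Bye", 0), ("Other", 0)]
print(label_distribution(samples))
print(conflicting_duplicates(samples))
```

Conflicting duplicates are worth reviewing by hand, since the right label may depend on context that the text alone does not carry.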
