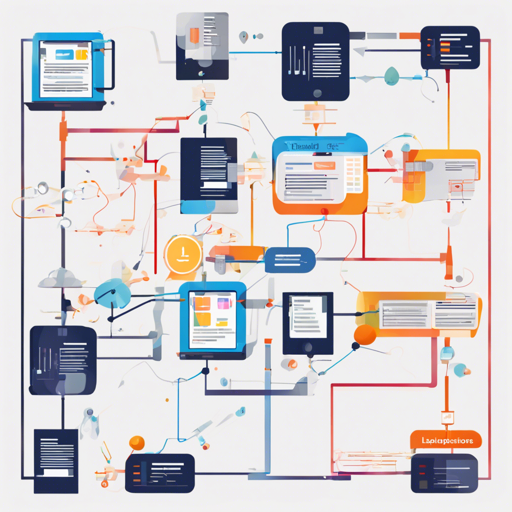

Welcome to the exciting world of LLM Flows! In this guide, we’ll walk through the process of building applications that leverage Large Language Models (LLMs). Whether you want to create a chatbot, a Q&A system, or a more complex application, LLM Flows provides a simple and transparent framework to help you achieve your goals.

What is LLM Flows?

LLM Flows is a framework designed for building applications with LLMs such as OpenAI’s models. It emphasizes simplicity, explicitness, and transparency, making it easier to create well-structured applications without hidden prompts or opaque LLM calls.

Installation

To get started with LLM Flows, you’ll first need to install it. Simply run the following command in your terminal:

```bash
pip install llmflows
```

Core Concepts

Before diving into code, let’s break down the three core concepts using an analogy. Think of LLM Flows as a recipe for a delicious dish:

- LLMs: These are your cooking ingredients. Just as you choose ingredients based on the dish you want to prepare, in LLM Flows you select the right model for your application.

- Prompt Templates: Imagine these as the cooking instructions. They guide how to mix the ingredients and in what order, ensuring your dish turns out just right.

- Flow and FlowSteps: Think of these as the different stages of cooking. First, you prepare the ingredients (input), then you cook them following the instructions (processing), and finally, you serve the dish (output).
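Without the cooking metaphor, the three pieces compose like ordinary functions: a model turns a prompt into text, a template turns inputs into a prompt, and a flow runs the stages in order. The stdlib-only sketch below illustrates that idea; `fake_llm`, `TEMPLATE`, and `run` are hypothetical names, not LLM Flows APIs, and `fake_llm` simply stands in for a real model call.

```python
from typing import Callable

# "Ingredient": any function that turns a prompt into text can play the LLM's role.
LLM = Callable[[str], str]


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call; it just upper-cases the prompt."""
    return prompt.upper()


# "Instructions": a template with a named placeholder.
TEMPLATE = "Suggest a movie title about {topic}."


def run(llm: LLM, template: str, **inputs: str) -> str:
    prompt = template.format(**inputs)  # prepare: fill the template from the input
    output = llm(prompt)                # cook: call the model
    return output.strip()               # serve: tidy up and return the result


print(run(fake_llm, TEMPLATE, topic="friendship"))
```

Swapping `fake_llm` for a real client changes the "ingredient" without touching the template or the stages, which is the separation the analogy describes.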

Getting Started with Code

Here’s how you can create your first LLM application with LLM Flows:

1. Set Up Your LLM

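To start using an LLM, create an instance of a model class (here, OpenAI) and call its generate() method, which returns the generated text together with data about the call and the model configuration: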

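```python
from llmflows.llms import OpenAI

llm = OpenAI(api_key="your-openai-api-key")

# generate() returns the generated text, data about the call, and the model configuration
result, call_data, model_config = llm.generate(
    prompt="Generate a cool title for an 80s rock song"
)
print(result)
```

2. Create Prompt Templates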
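A prompt template is a string with named placeholders, such as `{topic}`, that are filled in before the prompt is sent to the model. As a stdlib-only sketch of the idea (not the LLM Flows implementation), Python's `string.Formatter` can list a template's variables so that a missing value is reported clearly instead of surfacing as a bare `KeyError`. The `template_variables` and `render` helpers are hypothetical.

```python
from string import Formatter


def template_variables(template: str) -> set[str]:
    """Return the names of the {placeholders} used in a format-style template."""
    return {
        field_name
        for _, field_name, _, _ in Formatter().parse(template)
        if field_name  # literal-only chunks yield None, and "{}" yields ""
    }


def render(template: str, **values: str) -> str:
    """Fill the template, failing with a readable message if a variable is missing."""
    missing = template_variables(template) - set(values)
    if missing:
        raise ValueError(f"missing template variables: {sorted(missing)}")
    return template.format(**values)


prompt = render("Generate a title for a 90s hip-hop song about {topic}.", topic="friendship")
print(prompt)
```

Checking for missing variables up front means a misspelled keyword argument fails before any API call is made.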

You can make your prompts dynamic by using the PromptTemplate class:

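```python
from llmflows.prompts import PromptTemplate

prompt_template = PromptTemplate(prompt="Generate a title for a 90s hip-hop song about {topic}.")

# Fill the {topic} placeholder to get the final prompt string
llm_prompt = prompt_template.get_prompt(topic="friendship")

# Reuse the llm instance from step 1; generate() returns a tuple, so keep only the text
song_title, _, _ = llm.generate(llm_prompt)

print(song_title)
```

3. Build a Simple Chatbot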
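Chat models do not remember earlier turns by themselves: each request has to include the conversation so far. The bookkeeping involved can be sketched in plain Python with a hypothetical `ChatHistory` class and a stand-in `echo_model` function in place of a real LLM call. The role/content message format follows the common chat-API convention and is an assumption here, not a description of LLM Flows internals.

```python
from dataclasses import dataclass, field


@dataclass
class ChatHistory:
    """Hypothetical ordered list of chat messages, oldest first."""
    messages: list[dict[str, str]] = field(default_factory=list)

    def add_user_message(self, text: str) -> None:
        self.messages.append({"role": "user", "content": text})

    def add_ai_message(self, text: str) -> None:
        self.messages.append({"role": "assistant", "content": text})


def echo_model(messages: list[dict[str, str]]) -> str:
    """Stand-in for an LLM call: reports how many messages it received."""
    return f"I have seen {len(messages)} message(s); you said: {messages[-1]['content']}"


history = ChatHistory()
for user_text in ["Hi!", "What did I just say?"]:
    history.add_user_message(user_text)
    reply = echo_model(history.messages)  # the full history is sent on every turn
    history.add_ai_message(reply)
    print(f"LLM: {reply}")
```

Because the history grows with every turn, real applications often trim or summarize older messages to stay within the model's context window.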

To create a chatbot, you need to manage the conversation history:

```python
from llmflows.llms import OpenAIChat, MessageHistory

llm = OpenAIChat(api_key="your-openai-api-key")
message_history = MessageHistory()

# Runs until interrupted (Ctrl+C)
while True:
    user_message = input("You: ")
    message_history.add_user_message(user_message)

    # Pass the whole conversation so far, not just the latest message
    llm_response, call_data, model_config = llm.generate(message_history)
    message_history.add_ai_message(llm_response)

    print(f"LLM: {llm_response}")
```

4. Creating Flows for Complex Applications
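When one LLM call needs the output of another, the calls have to run in dependency order, while independent calls can run in any order. Python's standard `graphlib.TopologicalSorter` (Python 3.9+) is a quick way to see that ordering. The step names and the `deps` mapping below are purely illustrative and are not how LLM Flows represents a flow internally.

```python
from graphlib import TopologicalSorter

# Each step maps to the set of steps whose outputs it needs (hypothetical names).
deps = {
    "movie_title": set(),
    "song_title": {"movie_title"},
    "main_characters": {"movie_title"},
    "song_lyrics": {"song_title", "main_characters"},
}

sorter = TopologicalSorter(deps)
sorter.prepare()
batches = []
while sorter.is_active():
    ready = sorted(sorter.get_ready())  # steps whose inputs are all available
    batches.append(ready)
    sorter.done(*ready)  # mark them finished so their dependents become ready

for i, batch in enumerate(batches, start=1):
    print(f"stage {i}: {batch}")
```

Steps that land in the same stage do not depend on each other, so a framework is free to run them concurrently.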

In complex applications, you can create flowsteps to manage dependencies between prompts and LLM calls. For example:

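```python
from llmflows.flows import Flow, FlowStep
from llmflows.llms import OpenAI
from llmflows.prompts import PromptTemplate

# A FlowStep pairs an LLM with a prompt template and stores the result under output_key
movie_title_flowstep = FlowStep(
    name="Movie Title Flowstep",
    llm=OpenAI(api_key="your-openai-api-key"),
    prompt_template=PromptTemplate(prompt="What is a good title of a movie about {topic}?"),
    output_key="movie_title",
)

# Define further flow steps and connect them according to your application logic,
# e.g. movie_title_flowstep.connect(song_title_flowstep)

soundtrack_flow = Flow(movie_title_flowstep)
results = soundtrack_flow.start(topic="friendship", verbose=True)
```

Troubleshooting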

If you encounter any obstacles while working with LLM Flows, consider the following troubleshooting tips:

- Double-check your API keys and ensure they are valid.

- Review the console output for any error messages that provide hints.

- Make sure you have correctly installed all dependencies.

- Consult the documentation for detailed usage instructions.

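A common cause of authentication errors is a key that was never loaded. One option, assumed here rather than required by LLM Flows, is to keep the key in an environment variable such as `OPENAI_API_KEY` and fail early if it is missing. `load_api_key` is a hypothetical helper.

```python
import os


def load_api_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Read an API key from the environment and fail early with a clear message."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"{var_name} is not set; export it in your shell before running the app"
        )
    return key
```

The result can then be passed as `api_key=load_api_key()` when creating `OpenAI` or `OpenAIChat`, which also keeps the key out of your source code.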

Conclusion

At fxis.ai, we believe that advancements like LLM Flows are crucial for the future of AI. They enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.

More Information

For more information, see the LLM Flows documentation, and browse the examples folder in the LLM Flows repository for practical implementations.