This tutorial walks through building an image captioning model with PyTorch: a model that takes an image as input and generates a descriptive caption for it.

Objective

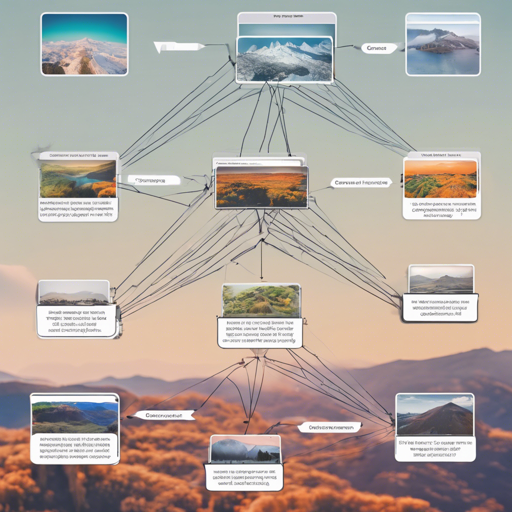

The aim is to build a model that generates descriptive captions for images. We will implement the approach from the paper Show, Attend and Tell: Neural Image Caption Generation with Visual Attention (Xu et al., 2015). Its attention mechanism lets the model learn where to look in the image as it generates each word of the caption.

Understanding the Concepts

Before diving into the implementation, it’s essential to understand a few key concepts:

- Image Captioning: The task of generating textual descriptions for images.

- Encoder-Decoder Architecture: An architecture in which an Encoder turns the input into a learned feature representation and a Decoder generates the output sequence from that representation.

- Attention: A mechanism that lets the model weight some parts of the input more heavily than others at each output step, so that each generated word is conditioned on the most relevant image regions.

- Transfer Learning: Utilizing a pre-trained model to improve performance on a related task.

- Beam Search: A search algorithm that keeps the k most probable partial sequences at each step instead of committing to the single best next word, which usually yields a higher-scoring caption than greedy decoding.
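
As a rough illustration of the attention concept listed above, the following sketch implements one common formulation, additive (soft) attention, in PyTorch. The class name `SoftAttention`, the layer names, and all sizes (196 image regions, 2048-dimensional features, a 512-dimensional decoder state) are assumptions chosen for this example rather than values prescribed by the tutorial.

```python
import torch
import torch.nn as nn


class SoftAttention(nn.Module):
    """Additive (soft) attention over image regions. All sizes are illustrative."""

    def __init__(self, encoder_dim, decoder_dim, attention_dim):
        super().__init__()
        self.encoder_proj = nn.Linear(encoder_dim, attention_dim)
        self.decoder_proj = nn.Linear(decoder_dim, attention_dim)
        self.score = nn.Linear(attention_dim, 1)

    def forward(self, features, hidden):
        # features: (batch, num_regions, encoder_dim)
        # hidden:   (batch, decoder_dim), the decoder's current state
        energy = torch.tanh(
            self.encoder_proj(features) + self.decoder_proj(hidden).unsqueeze(1)
        )
        scores = self.score(energy).squeeze(2)                 # (batch, num_regions)
        alpha = torch.softmax(scores, dim=1)                   # weights sum to 1 per image
        context = (features * alpha.unsqueeze(2)).sum(dim=1)   # (batch, encoder_dim)
        return context, alpha


# Hypothetical shapes: a 14x14 grid of 2048-dimensional region features.
attention = SoftAttention(encoder_dim=2048, decoder_dim=512, attention_dim=256)
features = torch.randn(2, 196, 2048)
hidden = torch.randn(2, 512)
context, alpha = attention(features, hidden)
```

Here `alpha` holds one weight per image region, and `context` is the weighted average of the region features that the Decoder would consume at the current step.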

Implementation Overview

This model consists of an Encoder that processes the image and a Decoder that generates captions. The following analogy shows how they work together:

Imagine an art critic (the Decoder) who looks at a painting (the image processed by the Encoder) and gives a detailed description. The critic needs to focus on different parts of the painting to correctly comment on its elements (the Attention mechanism). For instance, when describing a scene with a tree and a boy, at one moment, the critic may focus on the tree’s leaves, and at another, on the boy’s actions. This back-and-forth gaze is what enables a comprehensive description.

Implementation Steps

Let’s break down the implementation into key segments:

1. Dataset Preparation

We use the MS COCO 2014 dataset. Download the training and validation images along with their captions, then organize the data into HDF5 and JSON files for efficient loading.
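
One part of this preparation is turning tokenized captions into fixed-length lists of integers. The sketch below shows one possible way to do that in plain Python. The helper names `build_word_map` and `encode_caption`, the special tokens `<start>`, `<end>`, `<unk>`, and `<pad>`, and the two sample captions are all assumptions made for illustration.

```python
import json
from collections import Counter


def build_word_map(captions, min_freq=1):
    """Map each sufficiently frequent word to an integer id (hypothetical helper)."""
    counts = Counter(word for caption in captions for word in caption)
    words = sorted(w for w, n in counts.items() if n >= min_freq)
    word_map = {w: i + 1 for i, w in enumerate(words)}  # id 0 is reserved for padding
    word_map["<unk>"] = len(word_map) + 1
    word_map["<start>"] = len(word_map) + 1
    word_map["<end>"] = len(word_map) + 1
    word_map["<pad>"] = 0
    return word_map


def encode_caption(caption, word_map, max_len):
    """Wrap a caption in <start>/<end> and pad it to max_len + 2 ids."""
    tokens = [word_map.get(w, word_map["<unk>"]) for w in caption[:max_len]]
    encoded = [word_map["<start>"]] + tokens + [word_map["<end>"]]
    length = len(encoded)  # true length, before padding
    encoded += [word_map["<pad>"]] * (max_len + 2 - length)
    return encoded, length


# Made-up sample captions, already tokenized.
captions = [["a", "boy", "climbs", "a", "tree"], ["a", "dog", "runs"]]
word_map = build_word_map(captions)
encoded, length = encode_caption(captions[1], word_map, max_len=5)
word_map_json = json.dumps(word_map)  # the word map serializes directly to JSON

print(encoded, length)  # [8, 1, 4, 5, 9, 0, 0] 5
```

Storing the true length next to each padded caption lets the training loop ignore padding positions later.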

2. Model Architecture

We will utilize a pre-trained ResNet-101 as our Encoder and an LSTM as our Decoder.

3. Training the Model

Train the model on the training set and evaluate its performance on the validation set. We will use the Adam optimizer and CrossEntropyLoss during training.
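
The training loop can be sketched as follows, using Adam and `CrossEntropyLoss` with teacher forcing. `TinyCaptioner` is a hypothetical stand-in that is far smaller than the real model, and the features and captions are random tensors. The learning rate, batch size, and step count are likewise assumptions chosen so the example runs quickly.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

vocab_size, embed_dim, hidden_dim, feat_dim = 50, 32, 64, 128  # illustrative sizes
PAD = 0  # assumed id of the padding token


class TinyCaptioner(nn.Module):
    """Hypothetical stand-in decoder, used only to demonstrate the loop."""

    def __init__(self):
        super().__init__()
        self.init_h = nn.Linear(feat_dim, hidden_dim)
        self.embedding = nn.Embedding(vocab_size, embed_dim, padding_idx=PAD)
        self.lstm = nn.LSTM(embed_dim, hidden_dim, batch_first=True)
        self.fc = nn.Linear(hidden_dim, vocab_size)

    def forward(self, features, captions_in):
        h0 = torch.tanh(self.init_h(features)).unsqueeze(0)  # (1, batch, hidden)
        out, _ = self.lstm(self.embedding(captions_in), (h0, torch.zeros_like(h0)))
        return self.fc(out)                                   # (batch, steps, vocab)


model = TinyCaptioner()
criterion = nn.CrossEntropyLoss(ignore_index=PAD)  # padding does not contribute to the loss
optimizer = torch.optim.Adam(model.parameters(), lr=1e-2)

features = torch.randn(4, feat_dim)               # fake image features
captions = torch.randint(1, vocab_size, (4, 8))   # fake encoded captions

losses = []
for _ in range(20):
    # Teacher forcing: feed words 0..n-2 and predict words 1..n-1.
    logits = model(features, captions[:, :-1])
    loss = criterion(logits.reshape(-1, vocab_size), captions[:, 1:].reshape(-1))
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
    losses.append(loss.item())
```

On a single fixed batch like this one the loss should fall steadily. On real data, track the validation loss as well to detect overfitting.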

4. Inference

To caption a new image, the model encodes the image and then generates the caption one word at a time, attending to different parts of the image at each step. Beam search is used to choose the final word sequence.
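
To show the idea behind beam search without a trained model, here is a simplified sketch in plain Python. The `beam_search` function, the `step_fn` interface (which returns a log-probability per candidate next token), and the toy probability table are all assumptions for illustration. A real decoder would compute these scores from the image and the words generated so far.

```python
import math


def beam_search(step_fn, start_token, end_token, beam_size=3, max_len=10):
    """Keep the beam_size best partial sequences at every step."""
    beams = [(0.0, [start_token])]  # (cumulative log-probability, tokens)
    completed = []
    for _ in range(max_len):
        candidates = []
        for score, seq in beams:
            for token, log_prob in step_fn(seq).items():
                candidates.append((score + log_prob, seq + [token]))
        candidates.sort(key=lambda c: c[0], reverse=True)
        beams = []
        for score, seq in candidates[:beam_size]:
            if seq[-1] == end_token:
                completed.append((score, seq))  # finished caption
            else:
                beams.append((score, seq))      # still being extended
        if not beams:
            break
    best_score, best_seq = max(completed or beams, key=lambda c: c[0])
    return best_seq, best_score


# Toy next-word probabilities, keyed by the previous word (made up for this example).
TOY_MODEL = {
    "<start>": {"a": 0.6, "the": 0.4},
    "a": {"dog": 0.4, "cat": 0.3, "bird": 0.3},
    "the": {"boy": 0.9, "tree": 0.1},
}


def toy_step(seq):
    probs = TOY_MODEL.get(seq[-1], {"<end>": 1.0})
    return {token: math.log(p) for token, p in probs.items()}


greedy_seq, greedy_score = beam_search(toy_step, "<start>", "<end>", beam_size=1)
beam_seq, beam_score = beam_search(toy_step, "<start>", "<end>", beam_size=2)

print(greedy_seq)  # ['<start>', 'a', 'dog', '<end>']
print(beam_seq)    # ['<start>', 'the', 'boy', '<end>']
```

With a beam size of 1 the search is greedy: it commits to "a" because that is the best first word, and ends with a sequence of probability 0.24. With a beam size of 2 it also keeps "the" alive and finds "the boy", which has probability 0.36.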

Troubleshooting Ideas

If you encounter issues during implementation, consider these troubleshooting tips:

- Ensure all dependencies are installed correctly, especially PyTorch and torchvision.

- Check your image preprocessing steps, such as resizing and normalization, to conform with the model requirements.

- If you face errors related to tensor dimensions, carefully review your input shapes.

- If caption quality is poor, try fine-tuning the pre-trained Encoder.
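
The preprocessing and tensor-shape checks from the list above can be sketched as follows. The `preprocess` helper and the 256x256 target size are assumptions for this example. The mean and standard deviation are the standard ImageNet statistics expected by torchvision's pre-trained models.

```python
import torch
import torch.nn.functional as F

# Per-channel ImageNet statistics, shaped to broadcast over (3, H, W).
IMAGENET_MEAN = torch.tensor([0.485, 0.456, 0.406]).view(3, 1, 1)
IMAGENET_STD = torch.tensor([0.229, 0.224, 0.225]).view(3, 1, 1)


def preprocess(image_uint8, size=256):
    """Resize and normalize a (3, H, W) image tensor with values in [0, 255]."""
    if image_uint8.dim() != 3 or image_uint8.shape[0] != 3:
        # Fail early with a readable message instead of a cryptic shape error later.
        raise ValueError(f"expected shape (3, H, W), got {tuple(image_uint8.shape)}")
    image = image_uint8.float() / 255.0
    image = F.interpolate(
        image.unsqueeze(0), size=(size, size), mode="bilinear", align_corners=False
    )
    return (image.squeeze(0) - IMAGENET_MEAN) / IMAGENET_STD


raw = torch.randint(0, 256, (3, 480, 640), dtype=torch.uint8)  # stand-in for a loaded image
batch = preprocess(raw).unsqueeze(0)  # add the batch dimension the Encoder expects
print(batch.shape)  # torch.Size([1, 3, 256, 256])
```

Printing the shape at each stage, as in the last line, is often the quickest way to locate a dimension mismatch.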

Conclusion

By following these steps and understanding the key concepts, you should be well on your way to building an effective image captioning model with PyTorch.