In this blog post, we will explore how to build a Not Safe For Work (NSFW) image classifier with PyTorch, using the google/vit-base-patch16-224-in21k Vision Transformer model from Hugging Face as the pre-trained backbone. The goal is to detect, with high accuracy, images that may not be safe for work.

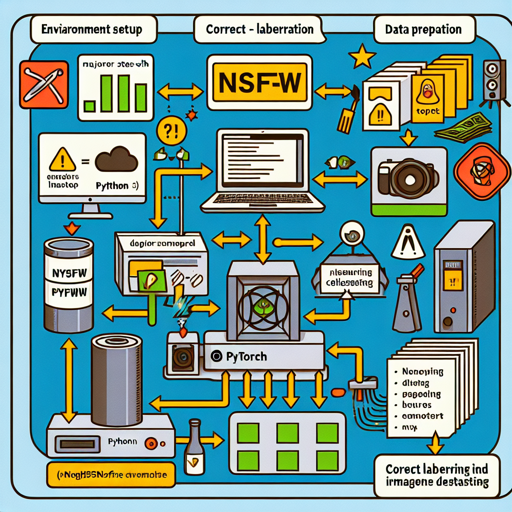

Step 1: Setting Up Your Environment

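Before you start, make sure Python and PyTorch are installed in your development environment. The official PyTorch website provides an install command tailored to your operating system, package manager, and CUDA version. You will also need a library that can load the Hugging Face checkpoint, such as transformers.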
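
A quick way to confirm the environment is ready is to ask Python which versions it can see. The following is a minimal sketch using only the standard library; the package list (torch and transformers) is an assumption about what this tutorial needs, so adjust it to your own setup.

```python
from importlib import metadata


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    # Assumed package list for this tutorial; not taken from the original post.
    for name, version in installed_versions(["torch", "transformers"]).items():
        print(f"{name}: {version or 'NOT INSTALLED'}")
```

Any package reported as missing can then be installed before moving on to the data.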

Step 2: Data Preparation

You will need a labeled image dataset for the classifier. For this project, we will use the ‘deepghs/nsfw_detect’ dataset. Check that your images are labeled correctly, because mislabeled examples directly limit the accuracy your model can reach. Here’s how you can prepare your data:

- Download the ‘deepghs/nsfw_detect’ dataset.

- Organize the images into folders based on their labels (NSFW and Safe).

- Split your data into training and validation sets.
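
The folder organization and train/validation split can be sketched as below. This is an illustrative helper, not part of any library: the function name `split_dataset`, the 80/20 split, and the `train`/`val` output layout are all assumptions. It expects one sub-folder per label inside the source directory.

```python
import random
import shutil
from pathlib import Path


def split_dataset(src_dir, dst_dir, val_fraction=0.2, seed=42):
    """Copy images from src_dir/<label>/ into dst_dir/{train,val}/<label>/."""
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    counts = {}
    for label_dir in sorted(p for p in Path(src_dir).iterdir() if p.is_dir()):
        files = sorted(f for f in label_dir.iterdir() if f.is_file())
        rng.shuffle(files)
        n_val = int(len(files) * val_fraction)
        splits = {"val": files[:n_val], "train": files[n_val:]}
        for split, members in splits.items():
            target = Path(dst_dir) / split / label_dir.name
            target.mkdir(parents=True, exist_ok=True)
            for f in members:
                shutil.copy2(f, target / f.name)
        counts[label_dir.name] = {k: len(v) for k, v in splits.items()}
    return counts
```

Splitting per label keeps the class balance roughly the same in both sets. Keep the destination directory outside the source directory so that a second run does not treat the output folders as labels.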

Step 3: Building the Classifier

Using the ‘google/vit-base-patch16-224-in21k’ model, you can build your classifier. It is a base-size Vision Transformer that splits each 224×224 input image into 16×16 patches and was pre-trained on ImageNet-21k, which makes it a strong starting point for your image classification task. Think of it as a robust frame that you can decorate with unique features (your specific dataset).

Step 4: Training Your Model

Train your NSFW classifier using the prepared dataset. Here’s the model definition that the training loop builds on:

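    import torch.nn as nn
    from transformers import ViTForImageClassification

    class NSFWClassifier(nn.Module):
        def __init__(self, num_labels=2):
            super().__init__()
            # Load the pre-trained ViT backbone from the Hugging Face Hub
            # (assumes the transformers library); the classification head starts untrained.
            self.model = ViTForImageClassification.from_pretrained(
                "google/vit-base-patch16-224-in21k", num_labels=num_labels
            )

        def forward(self, x):
            # x: image batch of shape (batch, 3, 224, 224); returns raw class logits
            return self.model(pixel_values=x).logits

    # Setup Training Loop...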
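
The training loop left as a placeholder above could look roughly like this. It is a minimal sketch under several assumptions that are not in the original: the function name `train`, the AdamW optimizer, the learning rate, and the epoch count are illustrative choices, the model’s forward pass is assumed to return a tensor of class logits, and `train_loader` is assumed to yield `(images, labels)` batches that are already resized and normalized.

```python
import torch
from torch import nn


def train(model, train_loader, epochs=3, lr=2e-5, device=None):
    """Fine-tune `model` with cross-entropy loss; returns the mean loss per epoch."""
    device = device or ("cuda" if torch.cuda.is_available() else "cpu")
    model.to(device)
    optimizer = torch.optim.AdamW(model.parameters(), lr=lr)
    criterion = nn.CrossEntropyLoss()
    history = []
    for epoch in range(epochs):
        model.train()
        running_loss, seen = 0.0, 0
        for images, labels in train_loader:
            images, labels = images.to(device), labels.to(device)
            optimizer.zero_grad()
            logits = model(images)  # expected shape: (batch, num_classes)
            loss = criterion(logits, labels)
            loss.backward()
            optimizer.step()
            # Weight by batch size so the epoch mean is exact even if the last batch is smaller.
            running_loss += loss.item() * labels.size(0)
            seen += labels.size(0)
        history.append(running_loss / seen)
        print(f"epoch {epoch + 1}: mean loss {history[-1]:.4f}")
    return history
```

A small learning rate is a common choice when fine-tuning a pre-trained backbone, since large updates can erase what the model already learned; treat the default here as a starting point to tune rather than a recommendation.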

Here’s an analogy: Imagine you’re in a music competition where the judges expect your unique style. You’ve got a basic melody (the pre-trained model), but you need to add your notes (your training data) to create a remarkable performance (a well-functioning classifier).

Step 5: Evaluating Your Model

Once the model is trained, evaluate it on the validation set using metrics such as accuracy. With this setup you should see accuracy close to 92%, though the exact figure depends on your data split and training settings. A result in that range indicates that your model is classifying NSFW content well.
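
To make the metric concrete, here is a small sketch of how accuracy can be computed from predicted and true labels. The confusion counts are an addition for illustration, since accuracy alone does not show whether errors are missed NSFW images or safe images flagged by mistake. The label encoding (1 for NSFW, 0 for Safe) and both function names are assumptions.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the ground-truth labels."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    if not labels:
        raise ValueError("cannot compute accuracy on an empty set")
    correct = sum(p == t for p, t in zip(predictions, labels))
    return correct / len(labels)


def confusion_counts(predictions, labels, positive=1):
    """Count TP/FP/TN/FN, treating `positive` as the NSFW class."""
    counts = {"tp": 0, "fp": 0, "tn": 0, "fn": 0}
    for p, t in zip(predictions, labels):
        if p == positive:
            counts["tp" if t == positive else "fp"] += 1
        else:
            counts["fn" if t == positive else "tn"] += 1
    return counts


if __name__ == "__main__":
    preds = [1, 0, 1, 1, 0, 0]   # hypothetical model outputs
    truth = [1, 0, 0, 1, 0, 1]   # hypothetical ground truth
    print(f"accuracy: {accuracy(preds, truth):.2%}")
    print(confusion_counts(preds, truth))
```

For a content filter, the false-negative count is often the number to watch, because those are the NSFW images the model let through.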

Troubleshooting Tips

Here are some common issues you might encounter and how to resolve them:

- Low Accuracy: If your accuracy is not meeting expectations, consider adding more data to your training set or tuning hyperparameters such as the learning rate, batch size, and number of epochs.

- Training Crashes: Check that your hardware (GPU/CPU and available memory) is sufficient for the model you are using. If you run out of memory, try a smaller batch size.

- Import Errors: Confirm that all required libraries are installed and that their versions are compatible with each other.
