In the ever-evolving world of artificial intelligence, researchers keep developing new ways to strengthen machine learning models. One exciting advancement is DIVA, a post-training approach designed to enhance the visual capabilities of CLIP models. This post explains what DIVA is and how to use it to improve CLIP’s performance on a range of visual comprehension tasks.

What is DIVA?

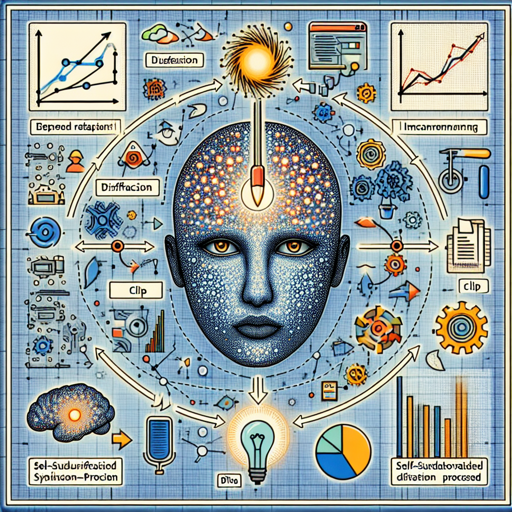

DIVA stands for DIffusion model as a Visual Assistant for CLIP. It uses a text-to-image diffusion model to refine CLIP’s visual representations through a self-supervised diffusion process. Training needs only images, with no paired text: the diffusion model provides generative feedback that is used to mitigate CLIP’s visual shortcomings.
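To make the feedback loop concrete, here is a toy sketch. It assumes, as a simplification, that the CLIP image encoder's features condition a frozen diffusion denoiser and that the denoising (noise-prediction) loss is backpropagated into the encoder alone. Everything in it is a hypothetical stand-in: `W_enc` plays the trainable visual encoder, the frozen matrices `A` and `B` play the denoiser, and the gradient is derived by hand with NumPy instead of autograd. It is not code from the DIVA repository.

```python
import numpy as np

rng = np.random.default_rng(0)
D_IMG, D_FEAT = 16, 4  # toy sizes for a flattened "image" and its visual feature

# Hypothetical stand-ins, not DIVA's real networks:
W_enc = rng.normal(scale=0.1, size=(D_FEAT, D_IMG))  # trainable "CLIP visual encoder"
A = rng.normal(scale=0.1, size=(D_IMG, D_IMG))       # frozen denoiser weights on x_t
B = rng.normal(scale=0.1, size=(D_IMG, D_FEAT))      # frozen denoiser weights on the condition


def add_noise(x0, eps, alpha_bar):
    """Forward diffusion: x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * eps."""
    return np.sqrt(alpha_bar) * x0 + np.sqrt(1.0 - alpha_bar) * eps


def denoising_loss(W_enc, x0, eps, alpha_bar):
    """Noise-prediction MSE of the frozen denoiser, conditioned on encoder features."""
    cond = W_enc @ x0                      # image-only: no text is involved
    x_t = add_noise(x0, eps, alpha_bar)
    residual = (A @ x_t + B @ cond) - eps  # predicted noise minus true noise
    return np.mean(residual ** 2), residual


def encoder_update(W_enc, x0, eps, alpha_bar, lr=0.1):
    """One gradient step on the encoder; A and B are never modified."""
    loss, residual = denoising_loss(W_enc, x0, eps, alpha_bar)
    # For L = mean(residual^2): dL/dW_enc = (2 / n) * (B^T residual) x0^T
    grad = (2.0 / residual.size) * np.outer(B.T @ residual, x0)
    return W_enc - lr * grad, loss


# Usage: feedback from the frozen denoiser nudges the encoder on one unlabeled image.
x0 = rng.normal(size=D_IMG)
eps = rng.normal(size=D_IMG)
W_new, loss_before = encoder_update(W_enc, x0, eps, alpha_bar=0.5)
loss_after, _ = denoising_loss(W_new, x0, eps, alpha_bar=0.5)
print(f"loss before: {loss_before:.4f}, after: {loss_after:.4f}")
```

A linear toy keeps the gradient easy to verify by hand; a real setup would rely on an autograd framework, pretrained networks, and the training recipe in the DIVA repository.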

Why Use DIVA?

DIVA improves CLIP’s performance on the challenging MMVP-VLM benchmark by roughly 3-7%. It also improves multimodal large language models (MLLMs) and vision models built on CLIP on tasks that require deep visual understanding, such as multimodal understanding and segmentation.
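Pair-based benchmarks are strict, which helps explain why a few points matter. Below is a minimal sketch, assuming a pairwise protocol in which each test item holds two images and two statements, and an item counts as correct only when both images score highest with their own statement. The 2×2 similarity layout and the function names are hypothetical; consult the MMVP-VLM benchmark itself for its exact scoring rules.

```python
from typing import Sequence

Matrix2x2 = Sequence[Sequence[float]]


def pair_correct(sim: Matrix2x2) -> bool:
    """sim[i][j] is the similarity of image i with statement j.

    The pair counts only if image 0 prefers statement 0 AND image 1 prefers statement 1.
    """
    return sim[0][0] > sim[0][1] and sim[1][1] > sim[1][0]


def pair_accuracy(sims: Sequence[Matrix2x2]) -> float:
    """Percentage of pairs in which both images are matched correctly."""
    if not sims:
        raise ValueError("need at least one pair")
    return 100.0 * sum(pair_correct(s) for s in sims) / len(sims)


# Usage: the second pair fails because image 1 prefers the wrong statement.
pairs = [
    [[0.31, 0.22], [0.20, 0.29]],
    [[0.31, 0.22], [0.27, 0.25]],
]
print(pair_accuracy(pairs))  # 50.0
```

Because a single mismatched image fails the whole pair, small gains in fine-grained perception can move this score noticeably.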

Setting Up DIVA for CLIP: A Step-by-Step Guide

Follow these steps to implement DIVA and enhance your CLIP model.

Step 1: Download DIVA Code

- Visit the official DIVA GitHub repository.

- Clone the repository to your local machine.

Step 2: Prepare Your CLIP Model

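Make sure you are using a compatible CLIP model. The models recommended for DIVA are listed below; the superscript gives the input image resolution (for example, 224² means 224×224 pixels).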

- OpenAI ViT-L-14 224²

- OpenAI ViT-L-14 336²

- MetaCLIP ViT-L-14 224²

- DFN ViT-H-14 224²
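If you want to inspect these backbones before applying DIVA, one option is the open_clip library. The sketch below maps each recommended model to an open_clip `(model_name, pretrained)` pair and loads it lazily. The tags are our assumption of the closest matching checkpoints (especially for MetaCLIP and DFN), so verify them against `open_clip.list_pretrained()`. This loads the original weights, not DIVA-enhanced ones, which come from the DIVA repository.

```python
from typing import Dict, Tuple

# Assumed open_clip (model_name, pretrained) tags for the recommended backbones.
# Verify against open_clip.list_pretrained(); these are not taken from the DIVA repo.
BACKBONES: Dict[str, Tuple[str, str]] = {
    "openai-vit-l-14-224": ("ViT-L-14", "openai"),
    "openai-vit-l-14-336": ("ViT-L-14-336", "openai"),
    "metaclip-vit-l-14-224": ("ViT-L-14-quickgelu", "metaclip_fullcc"),  # assumed tag
    "dfn-vit-h-14-224": ("ViT-H-14-quickgelu", "dfn5b"),  # assumed tag
}


def load_backbone(key: str):
    """Return (model, preprocess) for one of the keys above (downloads weights)."""
    model_name, pretrained = BACKBONES[key]  # KeyError for unsupported keys
    import open_clip  # imported lazily so the mapping is usable without the package

    model, _, preprocess = open_clip.create_model_and_transforms(
        model_name, pretrained=pretrained
    )
    model.eval()
    return model, preprocess


def encode_image(model, preprocess, pil_image):
    """Embed a single PIL image with the CLIP visual encoder."""
    import torch

    with torch.no_grad():
        return model.encode_image(preprocess(pil_image).unsqueeze(0))
```

For example, `load_backbone("openai-vit-l-14-336")` followed by `encode_image(model, preprocess, Image.open("cat.jpg"))` would return one embedding row; the file name is illustrative.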

Step 3: Run DIVA

Once you have the code and a model ready, follow the instructions in the repository to run DIVA. It refines your CLIP model’s visual representations using the self-supervised diffusion process described above.
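The diffusion side of this process follows standard diffusion training: each step picks a random timestep, corrupts the image with Gaussian noise at that step's level, and asks the denoiser to predict the noise. As a hedged illustration, the sketch below uses the linear beta schedule from the original DDPM paper (1e-4 to 0.02 over 1000 steps); a real run would use the schedule of the pretrained diffusion model, which may differ. The function names are hypothetical.

```python
import numpy as np


def linear_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """alpha_bar_t = prod_{s<=t} (1 - beta_s) for a linear beta schedule."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)


def sample_training_input(x0, alpha_bars, rng):
    """Pick a random timestep and return (t, noisy image x_t, target noise eps)."""
    t = int(rng.integers(0, len(alpha_bars)))
    eps = rng.standard_normal(x0.shape)
    a = alpha_bars[t]
    x_t = np.sqrt(a) * x0 + np.sqrt(1.0 - a) * eps
    return t, x_t, eps


# Usage: early timesteps keep most of the image, late ones are almost pure noise.
alpha_bars = linear_alpha_bars()
print(alpha_bars[0], alpha_bars[-1])
t, x_t, eps = sample_training_input(np.ones((3, 8, 8)), alpha_bars, np.random.default_rng(0))
```

The denoiser's job is to recover `eps` from `x_t`; in DIVA's setting, how well it manages that while conditioned on CLIP's features is the signal fed back to CLIP.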

Understanding DIVA: A Culinary Analogy

Think of using DIVA like preparing a gourmet dish. The CLIP model is your base recipe, and DIVA contributes the spices and ingredients that bring out the flavors (the representations). Just as a dish depends on precise measurements and careful cooking, DIVA’s gains depend on how the diffusion model’s generative feedback is applied to CLIP. Done well, the result is a model with stronger visual comprehension.

Troubleshooting Common Issues

If you encounter challenges while using DIVA, consider the following troubleshooting tips:

- Installation Errors: Make sure every dependency listed in the repository documentation is installed; a single missing library can halt the process.

- Performance Isn’t Improving: Check that your input images meet the model’s requirements, such as the expected input resolution. Input quality can significantly influence the output.

- Incompatibility Issues: If library versions conflict, check the repository for the supported versions or for updates.
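For the installation and version issues above, a quick environment report can save time. This is a minimal sketch using only the standard library; the package list is a hypothetical placeholder, so replace it with the dependencies the DIVA repository actually lists.

```python
import importlib.util
from importlib import metadata
from typing import Dict, Optional

# Hypothetical placeholder: map import name -> distribution name on PyPI.
REQUIRED: Dict[str, str] = {
    "torch": "torch",
    "open_clip": "open_clip_torch",
    "diffusers": "diffusers",
}


def check_environment(required: Dict[str, str] = REQUIRED) -> Dict[str, Optional[str]]:
    """Return {module: version}, with None for modules that cannot be found."""
    report: Dict[str, Optional[str]] = {}
    for module, dist in required.items():
        if importlib.util.find_spec(module) is None:
            report[module] = None  # missing: install it before running DIVA
            continue
        try:
            report[module] = metadata.version(dist)
        except metadata.PackageNotFoundError:
            report[module] = "unknown"  # importable, but no version metadata found
    return report


if __name__ == "__main__":
    for name, version in check_environment().items():
        print(f"{name:12s} {version or 'MISSING'}")
```

Comparing this report with the versions pinned in the repository is usually the fastest way to spot a mismatch.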


Conclusion

DIVA is a notable step toward improving the visual processing of CLIP models. By using a diffusion model’s generative feedback to refine CLIP’s visual representations, it delivers measurable gains on fine-grained visual tasks such as the MMVP-VLM benchmark. At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team continually explores new methodologies to push the envelope in artificial intelligence, so that our clients benefit from the latest technological innovations.