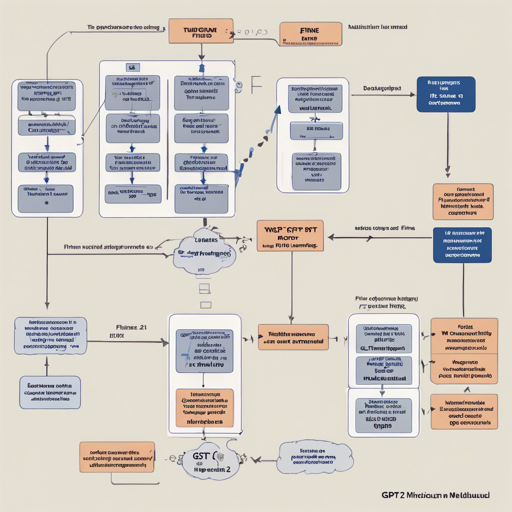

Fine-tuning a pre-trained language model adapts it to a specific task or domain without training from scratch. In this article, we’ll walk you through the process of fine-tuning the GPT-2 Medium model on the MultiWOZ21 (MultiWOZ 2.1) dialogue dataset. If you’ve been curious about improving your natural language generation for conversational use cases, you’ve landed on the right page!

Understanding the Basics

Before diving into the nitty-gritty, let’s first get familiar with the building blocks you’ll be working with:

- GPT-2 Medium: The medium-sized variant of GPT-2 (roughly 350 million parameters), a transformer-based language model that generates text by predicting the next token.

- MultiWOZ21: Version 2.1 of MultiWOZ, a multi-domain dataset of task-oriented dialogues (for example, booking hotels, restaurants, and trains) that is widely used for training conversational agents.
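To make the pairing of these two building blocks concrete, here is a minimal sketch of how a dialogue could be flattened into a single string that a causal language model such as GPT-2 can train on. The article does not say how the dialogues were serialized, so the `user:`/`system:` speaker tags and the `format_dialogue` helper are assumptions for illustration only; `<|endoftext|>` is GPT-2's end-of-text token.

```python
# Hypothetical serialization: the speaker tags below are assumptions,
# not the format used by the original training run.
EOS_TOKEN = "<|endoftext|>"  # GPT-2's end-of-text token


def format_dialogue(turns):
    """Flatten alternating user/system utterances into one training string."""
    lines = []
    for index, utterance in enumerate(turns):
        # Assume the user speaks first and speakers strictly alternate.
        speaker = "user" if index % 2 == 0 else "system"
        lines.append(f"{speaker}: {utterance.strip()}")
    return "\n".join(lines) + EOS_TOKEN


example = format_dialogue([
    "I need a cheap hotel in the centre.",
    "Sure, how many nights will you be staying?",
])
print(example)
```

Each formatted string would then be tokenized and fed to the model as ordinary next-token-prediction training data.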

Getting Started

To fine-tune the model, you’ll need to follow a structured training procedure. Here’s a quick overview of the training hyperparameters that you’ll use:

learning_rate: 5e-5

train_batch_size: 64

gradient_accumulation_steps: 2

total_train_batch_size: 128

optimizer: AdamW

lr_scheduler_type: linear

num_epochs: 20

Hyperparameters Explained

Think of training hyperparameters as the ingredients in a recipe. Just as the right balance of flour, sugar, and eggs leads to a delicious cake, the right setting of hyperparameters will ensure that your model learns efficiently. Here’s a breakdown:

- Learning Rate: This sets the size of each weight update, like a speed limit for your model. A higher rate means faster learning but risks overshooting a good solution; 5e-5 is the value used here.

- Train Batch Size: The number of examples (here 64) the model processes in one forward and backward pass, similar to how one might learn in small groups.

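- Gradient Accumulation Steps: The number of batches whose gradients are summed before the weights are updated. With a batch size of 64 and 2 accumulation steps, each update effectively uses 64 × 2 = 128 examples (the total_train_batch_size, assuming a single device) without needing the memory for one batch of 128.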

- Optimizer: AdamW is a variant of the Adam optimizer with decoupled weight decay. It decides how each gradient is turned into a parameter update; think of it as a guide on how best to move through the vast world of knowledge.

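- Learning Rate Scheduler Type: A linear scheduler changes the learning rate over time. After any warmup phase, it lowers the rate in a straight line from its starting value toward zero by the end of training, much like a good coach easing off the intensity as the season closes.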

- Num Epochs: This is the number of times the entire dataset is passed through the model (here 20), a bit like reading the same book multiple times to better understand it.
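Two of these settings are plain arithmetic, so a small sketch may help. The helpers below compute the effective batch size and the learning rate a linear schedule would produce at a given step. The function names and the `total_steps` value of 1000 are illustrative assumptions (the real step count depends on the dataset size, which is not given here), and the run may or may not have used warmup.

```python
def effective_batch_size(per_step_batch, accumulation_steps):
    """Examples contributing to one weight update (single device assumed)."""
    return per_step_batch * accumulation_steps


def linear_lr(step, total_steps, peak_lr=5e-5, warmup_steps=0):
    """Learning rate at `step` under linear warmup followed by linear decay."""
    if step < warmup_steps:
        # Ramp up from 0 to peak_lr over the warmup phase.
        return peak_lr * step / max(1, warmup_steps)
    # Decay in a straight line from peak_lr down to 0 at total_steps.
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / max(1, total_steps - warmup_steps)


print(effective_batch_size(64, 2))

# Illustrative only: 1000 total steps is a made-up number.
for step in (0, 250, 500, 750, 1000):
    print(step, linear_lr(step, total_steps=1000))
```

The printed learning rates fall steadily from the starting value to zero, which is the behavior the linear scheduler setting refers to.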

Required Framework Versions

To ensure a smooth training process, have the following versions of your frameworks ready:

- Transformers: 4.23.1

- PyTorch: 1.10.1+cu111
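As a rough sketch, you could check the installed versions before training and collect the hyperparameters listed earlier in one place. The keyword names match `transformers.TrainingArguments`, but several details are assumptions: the `output_dir` path is hypothetical, `per_device_train_batch_size=64` assumes a single GPU, and `optim="adamw_torch"` is just one of the AdamW implementations the library offers, not necessarily the one used originally.

```python
from importlib import metadata

EXPECTED_VERSIONS = {"transformers": "4.23.1", "torch": "1.10.1+cu111"}


def find_version_mismatches(expected):
    """Return {package: (expected, installed_or_None)} for each mismatch."""
    mismatches = {}
    for package, wanted in expected.items():
        try:
            installed = metadata.version(package)
        except metadata.PackageNotFoundError:
            installed = None  # package is not installed at all
        if installed != wanted:
            mismatches[package] = (wanted, installed)
    return mismatches


# Hyperparameters from this article, keyed by TrainingArguments names.
TRAINING_KWARGS = {
    "output_dir": "gpt2-medium-multiwoz21",  # hypothetical path
    "learning_rate": 5e-5,
    "per_device_train_batch_size": 64,  # assumes a single device
    "gradient_accumulation_steps": 2,
    "optim": "adamw_torch",  # assumption: one available AdamW variant
    "lr_scheduler_type": "linear",
    "num_train_epochs": 20,
}


def build_training_arguments():
    """Create TrainingArguments; imported lazily so the check runs without it."""
    from transformers import TrainingArguments
    return TrainingArguments(**TRAINING_KWARGS)


print(find_version_mismatches(EXPECTED_VERSIONS))
```

An empty dictionary means both packages match the versions above. Other versions may work as well, but results could differ slightly.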

Troubleshooting Common Issues

If you encounter any issues during the fine-tuning process, here are some troubleshooting ideas:

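- Training Crashes: If training stops with an out-of-memory error, the batch does not fit on your GPU. Reduce the batch size, and raise the gradient accumulation steps by the same factor if you want to keep the total batch size at 128.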

- Model Not Converging: Double-check your learning rate. A value too high or too low can prevent proper learning.

- Training Too Slow: Consider optimizing your data pipeline (for example, by tokenizing the dataset ahead of time) or, if GPU memory allows, using a larger batch size with fewer gradient accumulation steps.
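For the out-of-memory case, one possible approach is to shrink the per-step batch while increasing gradient accumulation so the total batch size stays at 128. The helper below is a hypothetical sketch of that trade-off; `max_batch_that_fits` is a number you would find by trial on your own hardware.

```python
def rebalance_for_memory(target_total=128, max_batch_that_fits=16):
    """Return (batch_size, accumulation_steps) with batch * steps == target_total.

    Picks the largest batch size that fits in memory and divides the target.
    """
    if max_batch_that_fits < 1 or target_total < 1:
        raise ValueError("both arguments must be positive integers")
    for batch in range(min(max_batch_that_fits, target_total), 0, -1):
        if target_total % batch == 0:
            return batch, target_total // batch


# The article's setting fits as-is when 64 examples fit in memory.
print(rebalance_for_memory(128, 64))
# With room for only 16 examples, accumulate over more steps instead.
print(rebalance_for_memory(128, 16))
```

Keeping the total batch size fixed means the learning rate and schedule should not need retuning, although each weight update will take longer.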


Wrap Up

Fine-tuning GPT-2 Medium on the MultiWOZ21 dataset adapts a general-purpose language model to task-oriented conversation. By carefully choosing hyperparameters and using the right framework versions, you’re on your way to creating a model that generates more natural, on-topic dialogue.
