Are you ready to take your machine learning skills to the next level? In this article, we will delve into fine-tuning the unsloth/Mistral-Nemo-Instruct-2407 model. With an emphasis on user-friendliness, we’ll walk you through the critical steps: attaching LoRA adapters, configuring training, and troubleshooting common issues.

Understanding the Model Architecture

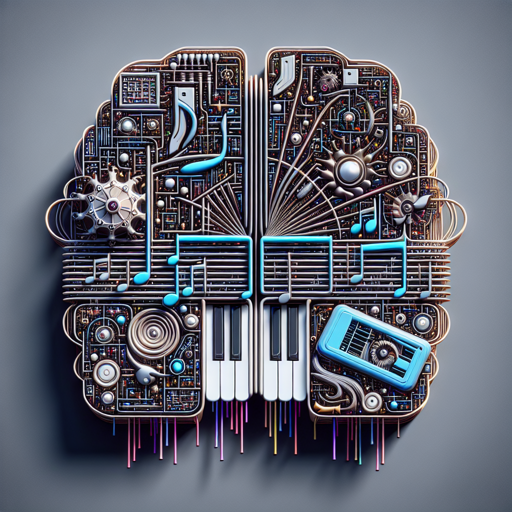

Fine-tuning a model can feel like tuning a symphony orchestra: each instrument needs to be aligned for the whole composition to flow harmoniously. unsloth/Mistral-Nemo-Instruct-2407 is like a well-crafted musical score built on a robust foundation, and this fine-tune brings together the following components:

- Pre-trained Model: Training starts from the pre-trained Mistral-Nemo-Instruct-2407 weights rather than from scratch, so the fine-tuned model inherits the base model's ability to generate human-like text.

- Curated Dataset: The fine-tuning used a dataset of 10,801 human-generated conversations, akin to a library of diverse stories that exposes the model to many different perspectives.

- Efficient Training: The Unsloth library speeds up fine-tuning and reduces its memory footprint, so training finishes faster on the same hardware.
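Before training, it can help to sanity-check the conversations and hold a few back for evaluation. The sketch below is a minimal, stdlib-only illustration, assuming each conversation is a list of `{"role": ..., "content": ...}` messages and that the chat template expects strictly alternating user/assistant turns, as Mistral-style templates typically do. The helpers `is_well_formed` and `split_dataset` and the 5% evaluation fraction are hypothetical; the seed 3407 simply matches the `random_state` used for the LoRA setup.

```python
import random
from typing import Dict, List, Tuple

Message = Dict[str, str]
Conversation = List[Message]


def is_well_formed(conv: Conversation) -> bool:
    """True if turns alternate user/assistant, starting with user.

    An optional leading system message is allowed. We also require the
    conversation to end on an assistant reply (an assumption for SFT data).
    """
    turns = conv[1:] if conv and conv[0].get("role") == "system" else conv
    if not turns or len(turns) % 2 != 0:
        return False
    for i, msg in enumerate(turns):
        expected = "user" if i % 2 == 0 else "assistant"
        if msg.get("role") != expected or not msg.get("content", "").strip():
            return False
    return True


def split_dataset(
    convs: List[Conversation], eval_fraction: float = 0.05, seed: int = 3407
) -> Tuple[List[Conversation], List[Conversation]]:
    """Drop malformed conversations, then make a reproducible train/eval split."""
    clean = [c for c in convs if is_well_formed(c)]
    rng = random.Random(seed)  # seeded so the split is identical on every run
    rng.shuffle(clean)
    n_eval = max(1, int(len(clean) * eval_fraction)) if clean else 0
    return clean[n_eval:], clean[:n_eval]


# Toy usage: 20 valid conversations plus one that starts with the assistant.
good = [{"role": "user", "content": "Hi"}, {"role": "assistant", "content": "Hello!"}]
bad = [{"role": "assistant", "content": "Hello!"}]
train, evals = split_dataset([good] * 20 + [bad])
print(len(train), len(evals))  # 19 1
```

A held-out split like this is also what you would pass as an evaluation dataset if you want metrics computed during training.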

Fine-Tuning Process

The first step attaches LoRA adapters to the model:

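```python
from unsloth import FastLanguageModel

# Load the base model. max_seq_length and load_in_4bit are assumed values;
# the original write-up does not state them.
model, tokenizer = FastLanguageModel.from_pretrained(
    model_name = "unsloth/Mistral-Nemo-Instruct-2407",
    max_seq_length = 2048,
    load_in_4bit = True,
)

# Attach LoRA adapters; only the adapter weights are trained.
model = FastLanguageModel.get_peft_model(
    model,
    r = 256,                      # rank of the low-rank update matrices
    target_modules = ["q_proj", "k_proj", "v_proj", "o_proj",  # attention projections
                      "gate_proj", "up_proj", "down_proj"],   # MLP projections
    lora_alpha = 32,              # scaling numerator for the adapter update
    lora_dropout = 0,             # no dropout on the adapters
    bias = "none",                # bias terms are not trained
    use_gradient_checkpointing = "unsloth",  # Unsloth's memory-saving checkpointing
    random_state = 3407,          # seed for reproducible adapter initialization
    use_rslora = True,            # rank-stabilized scaling: alpha / sqrt(r)
)
```

Imagine cooking a gourmet meal: you start by selecting quality ingredients (your model) and decide how to season them (the parameters). The code above attaches LoRA adapters to the model, so training updates a set of new low-rank weights while the original weights stay frozen. Two groups of settings matter most: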

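- Target Modules: The LoRA adapters are attached to the attention projections (q_proj, k_proj, v_proj, o_proj) and the MLP projections (gate_proj, up_proj, down_proj); all other weights stay frozen.

- LoRA Parameters: r = 256 sets the rank of the update matrices (higher rank means more capacity and more memory), lora_alpha = 32 scales the size of the update, and use_rslora = True changes that scaling from alpha/r to alpha/sqrt(r). lora_dropout = 0 disables dropout on the adapters, and random_state fixes the seed so runs are reproducible.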
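To see what these numbers imply, the sketch below computes the LoRA scaling factor and a rough count of trainable adapter parameters. It assumes the Mistral-Nemo dimensions written into the code (hidden size 5120, 40 layers, 32 query heads and 8 key/value heads of size 128, MLP width 14336), taken from the model's published configuration; check them against the model's `config.json` before relying on the result. `lora_scale` and `lora_param_count` are illustrative helpers, not Unsloth or PEFT functions.

```python
import math
from typing import Dict, Tuple

# Assumed Mistral-Nemo-Instruct-2407 dimensions (verify against config.json).
HIDDEN = 5120
NUM_LAYERS = 40
HEAD_DIM = 128
NUM_HEADS = 32
NUM_KV_HEADS = 8
INTERMEDIATE = 14336

# (in_features, out_features) of each targeted projection in one decoder layer.
TARGET_SHAPES: Dict[str, Tuple[int, int]] = {
    "q_proj": (HIDDEN, NUM_HEADS * HEAD_DIM),
    "k_proj": (HIDDEN, NUM_KV_HEADS * HEAD_DIM),
    "v_proj": (HIDDEN, NUM_KV_HEADS * HEAD_DIM),
    "o_proj": (NUM_HEADS * HEAD_DIM, HIDDEN),
    "gate_proj": (HIDDEN, INTERMEDIATE),
    "up_proj": (HIDDEN, INTERMEDIATE),
    "down_proj": (INTERMEDIATE, HIDDEN),
}


def lora_scale(r: int, alpha: float, use_rslora: bool) -> float:
    """Multiplier applied to the low-rank update B @ A."""
    return alpha / math.sqrt(r) if use_rslora else alpha / r


def lora_param_count(r: int) -> int:
    """Adapter weights: each targeted layer adds A (r x in) and B (out x r)."""
    per_layer = sum(r * (fan_in + fan_out) for fan_in, fan_out in TARGET_SHAPES.values())
    return per_layer * NUM_LAYERS


print(lora_scale(256, 32, use_rslora=True))   # 2.0
print(lora_scale(256, 32, use_rslora=False))  # 0.125
print(f"{lora_param_count(256):,}")           # 912,261,120
```

Under these assumed dimensions, rsLoRA keeps the update scale at 2.0 instead of 0.125, and r = 256 adds roughly 0.9 billion trainable weights, which is why the rank is one of the first knobs to lower when memory is tight.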

Training the Model

The training phase is where your ingredients come together, cooking to perfection! Here’s a breakdown of the training settings:

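```python
from transformers import TrainingArguments
from trl import SFTTrainer

# train_ds and compute_metrics are assumed to be defined earlier (not shown
# in the original write-up). compute_metrics is only called during evaluation,
# which also needs an eval_dataset and an evaluation strategy.
# Newer TRL releases accept processing_class= in place of tokenizer=.
trainer = SFTTrainer(
    model = model,
    tokenizer = tokenizer,
    train_dataset = train_ds,
    compute_metrics = compute_metrics,
    args = TrainingArguments(
        per_device_train_batch_size = 2,  # examples per GPU per step
        num_train_epochs = 2,             # full passes over train_ds
        learning_rate = 8e-5,             # peak learning rate
        optim = "adamw_8bit",             # 8-bit AdamW (requires bitsandbytes)
        output_dir = "outputs",           # where checkpoints are written
    ),
)
trainer.train()
```

Just like a chef must monitor the cooking time, you’ll need to keep an eye on these training parameters to avoid overcooking your model. Key parameters include: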

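- Learning Rate: This sets the size of each optimizer update (8e-5 here). Too high and training can become unstable or wash out what the base model already knew, like burning the dish; too low and the model barely changes, like undercooking it.

- Epochs: num_train_epochs = 2 means the model makes two full passes over the training data. More passes let it absorb the dataset's flavors more fully but raise the risk of overfitting.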
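The sketch below estimates how many optimizer steps the configuration above runs and how the learning rate changes over them. It assumes all 10,801 conversations are in `train_ds`, a single GPU, no gradient accumulation, and the `transformers` defaults of a linear decay schedule with no warmup; `total_optimizer_steps` and `linear_lr` are illustrative helpers, not library functions.

```python
import math

# Settings from the TrainingArguments above, plus stated assumptions.
NUM_EXAMPLES = 10_801   # assumes every conversation lands in train_ds
BATCH_SIZE = 2          # per_device_train_batch_size
NUM_EPOCHS = 2          # num_train_epochs
PEAK_LR = 8e-5          # learning_rate
GRAD_ACCUM = 1          # assumed: no gradient accumulation
NUM_DEVICES = 1         # assumed: single GPU


def total_optimizer_steps(n: int, batch: int, accum: int, devices: int, epochs: int) -> int:
    """Optimizer updates over the whole run (a final partial batch still counts)."""
    batches_per_epoch = math.ceil(n / (batch * devices))
    updates_per_epoch = max(1, batches_per_epoch // accum)
    return updates_per_epoch * epochs


def linear_lr(step: int, total_steps: int, peak: float = PEAK_LR, warmup: int = 0) -> float:
    """Linear warmup to `peak`, then linear decay to zero at `total_steps`."""
    if step < warmup:
        return peak * step / max(1, warmup)
    return peak * max(0.0, (total_steps - step) / max(1, total_steps - warmup))


steps = total_optimizer_steps(NUM_EXAMPLES, BATCH_SIZE, GRAD_ACCUM, NUM_DEVICES, NUM_EPOCHS)
print(steps)  # 10802
for s in (0, steps // 2, steps):
    print(s, linear_lr(s, steps))
```

Under these assumptions, the learning rate starts at 8e-5, is half that at the midpoint, and reaches zero on the final step.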

Troubleshooting Common Issues

As with any recipe, things might not always go as planned. Here are some troubleshooting steps to navigate potential hurdles:

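- Insufficient VRAM: If you run out of GPU memory, reduce per_device_train_batch_size (raising gradient_accumulation_steps to keep the effective batch size), lower the LoRA rank r, and make sure gradient checkpointing stays enabled via use_gradient_checkpointing.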

- Underperformance: Review your dataset quality. The training data should be diverse and relevant to the task at hand.

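- Debugging: Add logging statements to your code, and set logging_steps in TrainingArguments to report the training loss at regular intervals, so you can pinpoint bottlenecks or a loss that stops improving.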
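For the VRAM tip, the usual trade is to shrink the per-device batch and raise `gradient_accumulation_steps` (an existing `TrainingArguments` option) so the effective batch size stays the same. The sketch below computes that value and adds a small timing context manager built on Python's `logging` module for the debugging tip; `accumulation_for` and `timed` are hypothetical helpers written for this illustration.

```python
import logging
import time
from contextlib import contextmanager

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("finetune")


def accumulation_for(target_effective_batch: int, per_device_batch: int, num_devices: int = 1) -> int:
    """Accumulation steps so that per_device_batch * steps * num_devices
    equals the target effective batch size."""
    per_step = per_device_batch * num_devices
    if target_effective_batch % per_step != 0:
        raise ValueError("target batch must be a multiple of per_device_batch * num_devices")
    return target_effective_batch // per_step


@contextmanager
def timed(stage: str):
    """Log how long a stage takes, e.g. tokenization versus training."""
    start = time.perf_counter()
    try:
        yield
    finally:
        log.info("%s took %.2fs", stage, time.perf_counter() - start)


# Dropping from batch size 2 to 1 keeps an effective batch of 2 with 2 accumulation steps.
print(accumulation_for(target_effective_batch=2, per_device_batch=1))  # 2

with timed("toy stage"):
    sum(range(1_000_000))
```

In a real run, the returned value would go into `gradient_accumulation_steps`, and `timed` could wrap dataset preparation or `trainer.train()` to see where time goes.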


Conclusion

Fine-tuning unsloth/Mistral-Nemo-Instruct-2407 can meaningfully strengthen your AI development skills. The knowledge gained through this process is not just about coding; it’s about understanding the settings that shape a model's behavior and how to adjust them effectively.

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.