Welcome to the world of Apache TVM, a powerful compiler stack designed to bridge the gap between performance-oriented hardware and productivity-focused deep learning frameworks. In this guide, we’ll walk through the steps to get started with TVM, explore its capabilities, and discuss troubleshooting strategies to help you along the way.

What is Apache TVM?

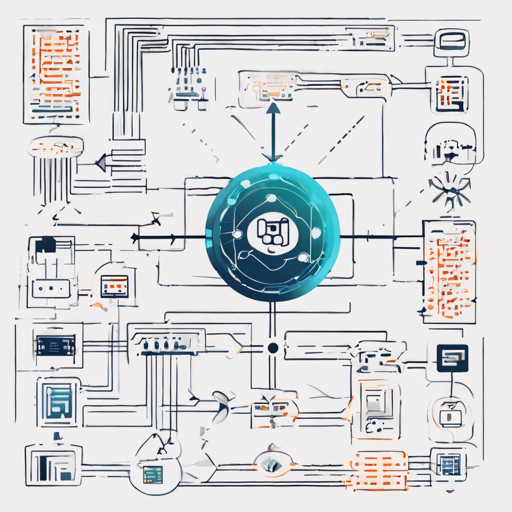

Apache TVM is an open-source machine learning compiler framework for CPUs, GPUs, and machine learning accelerators. It takes a high-level model description from a deep learning framework and translates it into optimized machine code for a variety of hardware backends. Think of it as a translator that makes sure the model you designed runs efficiently on the hardware you actually have.

Getting Started with TVM

To embark on your journey with Apache TVM, follow these steps:

- Installation: Start at the TVM documentation site, where you’ll find comprehensive installation instructions.

- Tutorials: A great way to familiarize yourself with TVM is to follow the Getting Started with TVM tutorial. It will introduce you to the essentials of working with the compiler.
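Once installation is done, a quick sanity check can confirm that Python actually sees the package. The snippet below is a minimal sketch, not an official TVM tool: the helper name `check_tvm_install` is made up for illustration, and it assumes only that the package is importable as `tvm` and exposes a `__version__` attribute.

```python
import importlib
import importlib.util


def check_tvm_install():
    """Return the installed TVM version string, or None if TVM is not found.

    Hypothetical helper for illustration; not part of TVM itself.
    """
    # find_spec locates the package without importing it, so a missing
    # install is reported cleanly instead of raising ImportError.
    if importlib.util.find_spec("tvm") is None:
        return None
    # If the package is present but broken (for example a missing shared
    # library), this import raises and the traceback points at the cause.
    tvm = importlib.import_module("tvm")
    # Fall back to "unknown" in case the build does not expose a version.
    return getattr(tvm, "__version__", "unknown")


version = check_tvm_install()
if version is None:
    print("TVM is not installed in this Python environment.")
else:
    print(f"TVM {version} is installed.")
```

If the check reports that TVM is missing even though you installed it, the usual suspect is that the script runs under a different Python environment than the one you installed into.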

Understanding the Code: The Art of Compilation

TVM operates like a master chef in a bustling kitchen. Imagine handing the chef a recipe for a complex dish (your deep learning model) that has to be prepared with the equipment on hand (the hardware). The chef takes your recipe, optimizes the preparation for that kitchen, and delivers a meal ready for the customers (the execution environment). TVM does the same with code: it converts your deep learning model into optimized code that runs efficiently on the chosen hardware backend.
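To make the "take the recipe, optimize it, deliver the result" idea concrete, here is a toy sketch in plain Python. It does not use TVM and is not how TVM is implemented. It only illustrates one classic compiler optimization, constant folding, on a tiny expression tree, followed by a trivial code generation step. All class and function names (`Const`, `Var`, `Add`, `Mul`, `fold_constants`, `emit`) are invented for this example.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Const:
    value: float


@dataclass(frozen=True)
class Var:
    name: str


@dataclass(frozen=True)
class Add:
    left: "Expr"
    right: "Expr"


@dataclass(frozen=True)
class Mul:
    left: "Expr"
    right: "Expr"


Expr = Union[Const, Var, Add, Mul]


def fold_constants(expr: Expr) -> Expr:
    """Optimization pass: evaluate sub-expressions whose inputs are all constants."""
    if isinstance(expr, (Const, Var)):
        return expr
    left = fold_constants(expr.left)
    right = fold_constants(expr.right)
    if isinstance(left, Const) and isinstance(right, Const):
        if isinstance(expr, Add):
            return Const(left.value + right.value)
        return Const(left.value * right.value)
    # At least one side depends on a variable, so rebuild the same node type.
    return type(expr)(left, right)


def emit(expr: Expr) -> str:
    """Code generation: turn the tree into an expression string."""
    if isinstance(expr, Const):
        return repr(expr.value)
    if isinstance(expr, Var):
        return expr.name
    op = "+" if isinstance(expr, Add) else "*"
    return f"({emit(expr.left)} {op} {emit(expr.right)})"


# x * (2 + 3): the addition can be computed at compile time.
program = Mul(Var("x"), Add(Const(2), Const(3)))
optimized = fold_constants(program)
print(emit(program))    # (x * (2 + 3))
print(emit(optimized))  # (x * 5)
```

A real deep learning compiler applies many such passes to whole computation graphs and then generates code for a specific hardware target, but the shape of the process is the same: represent the program, rewrite it into a cheaper equivalent, then emit code.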

Troubleshooting Tips

Even the best chefs encounter issues in the kitchen. Here are some troubleshooting ideas you might find helpful when using Apache TVM:

- Installation Errors: Ensure that you have followed the installation instructions correctly. Check for any missing dependencies that could hinder setup.

- Performance Issues: If your models aren’t running as fast as expected, revisit your compilation settings, such as the hardware target and the optimization level. Measure before and after each change so you can tell which setting actually helped.

- Documentation Confusion: If any part of the documentation is unclear, ask the TVM community for support.

- Building Problems: If you encounter build issues, make sure you are using the correct versions of dependencies and have followed the build instructions accurately.
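When chasing a performance issue, it helps to have a repeatable measurement before changing any settings. The following is a minimal timing sketch in plain Python, not a TVM utility: `benchmark` and `stand_in_model` are hypothetical names, and the workload is a placeholder you would swap for a call to your own compiled model.

```python
import statistics
import time


def benchmark(fn, warmup=3, repeats=10):
    """Return the median wall-clock time of fn() in milliseconds."""
    # Warm-up runs absorb one-time costs such as lazy initialization and caching.
    for _ in range(warmup):
        fn()
    timings_ms = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        timings_ms.append((time.perf_counter() - start) * 1000.0)
    # The median is less sensitive to outliers than the mean.
    return statistics.median(timings_ms)


def stand_in_model():
    # Hypothetical placeholder workload; replace with a call to your compiled model.
    return sum(i * i for i in range(10_000))


print(f"median latency: {benchmark(stand_in_model):.3f} ms")
```

Run it once with your current settings and once after each change. If the model runs on a GPU or another accelerator, make sure the function you time waits for the device to finish, otherwise the numbers only reflect how long it took to launch the work.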

Acknowledgements

TVM stands on the shoulders of giants. Many invaluable lessons were learned from projects like:

- Halide – Parts of TVM’s TIR and arithmetic simplification modules were adapted from Halide.

- Loopy – TVM learned from its use of integer set analysis and its loop transformation primitives.

- Theano – This project inspired the design of the symbolic scan operator for recurrence.

Conclusion

Now you’re ready to dive into the expansive world of Apache TVM! With the right resources and a bit of practice, you’ll master this deep learning compiler stack in no time!