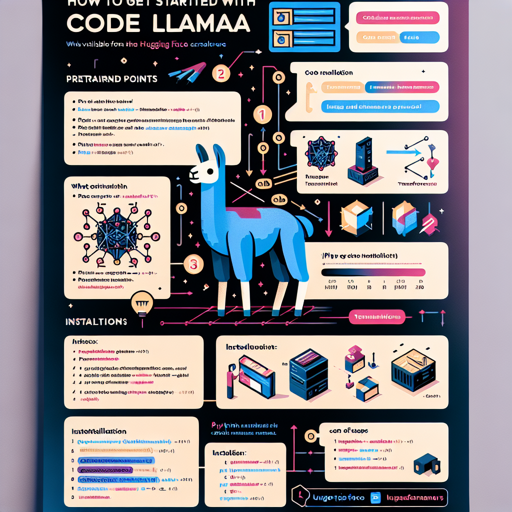

Code Llama is a collection of pretrained and fine-tuned generative text models specialized for code synthesis and understanding. This article walks through using Code Llama, focusing on the 13B base model in the Hugging Face Transformers format.

What You Need

- Python installed on your machine.

- Access to the internet to download the model.

- The transformers and accelerate libraries from Hugging Face, plus PyTorch (the example code imports torch).

Installation Steps

To make Code Llama work for you, follow these simple instructions:

- Open your terminal or command prompt.

- Run the following command to install the necessary libraries:

      pip install transformers accelerate torch

Using the Code Llama Model
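Before loading the model, it can help to confirm that the libraries are actually installed in the active Python environment. The sketch below uses only the standard library; the helper name `installed_versions` is made up for this article and is not part of any Hugging Face package.

```python
from importlib import metadata


def installed_versions(packages):
    """Map each package name to its installed version, or None if it is missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    # The three packages the example in this article imports or relies on.
    report = installed_versions(["transformers", "accelerate", "torch"])
    for name, version in report.items():
        print(f"{name}: {version or 'NOT INSTALLED'}")
```

If any entry is reported as missing, rerun the pip command in the same environment you use to run the script.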

Once the libraries are installed, you can start generating code. The following snippet loads the model and asks it to complete a Python function signature:

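    from transformers import AutoTokenizer
    import transformers
    import torch

    model = "codellama/CodeLlama-13b-hf"

    # Load the tokenizer; it is used below to look up the end-of-sequence token id.
    tokenizer = AutoTokenizer.from_pretrained(model)

    # Build a text-generation pipeline with float16 weights.
    # device_map="auto" lets accelerate place the model on the available devices.
    pipeline = transformers.pipeline(
        "text-generation",
        model=model,
        torch_dtype=torch.float16,
        device_map="auto",
    )

    # Ask the model to complete the function signature, using sampling.
    sequences = pipeline(
        "import socket\ndef ping_exponential_backoff(host: str):",
        do_sample=True,
        top_k=10,
        temperature=0.1,
        top_p=0.95,
        num_return_sequences=1,
        eos_token_id=tokenizer.eos_token_id,
        max_length=200,
    )

    # Print each returned sequence (one, since num_return_sequences=1).
    for seq in sequences:
        print(f"Result: {seq['generated_text']}")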
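The snippet loads the weights in `torch.float16`, which matters for memory. As a rough back-of-the-envelope sketch, the arithmetic below estimates the space the weights alone occupy. It assumes that "13B" means roughly 13 billion parameters, and it ignores activations, the key/value cache, and framework overhead, so real usage will be higher. The function name is hypothetical.

```python
def weight_memory_gb(num_parameters: float, bytes_per_parameter: int) -> float:
    """Approximate memory needed to hold the model weights, in GB (10**9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9


# Assumption: the "13B" in the model name means roughly 13 billion parameters.
PARAMS_13B = 13e9

fp16_gb = weight_memory_gb(PARAMS_13B, 2)  # torch.float16 stores 2 bytes per value
fp32_gb = weight_memory_gb(PARAMS_13B, 4)  # torch.float32 stores 4 bytes per value

print(f"float16 weights: ~{fp16_gb:.0f} GB")
print(f"float32 weights: ~{fp32_gb:.0f} GB")
```

Under these assumptions, half precision needs about half the memory of full precision, which is why the example passes `torch_dtype=torch.float16`.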

Understanding the Code

Let’s break down the code with an analogy. Imagine the Code Llama model as a skilled chef in a massive kitchen. The chef has access to a plethora of ingredients (data) and tools (code libraries) to whip up delightful dishes (code solutions). Here’s how everything works together:

- Ingredients Preparation: `AutoTokenizer.from_pretrained(model)` loads the tokenizer, which prepares our ingredients by converting the input text into tokens, the format the chef understands.

- Setting Up the Kitchen: `transformers.pipeline(...)` is akin to setting up our cooking station. It gathers the chef (the model) and the tools (the `float16` weights and the device placement), ready to start creating.

- Cooking Time: The `pipeline(...)` call, where we pass our input (`import socket\ndef ping_exponential_backoff(host: str):`), is like handing the chef a recipe to finish. Because `num_return_sequences=1`, we get back a single dish (one output sequence). The `do_sample`, `top_k`, `top_p`, and `temperature` settings control how much the chef improvises, and `max_length=200` caps the total length in tokens, prompt included.
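To make the sampling settings less abstract, here is a simplified pure-Python sketch of how temperature, top-k, and top-p narrow down the candidates for the next token. It is an illustration under stated assumptions, not the Transformers implementation: the function name and the four logits are made up, and a real model scores tens of thousands of tokens at every step.

```python
import math


def filter_distribution(logits, temperature=1.0, top_k=0, top_p=1.0):
    """Return {token_index: probability} after temperature, top-k, and top-p filtering."""
    # Temperature: divide logits before softmax; values below 1 sharpen the distribution.
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]

    # Rank token indices from most to least likely.
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)

    # Top-k: keep only the k most likely tokens (0 means "no limit" in this sketch).
    if top_k > 0:
        ranked = ranked[:top_k]
    mass = sum(probs[i] for i in ranked)

    # Top-p: keep the smallest prefix whose renormalized cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for i in ranked:
        kept.append(i)
        cumulative += probs[i] / mass
        if cumulative >= top_p:
            break

    # Renormalize so the surviving probabilities sum to 1.
    kept_total = sum(probs[i] for i in kept)
    return {i: probs[i] / kept_total for i in kept}


logits = [2.0, 1.0, 0.5, 0.1]  # made-up scores for four candidate tokens
print(filter_distribution(logits, temperature=1.0, top_k=3, top_p=0.95))
print(filter_distribution(logits, temperature=0.1, top_k=10, top_p=0.95))
```

With these made-up logits, the second call (the article's settings) keeps only the top-scoring token. A temperature as low as 0.1 pushes nearly all probability onto the best candidate, so sampling behaves almost like always picking the most likely token.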

Troubleshooting

If you encounter any issues during your journey with Code Llama, consider the following troubleshooting ideas:

- Ensure that Python and pip are correctly installed and updated.

- Check that the transformers library is installed without any errors.

- Confirm you are using the correct model identifier and path.

- If generation is slow or you run out of memory, check that PyTorch can see your GPU and that it has enough free memory for the 13B model in float16.
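For the GPU-related item, a short check along these lines can show what PyTorch sees. This is a minimal sketch that looks only at the first GPU and degrades gracefully when PyTorch is missing; the function name `describe_device` is made up for this article.

```python
def describe_device() -> str:
    """Summarize whether PyTorch is installed and can see a CUDA-capable GPU."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"

    if not torch.cuda.is_available():
        return "PyTorch is installed, but no CUDA-capable GPU is visible"

    # Inspect the first visible GPU only; multi-GPU machines have more entries.
    props = torch.cuda.get_device_properties(0)
    total_gb = props.total_memory / 1e9
    return f"GPU 0: {props.name}, {total_gb:.1f} GB total memory"


print(describe_device())
```

If no GPU is reported, the model may still load on the CPU, but generation with a 13B model will be very slow.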

For more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.

Final Thoughts

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.