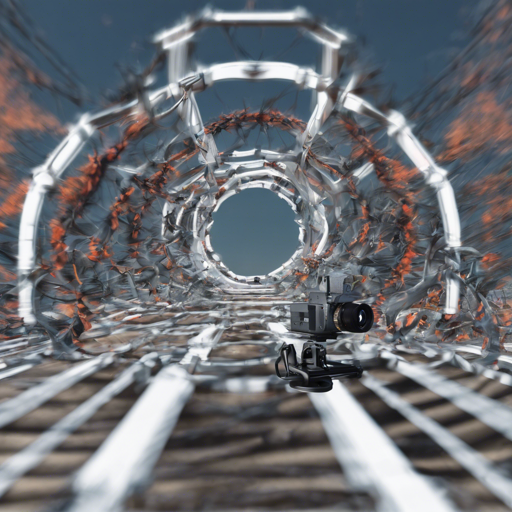

If you’re venturing into Generative Camera Dolly (GCD), a method for extreme monocular dynamic novel view synthesis, you’re in for a treat! This guide walks you through setting up the environment, using pretrained models, running inference, training, and evaluation, and closes with troubleshooting tips to keep things running smoothly.

Setup

Setting up GCD involves creating a dedicated conda environment and installing the required packages. Think of it like preparing your workspace before painting a masterpiece: you need a clean canvas and the right tools. Here’s how to do it:

```bash
conda create -n gcd python=3.10
conda activate gcd
conda install pytorch torchvision torchaudio pytorch-cuda=12.1 -c pytorch -c nvidia
pip install git+https://github.com/OpenAI/CLIP.git
pip install git+https://github.com/Stability-AI/datapipelines.git
pip install -r requirements.txt
```

For the best results, use PyTorch 2.0.1 or later.
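Since the guide recommends PyTorch 2.0.1 or later, a quick check inside the activated `gcd` environment can catch a mismatched install early. The script below is a minimal sketch, not part of the GCD repository: `parse_version` and `meets_minimum` are hypothetical helpers, and the comparison only looks at the numeric major.minor.patch part of `torch.__version__`.

```python
"""Hypothetical environment check for GCD (not part of the GCD repository)."""
import re


def parse_version(version: str) -> tuple:
    """Turn a version string like '2.0.1+cu121' into (2, 0, 1).

    The local build tag after '+' and any pre-release suffix are ignored.
    """
    core = version.split("+", 1)[0]
    parts = []
    for piece in core.split(".")[:3]:
        match = re.match(r"\d+", piece)
        parts.append(int(match.group()) if match else 0)
    while len(parts) < 3:  # pad '2.0' to (2, 0, 0)
        parts.append(0)
    return tuple(parts)


def meets_minimum(version: str, minimum: str = "2.0.1") -> bool:
    """Return True if `version` is at least `minimum`."""
    return parse_version(version) >= parse_version(minimum)


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed in this environment.")
    else:
        ok = meets_minimum(torch.__version__)
        print(f"torch {torch.__version__}: {'OK' if ok else 'older than 2.0.1'}")
        print(f"CUDA available: {torch.cuda.is_available()}")
```

Save it under any name (for example `check_env.py`, a hypothetical filename) and run it with `python check_env.py` after `conda activate gcd`.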

Using Pretrained Models

To skip training, download the pretrained checkpoints for the dataset you need, such as **Kubric-4D** or **ParallelDomain-4D**. These are your shortcuts to good results, akin to having a well-crafted toolkit ready for your DIY project.

Here are some key checkpoints:

Inference with Gradio

Running inference on custom videos helps visualize your model’s performance. It’s like taking a test shot before the final production to see how everything looks and functions together. Here is how to execute it:

```bash
cd gcd-model
CUDA_VISIBLE_DEVICES=0 python scripts/gradio_app.py --port=7880 \
    --config_path=configs/infer_kubric.yaml \
    --model_path=../pretrained/kubric_gradual_max90.ckpt \
    --output_path=../eval/gradio_output/default \
    --examples_path=../eval/gradio_examples \
    --task_desc="Arbitrary monocular dynamic view synthesis on Kubric scenes up to 90 degrees azimuth"
```

To try a different model or dataset, change `--config_path`, `--model_path`, and `--task_desc` accordingly.
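If you switch between checkpoints often, generating the inference command can be less error-prone than editing it by hand. The sketch below is a hypothetical wrapper (`build_gradio_command` and `launch` are not part of GCD). It uses only the flags from the inference command above, passes `--task_desc` as a single argument so no shell quoting is needed, and assumes `cwd` points at the `gcd-model` directory so the relative paths resolve as in the original command.

```python
"""Hypothetical launcher for scripts/gradio_app.py (not part of GCD)."""
import os
import shlex
import subprocess


def build_gradio_command(config_path, model_path, output_path, examples_path,
                         task_desc, port=7880):
    """Return an argv list using only the flags shown in this guide."""
    return [
        "python", "scripts/gradio_app.py",
        f"--port={port}",
        f"--config_path={config_path}",
        f"--model_path={model_path}",
        f"--output_path={output_path}",
        f"--examples_path={examples_path}",
        f"--task_desc={task_desc}",  # one argv element, spaces and all
    ]


def launch(argv, gpu="0", cwd="gcd-model"):
    """Start the app, exposing only `gpu` to the child via CUDA_VISIBLE_DEVICES."""
    env = dict(os.environ, CUDA_VISIBLE_DEVICES=gpu)
    print("Running:", shlex.join(argv))
    return subprocess.run(argv, cwd=cwd, env=env, check=True)


if __name__ == "__main__":
    argv = build_gradio_command(
        config_path="configs/infer_kubric.yaml",
        model_path="../pretrained/kubric_gradual_max90.ckpt",
        output_path="../eval/gradio_output/default",
        examples_path="../eval/gradio_examples",
        task_desc="Arbitrary monocular dynamic view synthesis on Kubric scenes up to 90 degrees azimuth",
    )
    print(shlex.join(argv))  # preview only; call launch(argv) to actually run it
```

Swapping models means changing `config_path`, `model_path`, and `task_desc` together; this guide does not list the config or checkpoint names for the other pretrained models, so take those from the GCD repository.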

Training Your Own Model

Training a model is akin to crafting a fine wine; it takes time and the right ingredients. The example below trains on Kubric-4D across eight GPUs on a single node; to build on your own dataset, adapt the config file and dataset path accordingly.
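The training command below ends with arguments such as `model.base_learning_rate=2e-5` and `data.params.batch_size=4`. These appear to be dotted overrides applied on top of the YAML file passed via `--base`, in the style of OmegaConf's dot-list syntax; that is an assumption about how `main.py` consumes them. The plain-Python sketch below (a hypothetical `apply_overrides`, not GCD code) illustrates the idea: each dotted key walks into the nested config and replaces a single value.

```python
"""Hypothetical illustration of dotted key=value config overrides (not GCD code)."""
import ast
import copy


def parse_value(text):
    """Interpret '2e-5' as a float and '4' as an int; keep anything else as a string."""
    try:
        return ast.literal_eval(text)
    except (ValueError, SyntaxError, TypeError):
        return text


def apply_overrides(config, overrides):
    """Return a copy of `config` with each 'a.b.c=value' override applied."""
    result = copy.deepcopy(config)  # leave the caller's config untouched
    for item in overrides:
        key, _, raw = item.partition("=")
        *parents, leaf = key.split(".")
        node = result
        for name in parents:
            node = node.setdefault(name, {})  # create missing levels
        node[leaf] = parse_value(raw)
    return result


if __name__ == "__main__":
    # Hypothetical base values standing in for configs/train_kubric_max90.yaml.
    base = {"model": {"base_learning_rate": 1.0e-4},
            "data": {"params": {"batch_size": 1}}}
    overrides = [
        "model.base_learning_rate=2e-5",
        "data.params.dset_root=/path/to/Kubric-4D/data",
        "data.params.batch_size=4",
    ]
    print(apply_overrides(base, overrides))
```

Under the same assumption, if `batch_size` is per GPU, as is typical for multi-GPU data-parallel training, the eight GPUs listed in `CUDA_VISIBLE_DEVICES` would process 8 × 4 = 32 samples per optimizer step, absent gradient accumulation.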

```bash
cd gcd-model
CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7 python main.py \
    --base=configs/train_kubric_max90.yaml \
    --name=kb_v1 --seed=1234 --num_nodes=1 --wandb=0 \
    model.base_learning_rate=2e-5 \
    data.params.dset_root=/path/to/Kubric-4D/data \
    data.params.batch_size=4
```

Evaluation

Evaluating your model quantifies how well it performs, similar to getting feedback during a rehearsal. Adapt the following command, which tests a checkpoint from the `kb_v1` training run on two GPUs:

```bash
cd gcd-model
CUDA_VISIBLE_DEVICES=0,1 python scripts/test.py --gpus=0,1 \
    --config_path=configs/infer_kubric.yaml \
    --model_path=../logs/*_kb_v1/checkpoints/epoch=00000-step=00010000.ckpt \
    --input=../eval/list/kubric_test20.txt \
    --output=../eval/output/kubric_mytest1
```

Troubleshooting

If you encounter issues at any stage, don’t fret. Here are a few troubleshooting ideas:

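- Ensure all paths are correct. The commands run from inside `gcd-model`, so paths beginning with `../` resolve relative to that directory.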

- Check that `CUDA_VISIBLE_DEVICES` (and `--gpus`, where a command uses it) matches the GPUs actually available on your machine.

- Verify that the necessary packages are installed and compatible with your version of Python.

- When in doubt, restart your kernel or environment.
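The path and GPU checks in the list above can be partly automated. The sketch below is hypothetical (none of these helpers ship with GCD): it reports missing files, picks the highest-step checkpoint matching a glob like the `*_kb_v1` pattern from the evaluation step, relying on checkpoint names of the form `epoch=00000-step=00010000.ckpt`, and checks that `--gpus` indices exist among the devices exposed by `CUDA_VISIBLE_DEVICES`. That last check assumes `scripts/test.py` treats `--gpus` as device indices as seen inside the process; CUDA renumbers visible devices from 0, so under this assumption `CUDA_VISIBLE_DEVICES=2,3` would pair with `--gpus=0,1`.

```python
"""Hypothetical pre-flight checks for paths and GPU flags (not part of GCD)."""
import glob
import os
import re

# Matches checkpoint names such as 'epoch=00000-step=00010000.ckpt'.
STEP_RE = re.compile(r"step=(\d+)\.ckpt$")


def missing_paths(paths):
    """Return the paths (files or directories) that do not exist."""
    return [p for p in paths if not os.path.exists(p)]


def checkpoint_step(path):
    """Return the training step encoded in a checkpoint filename, or None."""
    match = STEP_RE.search(os.path.basename(path))
    return int(match.group(1)) if match else None


def latest_checkpoint(pattern):
    """Expand a glob pattern and return the matching checkpoint with the highest step."""
    candidates = [(checkpoint_step(p), p) for p in glob.glob(pattern)]
    candidates = [(step, p) for step, p in candidates if step is not None]
    return max(candidates)[1] if candidates else None


def gpu_indices_valid(visible_devices, gpus_flag):
    """Check that every index in a '--gpus=0,1'-style value refers to a visible device.

    CUDA renumbers the devices listed in CUDA_VISIBLE_DEVICES from 0,
    so only indices 0 .. N-1 are usable inside the process.
    """
    n_visible = len([d for d in visible_devices.split(",") if d.strip()])
    requested = [int(g) for g in gpus_flag.split(",") if g.strip()]
    return bool(requested) and all(0 <= g < n_visible for g in requested)


if __name__ == "__main__":
    # Run from inside gcd-model/ so the relative paths resolve.
    print("Missing:", missing_paths(["configs/infer_kubric.yaml",
                                      "../eval/list/kubric_test20.txt"]))
    print("Latest checkpoint:",
          latest_checkpoint("../logs/*_kb_v1/checkpoints/*.ckpt"))
    visible = os.environ.get("CUDA_VISIBLE_DEVICES")
    if visible is not None:
        print("--gpus=0,1 valid:", gpu_indices_valid(visible, "0,1"))
```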

For any persistent problems, make sure to open an issue on GitHub for assistance, and don’t hesitate to suggest possible fixes. For more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.

Conclusion

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.