Welcome to the fascinating world of voice gender recognition! This blog post will guide you through understanding how to build a machine learning model for identifying gender based on vocal characteristics. We’ll break down the complex concepts into user-friendly segments and provide troubleshooting tips to help you along the way.

Understanding the Concept

Imagine you’re a librarian in a vast library filled with books of various genres. Each book’s genre can be discerned from its title and a brief description. Similarly, our voice gender recognition model works by analyzing “voice samples”, the recorded speech of different speakers. Just as a librarian learns to categorize books, our model learns to classify voices as either male or female based on measurable vocal traits.

The Dataset

The first step in our journey is to gather the necessary data:

- Download the pre-processed dataset as a CSV file.

- This dataset consists of 3,168 recorded voice samples from both male and female speakers, containing various acoustic properties.
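
For the CSV route, here is a minimal sketch of reading the file and checking its shape. To keep it runnable without the download, it writes a few invented rows to a temporary file first. The `label` column name and the values are assumptions for illustration only, not real data.

```r
# Write a few invented rows to a temporary CSV so the example runs on its own.
csv_path <- tempfile(fileext = ".csv")
writeLines(c(
  "meanfun,sd,IQR,label",
  "0.11,0.06,0.09,male",
  "0.19,0.04,0.05,female",
  "0.13,0.07,0.10,male"
), csv_path)

# With the real download, csv_path would point at that file instead.
voices <- read.csv(csv_path, stringsAsFactors = TRUE)

str(voices)                 # column names and types
print(dim(voices))          # rows and columns
print(table(voices$label))  # samples per class
```

On the real file, the row count reported by `dim()` should match the 3,168 samples mentioned above.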

To load the dataset in R from the saved data file, use the following command:

load("data.bin")

Model Training

Once we’ve got our dataset, we need to train our model using machine learning algorithms. The training phase involves teaching the computer to recognize patterns within the voice samples, similar to a coach instructing athletes to improve their performance based on specific techniques.
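
To make the training step concrete, here is a minimal sketch of a train/test split followed by logistic regression in base R. It runs on synthetic data so that it is self-contained: the `meanfun` values, the class means, the 70/30 split, and the 0.5 cutoff are all assumptions for illustration, not the settings behind the results reported in this post.

```r
set.seed(42)  # reproducible synthetic data

# Synthetic stand-in for the real dataset (values invented for illustration).
n <- 200
voices <- data.frame(
  meanfun = c(rnorm(n, mean = 0.12, sd = 0.03),
              rnorm(n, mean = 0.20, sd = 0.03)),
  label = factor(rep(c("male", "female"), each = n),
                 levels = c("male", "female"))
)

# Hold out 30% of the rows for testing.
test_idx <- sample(nrow(voices), size = round(0.3 * nrow(voices)))
train <- voices[-test_idx, ]
test <- voices[test_idx, ]

# Logistic regression: with a factor response, glm() models the probability
# of the second level, which is "female" here.
fit <- glm(label ~ meanfun, data = train, family = binomial)

# Predict on the held-out rows and measure accuracy.
prob_female <- predict(fit, newdata = test, type = "response")
pred <- ifelse(prob_female > 0.5, "female", "male")
test_accuracy <- mean(pred == test$label)
print(test_accuracy)
```

Evaluating on rows the model never saw during fitting is what makes the test accuracy a fair estimate, which is why training and test figures are reported separately below.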

Accuracy Levels

The accuracy of different models reflects their effectiveness in classifying voices:

- Baseline Algorithm (always male): 50% accuracy

- Logistic Regression: 72% accuracy

- Random Forest: 100% on training data, 87% on test data

- Updated XGBoost: 100% on training data, 99% on test data

Notice how narrowing the analyzed frequency range to 0 Hz to 280 Hz significantly improves the model’s accuracy, as the updated XGBoost result suggests. Small, targeted adjustments to the input features can yield large gains.
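
The 50% baseline is easy to reproduce. The sketch below assumes the 3,168 samples are split evenly between the two classes, which is consistent with a 50% score for an always-male guess but is an assumption here. The `accuracy` helper is a hypothetical name introduced for this example.

```r
# Assumption: 3,168 samples, half male and half female.
labels <- rep(c("male", "female"), each = 3168 / 2)

# Hypothetical helper: fraction of predictions that match the true labels.
accuracy <- function(predicted, actual) mean(predicted == actual)

# The baseline ignores the audio entirely and always answers "male".
baseline_pred <- rep("male", length(labels))
baseline_accuracy <- accuracy(baseline_pred, labels)
print(baseline_accuracy)

# Confusion table: every female sample is misclassified.
print(table(predicted = baseline_pred, actual = labels))
```

Any real model should be judged against this figure: a classifier that scores close to 50% on balanced data has learned little beyond guessing.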

Acoustic Properties Analyzed

Various acoustic properties play a crucial role in the classification process. Here are some key traits:

- meanfreq: Mean frequency in kHz

- sd: Standard deviation of frequency

- IQR: Interquartile range of frequencies

- meanfun: Average fundamental frequency across the signal

- modindx: Modulation index
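
To give a feel for what the first three properties measure, here is a rough sketch that treats the amplitude spectrum of a signal as a distribution over frequency and summarizes it. The two-tone test signal, the 1 kHz sampling rate, and the variable names are assumptions for illustration. This shows the general idea only and is not necessarily how the dataset’s values were computed.

```r
# Synthetic one-second signal: equal-amplitude tones at 100 Hz and 200 Hz.
fs <- 1000                      # sampling rate in Hz (assumed)
t <- (0:(fs - 1)) / fs          # sample times in seconds
signal <- sin(2 * pi * 100 * t) + sin(2 * pi * 200 * t)

# Amplitude spectrum for the frequencies below the Nyquist limit.
n <- length(signal)
amp <- Mod(fft(signal))[1:(n / 2)]
freq_khz <- (0:(n / 2 - 1)) * fs / n / 1000

# Normalize the spectrum so it sums to 1, like a probability distribution.
p <- amp / sum(amp)

# Spectrum-weighted mean and standard deviation of frequency (kHz).
meanfreq <- sum(freq_khz * p)
sd_freq <- sqrt(sum(p * (freq_khz - meanfreq)^2))

# Quartiles from the cumulative distribution, then the interquartile range.
cum <- cumsum(p)
q25 <- freq_khz[which(cum >= 0.25)[1]]
q75 <- freq_khz[which(cum >= 0.75)[1]]
iqr_freq <- q75 - q25

print(c(meanfreq = meanfreq, sd = sd_freq, IQR = iqr_freq))
```

For this two-tone signal the mean lands midway between the tones, at about 0.15 kHz, and the interquartile range spans the gap between them.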

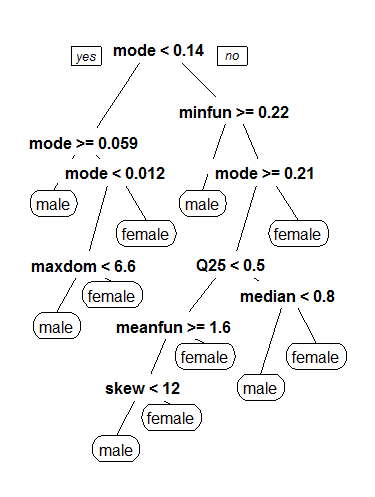

Visualization and Decision Trees

After training the model, we can visualize decision trees that help us understand how specific features contribute to gender classification:

Mean fundamental frequency emerges as a significant indicator, with a threshold of 140 Hz separating male from female classifications (lower values being classified as male).
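
A single split like this can be written as a one-line rule. The sketch below is a hypothetical helper, not the trained tree itself. It assumes `meanfun` is stored in kHz (so 140 Hz becomes 0.14) and that values below the threshold map to male.

```r
# Hypothetical one-split classifier based on mean fundamental frequency.
# Assumptions: meanfun is in kHz, and lower values are classified as "male".
classify_by_meanfun <- function(meanfun_khz, threshold_khz = 0.14) {
  ifelse(meanfun_khz < threshold_khz, "male", "female")
}

# A few invented values on either side of the threshold.
example_meanfun <- c(0.10, 0.139, 0.14, 0.21)
predictions <- classify_by_meanfun(example_meanfun)
print(predictions)
```

A real decision tree adds further splits on other features below this one, but the top split is the one that carries most of the signal.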

Troubleshooting and Tips

As you delve into this project, you may encounter some challenges. Here are a few troubleshooting tips:

- Ensure your R environment is properly set up to handle dataset loading and analysis.

- If model accuracy is lower than expected, consider narrowing the analyzed frequency range (for example, to 0 Hz to 280 Hz, as noted above).

- If you face difficulties during the model evaluation phase, double-check your dataset for discrepancies such as missing values, duplicated rows, or unbalanced labels.
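
The dataset check in the last tip can be partly automated. Here is a minimal sketch using base R. `check_dataset` is a hypothetical helper, the `label` column name is an assumption, and the toy rows are invented for illustration.

```r
# Hypothetical helper: summarizes common data problems in a labeled data frame.
check_dataset <- function(df, label_col = "label") {
  list(
    n_rows = nrow(df),
    n_missing = sum(is.na(df)),          # total NA cells
    n_duplicates = sum(duplicated(df)),  # rows identical to an earlier row
    label_counts = table(df[[label_col]], useNA = "ifany")
  )
}

# Tiny invented example with one missing value and one duplicated row.
toy <- data.frame(
  meanfun = c(0.11, 0.19, NA, 0.19),
  label = c("male", "female", "male", "female")
)
report <- check_dataset(toy)
print(report)
```

Running the same helper on the full dataset gives a quick sanity check before any model is trained or evaluated.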

Conclusion

In this guide, we walked through voice gender recognition step by step: the dataset, the acoustic properties, the models and their accuracy, and the decision tree that helps explain the result. With continued refinement, gender identification by voice may prove useful in a range of applications.
