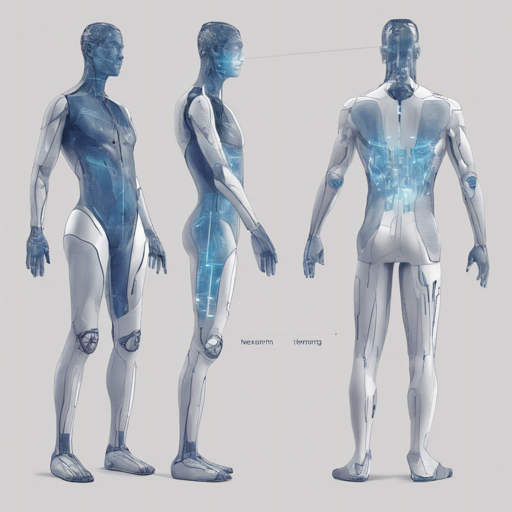

Welcome to the fascinating world of human pose estimation! In this blog, we will explore how to implement the “Deep High-Resolution Representation Learning for Human Pose Estimation” framework. This framework emphasizes maintaining reliable high-resolution representations throughout the pose estimation process.

Understanding the Concept

Imagine you are an artist tasked with painting a group of people in a lively scene. Instead of starting with rough outlines and gradually adding details, you begin with high-resolution sketches of each person, ensuring every feature is captured accurately right from the start. This is akin to how HRNet operates: it maintains high-resolution representations throughout the entire estimation process. Traditional methods typically downsample to low-resolution representations and then try to recover the detail; HRNet keeps a high-resolution stream from start to finish, which leads to more accurate keypoint predictions.
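To make the intuition concrete, here is a toy sketch in plain Python. It is not HRNet and not the authors' code: the helper names (`downsample`, `upsample`, `argmax`) and the 16-sample "heatmap" are made up for illustration. It only shows how a keypoint position can drift once a signal has been reduced to low resolution and scaled back up.

```python
def downsample(signal, factor):
    """Average-pool a 1D signal by an integer factor."""
    return [
        sum(signal[i:i + factor]) / factor
        for i in range(0, len(signal), factor)
    ]


def upsample(signal, factor):
    """Nearest-neighbour upsampling: repeat each value `factor` times."""
    return [value for value in signal for _ in range(factor)]


def argmax(signal):
    """Index of the first maximum value in the signal."""
    return max(range(len(signal)), key=lambda i: signal[i])


# Hypothetical 16-sample "heatmap" with one keypoint response at index 13.
heatmap = [0.0] * 16
heatmap[13] = 1.0

# High-resolution path: the peak location is kept exactly.
high_res_peak = argmax(heatmap)

# Low-resolution path: downsample by 4, then upsample back to 16 samples.
recovered = upsample(downsample(heatmap, 4), 4)
low_res_peak = argmax(recovered)

print(high_res_peak, low_res_peak)  # prints "13 12"
```

The peak moves from index 13 to index 12 after the round trip, because everything inside a pooled cell becomes indistinguishable. Keeping a high-resolution stream alive is one way to avoid this kind of loss.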

Quick Start Guide

Installation

- Install PyTorch v1.0.0 or later following the official instructions. Note that you only need to disable cuDNN’s implementation of the BatchNorm layer if you use a PyTorch version earlier than v1.0.0.

- Clone the repository into a directory we will refer to as $POSE_ROOT.

- Install dependencies by running:

pip install -r requirements.txt

- Build the necessary libraries:

cd $POSE_ROOT/lib

make

- Set up COCOAPI by cloning the COCOAPI repository.

Data Preparation

For training and testing, you’ll need to prepare the required datasets.

- For MPII data, download the dataset from the MPII Human Pose Dataset website. Convert the annotation files to JSON format and extract them under $POSE_ROOT/data.

- For COCO data, download the images and annotations from the COCO download page. Ensure the folder structure under $POSE_ROOT/data mirrors the one specified in the repository documentation.
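Before training, it can help to confirm that the data folders are where the code expects them. The sketch below is a minimal checker, not part of the repository: `missing_paths` is a hypothetical helper, and the entries in `EXPECTED_PATHS` are assumptions for illustration that you should replace with the layout given in the repository documentation.

```python
import os
from pathlib import Path

# Illustrative layout only: these relative paths are assumptions and should be
# replaced with the structure given in the repository documentation.
EXPECTED_PATHS = [
    "mpii/annot",
    "mpii/images",
    "coco/annotations",
    "coco/images",
]


def missing_paths(data_root, expected=EXPECTED_PATHS):
    """Return the expected relative paths that do not exist under data_root."""
    root = Path(data_root)
    return [rel for rel in expected if not (root / rel).exists()]


if __name__ == "__main__":
    # Fall back to the current directory when POSE_ROOT is not set.
    pose_root = os.environ.get("POSE_ROOT", ".")
    missing = missing_paths(Path(pose_root) / "data")
    if missing:
        print("Missing under data/:", ", ".join(missing))
    else:
        print("Data layout looks complete.")
```

Run it from a shell where POSE_ROOT is exported; any path it lists is one to create or re-extract before moving on.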

Testing and Training

You can test a trained model on MPII by passing a config file and a model file to the test script:

python tools/test.py --cfg experiments/mpii/hrnet_w32_256x256_adam_lr1e-3.yaml TEST.MODEL_FILE models/pytorch/pose_mpii/pose_hrnet_w32_256x256.pth

For training, use:

python tools/train.py --cfg experiments/mpii/hrnet_w32_256x256_adam_lr1e-3.yaml

Troubleshooting

If you encounter issues during setup, consider the following troubleshooting tips:

- Verify that your Python version is compatible (Python 3.6 is recommended).

- Ensure that your NVIDIA GPUs are set up correctly and that the drivers are installed.

- If the code doesn’t run as expected, check that COCOAPI is installed in the Python environment you are using and that its paths are set correctly.
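The checks above can be sketched as a short script. This is a minimal example under a few assumptions: `check_environment` and `cuda_available` are hypothetical helper names, and the script assumes PyTorch is importable as `torch` and COCOAPI as `pycocotools`. It only reports what it finds and does not fix anything.

```python
import importlib.util
import sys


def check_environment(min_python=(3, 6), modules=("torch", "pycocotools")):
    """Report whether the interpreter and the required modules look usable."""
    report = {"python_ok": sys.version_info[:2] >= tuple(min_python)}
    for name in modules:
        # find_spec returns None when a top-level module cannot be located.
        report[name] = importlib.util.find_spec(name) is not None
    return report


def cuda_available():
    """Return torch.cuda.is_available(), or None if torch is not installed."""
    if importlib.util.find_spec("torch") is None:
        return None
    import torch  # imported lazily so the script still runs without PyTorch
    return torch.cuda.is_available()


if __name__ == "__main__":
    for item, ok in check_environment().items():
        print(f"{item}: {'ok' if ok else 'MISSING'}")
    print("CUDA available:", cuda_available())
```

If `pycocotools` shows as missing, COCOAPI was most likely installed into a different Python environment than the one running the script.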


Conclusion

Congratulations on delving into high-resolution representation learning for human pose estimation! By following the steps outlined in this guide, you’re now well-equipped to implement and experiment with this technology.