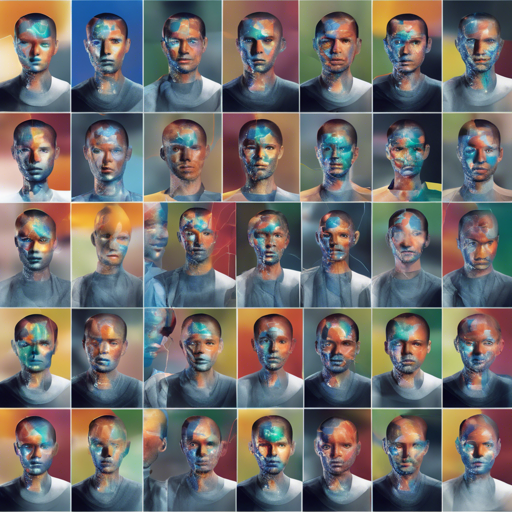

Welcome to this guide on running Deep Face Super-Resolution with Iterative Collaboration between Attentive Recovery and Landmark Estimation (DIC), a face super-resolution (SR) method presented at CVPR 2020. We’ll go step by step through dependencies, dataset preparation, training, testing, and evaluation.

Prerequisites

Before diving into the implementation, ensure you have the following dependencies:

- Python 3 (Recommended: Anaconda)

- PyTorch 1.1.0

- NVIDIA GPU + CUDA

- Python packages: Use the command

pip install numpy opencv-python tqdm imageio pandas matplotlib tensorboardX
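
After installing, it can help to confirm that the environment actually sees every package. The snippet below is a minimal sketch, not part of the project: `REQUIRED_MODULES` and `find_missing` are hypothetical names, and the list simply mirrors the dependencies above (note that `opencv-python` is imported as `cv2` and PyTorch as `torch`).

```python
import importlib.util

# Import names for the dependencies listed above (assumed mapping:
# opencv-python -> cv2, PyTorch -> torch).
REQUIRED_MODULES = [
    "torch", "numpy", "cv2", "tqdm", "imageio",
    "pandas", "matplotlib", "tensorboardX",
]


def find_missing(modules=REQUIRED_MODULES):
    """Return the module names that cannot be located in this environment."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = find_missing()
    if missing:
        print("Not found:", ", ".join(missing))
    else:
        print("All listed modules were found.")
    # Only query CUDA when PyTorch itself is installed.
    if "torch" not in missing:
        import torch
        print("PyTorch", torch.__version__, "| CUDA available:", torch.cuda.is_available())
```

If `torch.cuda.is_available()` prints `False`, the GPU driver or CUDA setup is worth checking before starting a training run.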

Dataset Preparation

To train and test your model, you’ll need to download the datasets and models:

- CelebA dataset can be downloaded here. Unzip the img_celeba.7z file.

- Helen dataset can be downloaded here. Unzip all five parts of the images.

- Testing sets for both CelebA and Helen can be downloaded from Google Drive or Baidu Drive (extraction code: 6qhx).

Download the landmark annotations and pretrained models from the same links as the testing sets, then place them in the directories referenced by your option (JSON) files.
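
A quick way to confirm that an archive was fully extracted is to count the image files under the dataset folder. This is a minimal sketch under stated assumptions: `count_images` and `IMAGE_EXTENSIONS` are hypothetical names, and the extension list is a guess at common image formats, not something taken from the project.

```python
from pathlib import Path

# Assumed set of image extensions; extend it if your data uses other formats.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def count_images(root):
    """Count image files (matched by extension) anywhere under root."""
    root = Path(root)
    if not root.is_dir():
        raise FileNotFoundError(f"Not a directory: {root}")
    return sum(
        1
        for path in root.rglob("*")
        if path.is_file() and path.suffix.lower() in IMAGE_EXTENSIONS
    )
```

Calling it on the folder where you unzipped a dataset (the path is yours to fill in) and getting zero, or far fewer files than expected, usually points to an incomplete extraction.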

Training the Model

To train your model, follow these steps:

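- Open your terminal, navigate to the code folder, and start training:

cd code

python train.py -opt options/train/train_(DIC|DICGAN)_(CelebA|Helen).json

In the file name, (DIC|DICGAN) and (CelebA|Helen) each mean "pick one", for example train_DIC_CelebA.json.

- Monitor the training run with TensorBoard:

tensorboard --logdir tb_logger/NAME_OF_YOUR_EXPERIMENT

Testing the Model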
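
The option file passed to train.py and test.py is named after a model (DIC or DICGAN) and a dataset (CelebA or Helen). As a minimal sketch, assuming every combination exists under options/train and options/test, the helper below builds the file path and the matching command. `option_path` and `build_command` are hypothetical names, not part of the project.

```python
MODELS = ("DIC", "DICGAN")
DATASETS = ("CelebA", "Helen")


def option_path(phase, model, dataset):
    """Build the option-file path for a phase ('train' or 'test')."""
    if phase not in ("train", "test"):
        raise ValueError(f"phase must be 'train' or 'test', got {phase!r}")
    if model not in MODELS:
        raise ValueError(f"model must be one of {MODELS}, got {model!r}")
    if dataset not in DATASETS:
        raise ValueError(f"dataset must be one of {DATASETS}, got {dataset!r}")
    return f"options/{phase}/{phase}_{model}_{dataset}.json"


def build_command(phase, model, dataset):
    """Return the argument list for running train.py or test.py."""
    return ["python", f"{phase}.py", "-opt", option_path(phase, model, dataset)]
```

The returned list can be handed to `subprocess.run(..., cwd="code", check=True)` so the script runs from the code folder, which is what the `cd code` step does by hand.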

To generate super-resolution images using the trained model:

- Navigate to your code directory and run the test script:

cd code

python test.py -opt options/test/test_(DIC|DICGAN)_(CelebA|Helen).json

The SR images are saved under results/test_name/dataset_name. The PSNR and SSIM values are recorded in result.json, and average results are stored in average_result.txt.

Evaluation of Results
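
The PSNR figures recorded in result.json measure pixel-level fidelity. As a rough illustration of the standard definition, PSNR = 10 · log10(MAX² / MSE), here is a minimal sketch that works on flattened pixel sequences. The function name `psnr` and the 8-bit default for `max_value` are assumptions; the project's own computation may differ in details such as color space or border cropping, so values may not match result.json exactly.

```python
import math


def psnr(reference, restored, max_value=255.0):
    """PSNR in dB between two equal-length sequences of pixel values."""
    # Convert to float first so unsigned 8-bit inputs cannot wrap on subtraction.
    reference = [float(v) for v in reference]
    restored = [float(v) for v in restored]
    if not reference or len(reference) != len(restored):
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((a - b) ** 2 for a, b in zip(reference, restored)) / len(reference)
    if mse == 0:
        return math.inf  # identical inputs: no error to measure
    return 10.0 * math.log10(max_value ** 2 / mse)
```

Higher values mean the restored image is closer to the reference; identical inputs give infinity.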

To evaluate the super-resolution results through landmark detection, follow these steps:

- Download the required landmark annotation file here.

- Place the downloaded file into the ./models directory.

- Run the evaluation script:

python eval_landmark.py --info_path path_to_landmark_annotations --data_root path_to_result_images

Results are written to path_to_result_images/landmark_result.json and averages to landmark_average_result.txt.

Troubleshooting

If you encounter issues:

- Ensure all paths in the JSON files are correct.

- Check if your dataset is unzipped and the required files are present.

- Ensure you are using the correct Python and package versions.
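
The first check above can be partly automated. The sketch below loads an option file and lists string values that look like filesystem paths but do not exist. It rests on two assumptions: that the file parses as strict JSON (no comments), and that any string containing a path separator is a path. `find_missing_paths` and `check_option_file` are hypothetical helpers, not part of the project.

```python
import json
import os


def find_missing_paths(options, prefix=""):
    """Recursively collect (key, value) pairs whose string value looks like
    a filesystem path but does not exist on disk."""
    if isinstance(options, dict):
        items = options.items()
    elif isinstance(options, list):
        items = enumerate(options)
    else:
        return []
    missing = []
    for key, value in items:
        name = f"{prefix}.{key}" if prefix else str(key)
        if isinstance(value, (dict, list)):
            missing.extend(find_missing_paths(value, name))
        # Heuristic (assumption): a string with a separator is treated as a path.
        elif isinstance(value, str) and ("/" in value or os.sep in value):
            if not os.path.exists(os.path.expanduser(value)):
                missing.append((name, value))
    return missing


def check_option_file(json_path):
    """Load an option file and report path-like values that do not exist."""
    with open(json_path, "r", encoding="utf-8") as f:
        return find_missing_paths(json.load(f))
```

Output locations that the scripts create on demand may legitimately show up as missing, so treat the result as a list of things to look at, not a list of errors.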
