This guide walks through setting up and running a depth map fusion method based on the ICRA 2019 submission "Real-time Scalable Dense Surfel Mapping". The method fuses a sequence of depth images, intensity images, and camera poses into a globally consistent model using a surfel representation. It supports both ORB-SLAM2 and VINS-Mono, so it can be used in RGB-D, stereo, or visual-inertial setups.

Understanding Dense Surfel Mapping

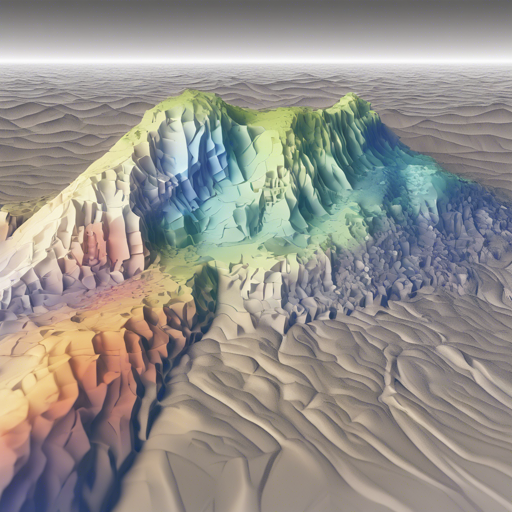

Imagine you are assembling a jigsaw puzzle. Each piece represents a depth image with its camera pose, and each has its own pattern. To create the complete picture, you must connect the pieces so that they fit together seamlessly. That is what the Dense Surfel Mapping method does: it combines individual depth maps and camera poses into a coherent model of an environment.
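To make the idea of combining depth maps and camera poses concrete, here is a minimal sketch in plain Python. It back-projects one depth pixel with a pinhole camera model, moves it into the world frame with a camera pose, and merges it into an existing surfel by a weighted running average. The `Surfel` fields, the function names, and the intrinsics used in the example are hypothetical, and the weighted average is a generic fusion rule chosen for illustration, not necessarily the update used in the paper.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Surfel:
    """A small oriented surface patch (hypothetical minimal layout)."""
    position: Vec3  # centre in the world frame
    normal: Vec3    # unit surface normal
    weight: float   # accumulated confidence


def backproject(u, v, depth, fx, fy, cx, cy) -> Vec3:
    """Pinhole back-projection of pixel (u, v) into the camera frame."""
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)


def to_world(p_cam, rotation, translation) -> Vec3:
    """Apply the camera pose: p_world = R * p_cam + t (R given as 3 rows)."""
    return tuple(
        sum(rotation[i][j] * p_cam[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


def fuse(surfel, point, normal, w=1.0) -> Surfel:
    """Merge a new measurement into a surfel by weighted averaging."""
    total = surfel.weight + w
    position = tuple(
        (surfel.weight * a + w * b) / total
        for a, b in zip(surfel.position, point)
    )
    blended = tuple(surfel.weight * a + w * b
                    for a, b in zip(surfel.normal, normal))
    length = sum(c * c for c in blended) ** 0.5
    # Keep the old normal if the blended one degenerates to zero length.
    new_normal = (tuple(c / length for c in blended)
                  if length > 0 else surfel.normal)
    return Surfel(position, new_normal, total)


# Example with made-up intrinsics and an identity pose.
IDENTITY = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
p_cam = backproject(600.0, 180.0, 3.0, fx=700.0, fy=700.0, cx=600.0, cy=180.0)
p_world = to_world(p_cam, IDENTITY, (0.0, 0.0, 0.0))
existing = Surfel((0.0, 0.0, 1.0), (0.0, 0.0, 1.0), 1.0)
fused = fuse(existing, p_world, (0.0, 0.0, 1.0))
print(fused)
```

A real surfel would typically also carry a radius and an intensity or color value; they are left out here to keep the sketch short.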

The goals for this fusion method are as follows:

- Support for Loop Closure: Just like checking whether your puzzle pieces match the picture on the box, this method can revisit and correct earlier parts of the map after a loop closure, so the model stays globally consistent with the SLAM method it is paired with.

- Efficient Resource Usage: Although the task is complex, the method is designed to keep CPU and memory usage low. Think of it as carefully managing your workspace while working on the puzzle.

- Scalability: Assembling puzzles can be tricky with larger sets. Similarly, this method is designed to handle expansive environments, making robot navigation feasible.

Getting Started with the Software

Here’s how you can set up the Dense Surfel Mapping system:

- Download the necessary components:

  - ORB-SLAM2 (modified version).

  - Surfel Fusion system, which you can build within your ROS catkin workspace using the command `catkin_make`.

  - A simple Python publisher for handling the KITTI dataset.

- Get the KITTI dataset (sequence 00) using this link: Dataset.

- Change relevant lines in scripts like `publisher.py` and `orb_kitti_launch.sh` to match your downloaded paths and environment settings.

Running the Code

After installing all components, follow these steps to execute the code:

- Open four terminal windows:

  - Run ORB-SLAM2 with `orb_kitti_launch.sh`.

  - Run Surfel Fusion with `roslaunch surfel_fusion kitti_orb.launch`.

  - Run the KITTI Publisher with `rosrun kitti_publisher publisher.py`.

  - Run RViz with `rviz` (you can load `rviz_config.rviz` to visualize published messages).

- Press any key on the publisher’s window to start the process. Press **Esc** to quit.
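If you prefer to keep the four commands in one place, the sketch below collects them in a small Python helper. The commands themselves come from the steps above; the helper names `build_commands` and `launch_all`, the startup delay, and the assumption that `orb_kitti_launch.sh` is executable in the current directory are hypothetical. Nothing is started until you call `launch_all` yourself.

```python
import shlex
import subprocess
import time


def build_commands(orb_script="./orb_kitti_launch.sh"):
    """Return (name, argv) pairs in the order given in the steps above.

    orb_script is an assumed location; point it at your own copy.
    """
    return [
        ("ORB-SLAM2", [orb_script]),
        ("Surfel Fusion",
         shlex.split("roslaunch surfel_fusion kitti_orb.launch")),
        ("KITTI publisher",
         shlex.split("rosrun kitti_publisher publisher.py")),
        ("RViz", ["rviz"]),
    ]


def launch_all(commands, delay_s=2.0):
    """Start each command as a child process, pausing between starts."""
    processes = []
    for name, argv in commands:
        print(f"starting {name}: {' '.join(argv)}")
        processes.append(subprocess.Popen(argv))
        time.sleep(delay_s)  # arbitrary pause so each component can come up
    return processes


# Print the plan without starting anything.
for name, argv in build_commands():
    print(f"{name:16s} {' '.join(argv)}")
```

Calling `launch_all(build_commands())` only makes sense in a shell where your ROS workspace has been sourced. Because the publisher waits for a key press in its own window, running it in a separate terminal as described above may still be the more convenient option.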

Saving the Results

To save the reconstructed model, edit the line in `surfel_fusion/launch/kitti_orb.launch` that sets the save path. Then interrupt the process with Ctrl+C in the Surfel Fusion terminal, and it will save the meshes to that path. You can view the saved mesh in CloudCompare or other software that supports the format.

Troubleshooting

If you encounter any issues, make sure to:

- Double-check your dataset paths in the respective scripts.

- Ensure all software components are correctly installed and compiled.

- Refer back to the README files for specific instructions.
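The first two checks can be automated with a few lines of Python. This is a minimal sketch that reports which paths do not exist and which command-line tools are not on your `PATH`. The function names and the example paths are hypothetical, so substitute the paths you actually set in `publisher.py` and `orb_kitti_launch.sh`.

```python
import os
import shutil


def missing_paths(paths):
    """Return the entries of paths that do not exist on disk."""
    return [p for p in paths if not os.path.exists(os.path.expanduser(p))]


def missing_tools(tools=("catkin_make", "roslaunch", "rosrun", "rviz")):
    """Return the tools that cannot be found on the current PATH."""
    return [t for t in tools if shutil.which(t) is None]


# Placeholder paths for illustration only; replace them with your own.
paths_to_check = [
    "~/datasets/kitti/sequences/00",
    "~/catkin_ws/src",
]

for path in missing_paths(paths_to_check):
    print(f"missing path: {path}")
for tool in missing_tools():
    print(f"not on PATH: {tool}")
```

If the ROS tools are reported as missing even though they are installed, the usual cause is that the ROS environment has not been sourced in that terminal.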


Conclusion

Implementing the Depth Map Fusion method is an exciting journey that brings together computer vision and robotics in real-time applications.