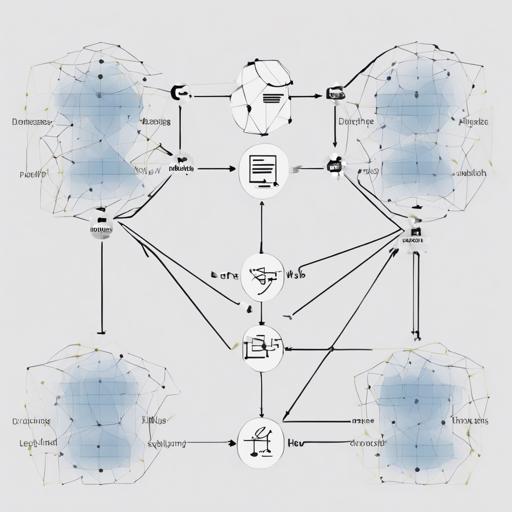

Extracting relations among entities across a whole document can be as intricate as untangling a web of connections. This post walks you through setting up and running the Graph Aggregation-and-Inference Network (GAIN), a double-graph-based reasoning approach to document-level relation extraction. The framework reasons across multiple sentences in a document instead of treating each sentence in isolation.

Understanding the GAIN Framework

To appreciate the GAIN framework, think of it as a sophisticated spider weaving a web. Each silk thread represents a relationship, while each node on the web represents an entity or mention within the document. The sheer number of intersections allows the spider (your GAIN model) to navigate complex paths effectively. Unlike sentence-level extraction, GAIN enables the spider to span across the entire web, taking into account multiple threads to grasp a clearer picture of the relational landscape.
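As a toy illustration of why the reasoning has to span sentences, the sketch below links entities that share a sentence and then searches for a path between two entities that never appear together. It is not GAIN's actual graph construction; the example document, the entity names, and both helper functions are hypothetical.

```python
from collections import defaultdict, deque
from itertools import combinations


def build_cooccurrence_graph(sentences):
    """Link two entities whenever they are mentioned in the same sentence."""
    graph = defaultdict(set)
    for entities in sentences:
        for a, b in combinations(sorted(set(entities)), 2):
            graph[a].add(b)
            graph[b].add(a)
    return graph


def shortest_path(graph, start, goal):
    """Breadth-first search; returns the entities on a shortest path, or None."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for neighbour in sorted(graph[path[-1]]):
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append(path + [neighbour])
    return None


# Hypothetical document: each inner list holds the entities mentioned in one sentence.
doc = [
    ["Marie Curie", "Warsaw"],
    ["Warsaw", "Poland"],
]
graph = build_cooccurrence_graph(doc)
path = shortest_path(graph, "Marie Curie", "Poland")
print(path)
```

Here "Marie Curie" and "Poland" never share a sentence, so a sentence-level extractor has nothing to work with, while the graph still connects them through "Warsaw".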

Package Overview

In your GAIN package, you’ll find the following components:

- checkpoint: Store model checkpoints for later use.

- fig_result: Plot AUC curves for performance review.

- logs: Maintain logs of training and evaluation.

- models:

  - GAIN.py: The GAIN model for GloVe or BERT versions.

  - GAIN_nomention.py: An ablation model without mention graphs.

- config.py: Manage command line arguments.

- data.py: Define datasets and dataloaders for GAIN.

- test.py: Evaluation scripts.

- train.py: Scripts for training the model.

- utils.py: Miscellaneous tools for training and evaluation.

- PLM: Contains pre-trained language models like BERT_base.

System Requirements

Before embarking on your journey with GAIN, ensure you have the proper environment set up:

- Python: 3.7.4

- CUDA: 10.2

- Ubuntu: 18.04

Installation Dependencies

The following libraries must be installed for the project to run smoothly:

- numpy (version 1.19.2)

- matplotlib (version 3.3.2)

- torch (version 1.6.0)

- transformers (version 3.1.0)

- dgl-cu102 (version 0.4.3)

- scikit-learn (version 0.23.2)

Note: Avoid dgl 0.5, as it is not compatible with the current codebase.
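Before training, it can help to confirm that the installed versions match the ones listed above. The following is a minimal sketch, not part of the GAIN package: the helper names `installed_version` and `find_mismatches` are made up for illustration, and the comparison deliberately ignores local build suffixes such as `+cu102`.

```python
# Versions taken from the dependency list above.
REQUIRED = {
    "numpy": "1.19.2",
    "matplotlib": "3.3.2",
    "torch": "1.6.0",
    "transformers": "3.1.0",
    "dgl-cu102": "0.4.3",
    "scikit-learn": "0.23.2",
}


def installed_version(name):
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        from importlib.metadata import PackageNotFoundError, version  # Python 3.8+
    except ImportError:
        import pkg_resources  # fallback for Python 3.7 (provided by setuptools)
        try:
            return pkg_resources.get_distribution(name).version
        except pkg_resources.DistributionNotFound:
            return None
    try:
        return version(name)
    except PackageNotFoundError:
        return None


def _base(version_string):
    """Drop a local build suffix, e.g. '1.6.0+cu102' becomes '1.6.0'."""
    return version_string.split("+")[0] if version_string else None


def find_mismatches(installed, required=REQUIRED):
    """Map each package whose version differs to (required, found)."""
    return {
        name: (want, installed.get(name))
        for name, want in required.items()
        if _base(installed.get(name)) != want
    }


if __name__ == "__main__":
    installed = {name: installed_version(name) for name in REQUIRED}
    for name, (want, found) in find_mismatches(installed).items():
        print(f"{name}: required {want}, found {found or 'not installed'}")
```

Because dgl-cu102 is pinned to 0.4.3 here, an incompatible dgl 0.5 build installed under that name would show up as a mismatch.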

Preparing Your Dataset

Step 1: Downloading Data

Begin by downloading the data from the Google Drive link provided by the DocRED authors. After downloading, move these files into your data directory:

- train_annotated.json

- dev.json

- test.json

- word2id.json

- ner2id.json

- rel2id.json

- vec.npy
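A quick way to confirm that the download is complete is to check for each file by name. The sketch below assumes the files sit in a directory called `data` relative to where the script is run; that location and the helper name `missing_files` are assumptions, so adjust them to your layout.

```python
from pathlib import Path

# File names taken from the list above.
REQUIRED_FILES = [
    "train_annotated.json",
    "dev.json",
    "test.json",
    "word2id.json",
    "ner2id.json",
    "rel2id.json",
    "vec.npy",
]


def missing_files(data_dir, required=REQUIRED_FILES):
    """Return the required file names that are not present in data_dir."""
    data_dir = Path(data_dir)
    return [name for name in required if not (data_dir / name).is_file()]


if __name__ == "__main__":
    missing = missing_files("data")  # assumed location of the data directory
    if missing:
        print("Missing files:", ", ".join(missing))
    else:
        print("All dataset files are in place.")
```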

Step 2: (Optional) Pre-trained Language Models

If you wish to use pre-trained language models, follow the steps in this link to download the necessary files (e.g., pytorch_model.bin, config.json, vocab.txt) into the PLM/bert-????-uncased directory.

Training Your Model

To start training, navigate to the code directory and run the appropriate shell script. For instance:

```bash
cd code
./runXXX.sh gpu_id
```

Replace XXX with the desired script name, such as GAIN_BERT. You can monitor the logs using:

```bash
tail -f -n 2000 logs/train_xxx.log
```

Evaluating Your Model

Evaluation is equally simple. Change to your code directory, then run:

```bash
cd code
./evalXXX.sh gpu_id threshold(optional)
```

Again, replace XXX with the script name and use threshold = -1 (default) for optimal results. To monitor logs, use:

```bash
tail -f -n 2000 logs/test_xxx.log
```

Submitting Results to CodaLab

Once you have your test results:

- Rename the JSON output file to result.json and compress it to result.zip.

- Submit this file to CodaLab.
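The rename-and-compress step can be scripted with the standard library. This is a minimal sketch: the function name `package_result` and the input file name in the usage note are hypothetical, and it copies the output file instead of renaming it so that the original stays in place.

```python
import shutil
import zipfile
from pathlib import Path


def package_result(output_json, out_dir="."):
    """Copy the test output to result.json and compress it into result.zip."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)

    result_json = out_dir / "result.json"
    # Copy rather than rename, unless the file already is result.json.
    if Path(output_json).resolve() != result_json.resolve():
        shutil.copyfile(output_json, result_json)

    archive = out_dir / "result.zip"
    with zipfile.ZipFile(archive, "w", zipfile.ZIP_DEFLATED) as zf:
        # Store the file at the top level of the archive.
        zf.write(result_json, arcname="result.json")
    return archive
```

For example, `package_result("my_test_output.json", "submission")` would leave `submission/result.json` and `submission/result.zip` ready to upload, where `my_test_output.json` stands in for whatever file your evaluation run produced.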

Troubleshooting

If you encounter issues during installation or execution, consider the following troubleshooting tips:

- Ensure all required dependencies are installed and compatible versions are used.

- Check your dataset file paths to confirm they are correct.

- Refer to model logs for detailed error messages which can guide your next steps.

