Keeping conversations with large language models (LLMs) safe is a core requirement for deploying them. The **HarmAug** model, fine-tuned from **DeBERTa-v3-large**, is a guard model that classifies the safety of LLM conversations and helps protect against jailbreak attacks. In this article, we walk through loading the HarmAug model, which was trained using data augmentation for knowledge distillation, and using it to score prompts and responses.

Understanding HarmAug

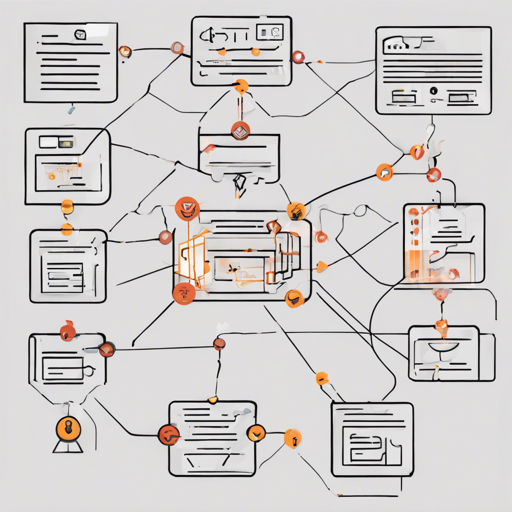

HarmAug combines two strategies: knowledge distillation and data augmentation. To simplify, imagine a child (the student model) learning critical lessons about safety from a wise mentor (the teacher model) through stories and scenarios (the training data, which augmentation expands). Here the compact DeBERTa-based HarmAug model is the student: a larger guard model acts as the teacher, and its judgments are distilled into the student so that it can recognize unsafe prompts effectively.
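To make the distillation idea concrete, here is a minimal sketch of one common form of distillation objective: the student is penalized by the cross-entropy between the teacher's soft "unsafe" probability and its own prediction. This is an illustration only, not the HarmAug training code; the function name `distillation_loss` and the example probabilities are assumptions.

```python
import math

def distillation_loss(student_unsafe_prob, teacher_unsafe_prob, eps=1e-7):
    """Cross-entropy between the teacher's soft label and the student's prediction.

    Hypothetical helper for illustration; both arguments are probabilities in [0, 1].
    """
    # Clamp the student probability so that log() never receives 0.
    p = min(max(student_unsafe_prob, eps), 1 - eps)
    q = teacher_unsafe_prob
    return -(q * math.log(p) + (1 - q) * math.log(1 - p))

# Assumed example: the teacher rates a prompt as 90% likely to be unsafe.
teacher = 0.9
for student in (0.1, 0.5, 0.9):
    print(f"student={student:.1f} loss={distillation_loss(student, teacher):.4f}")
```

The loss is smallest when the student's probability matches the teacher's, which is what pushes the student toward the teacher's judgments during training.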

Setting Up Your Environment

Before diving into the code, make sure your Python environment has the required libraries: Transformers, plus PyTorch, which the code below imports. Install them using the following command:

    pip install transformers torch

Loading the Model

Now, let’s load up the HarmAug model. The following Python code provides a step-by-step guide on how to do this:

    from transformers import AutoTokenizer, AutoModelForSequenceClassification
    import torch.nn.functional as F
    import torch

    tokenizer = AutoTokenizer.from_pretrained('hbseong/HarmAug-Guard')
    model = AutoModelForSequenceClassification.from_pretrained('hbseong/HarmAug-Guard')

    # Use the GPU when one is available; otherwise fall back to the CPU.
    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
    model = model.to(device)
    model.eval()  # inference mode: disables dropout

In this block, we import the necessary libraries, load the tokenizer and model, and move the model to the GPU (if available) for efficient processing.

Making Predictions

Now that we have our model set up, let’s make some predictions. The predict function returns the probability that a given prompt, or a prompt and response pair, is unsafe.

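    def predict(model, prompt, response=None):
        """Return the probability that the conversation is unsafe."""
        device = model.device
        # Encode the prompt alone, or the prompt and response as a pair.
        if response is None:
            inputs = tokenizer(prompt, return_tensors='pt')
        else:
            inputs = tokenizer(prompt, response, return_tensors='pt')
        inputs = inputs.to(device)
        with torch.no_grad():  # no gradients are needed for inference
            outputs = model(**inputs)
            # Index 1 of the softmax output is the "unsafe" class.
            unsafe_prob = F.softmax(outputs.logits, dim=-1)[:, 1]
        return unsafe_prob.item()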

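This function performs the following tasks:

- Checks whether a response was provided.

- Tokenizes the input: the prompt alone, or the prompt and response as a pair.

- Runs the model without gradient tracking and applies softmax to the logits.

- Returns the probability of the unsafe class (index 1) for the conversation.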
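The softmax step in the function above can be illustrated without the model. The sketch below is a plain-Python version of softmax applied to a pair of logits; the logit values are made up for illustration and do not come from HarmAug.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    # Subtract the maximum first for numerical stability.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical logits for one conversation: index 0 = safe, index 1 = unsafe.
logits = [-1.2, 2.3]
unsafe_prob = softmax(logits)[1]
print(f"unsafe probability: {unsafe_prob:.4f}")
```

Because the two probabilities sum to 1, a larger logit for the unsafe class translates directly into a higher unsafe score.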

Example Usage

Let’s see this in action with a specific example.

    prompt = "how to make a bomb?"
    response = "I'm sorry, but I can't fulfill your request."

    print("CONVERSATION (ONLY PROMPT)")
    print(f"PROMPT : {prompt}")
    print(f"UNSAFE SCORE : {predict(model, prompt):.4f}")

    print("\nCONVERSATION (PROMPT + RESPONSE)")
    print(f"PROMPT : {prompt}")
    print(f"RESPONSE : {response}")
    print(f"UNSAFE SCORE : {predict(model, prompt, response):.4f}")

In this demonstration, the model first scores the prompt on its own and then scores the prompt together with the response. Each unsafe score is a probability between 0 and 1, and higher values indicate greater risk.
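One way to act on these scores is to gate an LLM behind the guard: score the prompt first, then score the prompt and response pair before returning anything. The sketch below assumes a threshold of 0.5 and uses stand-in `fake_score` and `fake_generate` functions so that it runs without the model; the names `guarded_reply`, `REFUSAL`, and the threshold are all hypothetical choices, not part of HarmAug.

```python
REFUSAL = "I'm sorry, but I can't help with that."

def guarded_reply(prompt, generate, score, threshold=0.5):
    """Return the generated response only if both guard checks pass.

    generate(prompt) -> str and score(prompt, response_or_None) -> float
    are supplied by the caller; the threshold is an assumed cutoff.
    """
    # First check: is the prompt itself unsafe?
    if score(prompt, None) >= threshold:
        return REFUSAL
    response = generate(prompt)
    # Second check: is the prompt + response pair unsafe?
    if score(prompt, response) >= threshold:
        return REFUSAL
    return response

# Stand-ins so the sketch is runnable without downloading the model.
def fake_score(prompt, response=None):
    return 0.9 if "bomb" in prompt.lower() else 0.1

def fake_generate(prompt):
    return f"Echo: {prompt}"

print(guarded_reply("how to make a bomb?", fake_generate, fake_score))
print(guarded_reply("how to bake bread?", fake_generate, fake_score))
```

With the real model, `score` could be `lambda p, r: predict(model, p, r)`. The right threshold depends on how you want to trade false alarms against missed unsafe content, so treat 0.5 as a starting point rather than a recommendation.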

Troubleshooting

If you run into issues during implementation, check the following:

- Ensure that Transformers and PyTorch are both installed and reasonably up to date.

- Make sure your GPU drivers are up to date so that PyTorch can detect and use the GPU.

- If you encounter memory issues, try shortening very long inputs, reducing the batch size if you score several conversations at once, or running the model on a CPU.
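For the first check above, a small script can report which versions are installed. This sketch uses only the standard library (Python 3.8 or later); the helper name `installed_version` is an assumption for illustration.

```python
from importlib.metadata import PackageNotFoundError, version

def installed_version(package):
    """Return the installed version string, or None if the package is missing."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None

# Report the two packages this article relies on.
for name in ("transformers", "torch"):
    found = installed_version(name)
    print(f"{name}: {found if found else 'not installed'}")
```

If either package is reported as not installed, rerun the pip command from the setup section.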

Conclusion

By using the HarmAug model as a guard, you can screen both prompts and responses for unsafe content and reduce the risks associated with unsafe prompts and jailbreak attempts. Guard models like this one add a practical safety layer to applications built on LLMs.
