Image retrieval has revolutionized how we search for and manage visual data. One cutting-edge technique in this field is hierarchy-based semantic image embeddings, which use a class hierarchy to place semantically similar images close together. This guide explains how to implement the technique, how to troubleshoot common issues, and which pre-trained models are available for different datasets.

1. What are Hierarchy-based Semantic Image Embeddings?

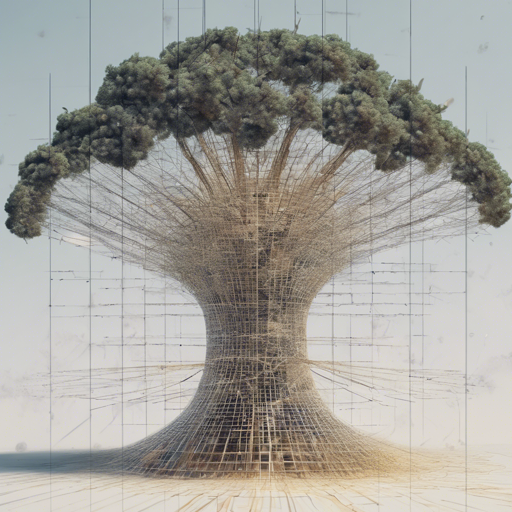

In a nutshell, hierarchy-based semantic image embeddings map images into a space where distances reflect the semantic relationships between their classes, as encoded in a class hierarchy, rather than visual appearance alone. Imagine a large library where books are shelved not only by title or author but also by theme: when you search for a fantasy novel, you find not just similar titles but also books from related genres that feature magic. Hierarchy-based embeddings do the same for images.

- Visual vs. Semantic Similarity: An orange might look similar to an orange bowl, yet the two belong to semantically different categories (a fruit and a container). Hierarchy-based embeddings address this discrepancy by grouping images by meaning rather than by appearance.

- High Distance Between Classes: Semantically unrelated classes are placed far apart in the embedding space, so images from entirely different categories are clearly separated, which improves retrieval accuracy.
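To make "semantic similarity" concrete, here is one way to derive it from a class hierarchy. This is a hedged illustration, assuming a tree-shaped taxonomy: $h(n)$ is the height of node $n$ (length of the longest path from $n$ down to a leaf), $\operatorname{lca}(u, v)$ is the lowest common ancestor of classes $u$ and $v$, and each class $u$ receives a unit-length embedding $\varphi(u)$ whose dot products reproduce the similarities. The worked example uses a hypothetical hierarchy in which `root` has the children `fruit` (with leaves `orange` and `lemon`) and `container` (with leaf `bowl`), so the leaves have height 0, `fruit` and `container` height 1, and `root` height 2.

```latex
s(u, v) = 1 - \frac{h\big(\operatorname{lca}(u, v)\big)}{\max_{w} h(w)},
\qquad
\varphi(u)^\top \varphi(v) = s(u, v), \qquad \lVert \varphi(u) \rVert = 1

s(\text{orange}, \text{lemon}) = 1 - \tfrac{1}{2} = 0.5,
\qquad
s(\text{orange}, \text{bowl}) = 1 - \tfrac{2}{2} = 0
```

Under this definition the orange and the bowl, which only meet at the root, get similarity 0 however alike they look, while sibling classes under `fruit` stay close.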

2. How to Learn Semantic Image Embeddings?

The process can be broken down into two main steps:

- Computing target class embeddings based on a defined hierarchy.

- Training a Convolutional Neural Network (CNN) to map your images to those embeddings.

2.1 Computing Target Class Embeddings

First, you need a class taxonomy that describes how your image classes relate to each other. For example, to compute the target embeddings for CIFAR-100 from the CIFAR hierarchy file, use the following command:

```shell
python compute_class_embedding.py --hierarchy Cifar-Hierarchy/cifar.parent-child.txt --out embeddings/cifar100.unitsphere.pickle
```

This command reads the parent-child relationships from your hierarchy file and computes one embedding per class, placing the classes in a semantic space where related classes lie close together.
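To illustrate what such a step can do, here is a minimal sketch, not the repository's implementation. It assumes a tree-shaped hierarchy given as whitespace-separated `parent child` lines, measures class similarity as 1 minus the normalized height of the lowest common ancestor, and then obtains unit-length class embeddings whose dot products equal those similarities via an eigendecomposition of the similarity matrix. The eigendecomposition is a swapped-in technique chosen for brevity; `compute_class_embedding.py` may use a different algorithm, and all function names here are hypothetical.

```python
import numpy as np


def load_parent_child(lines):
    """Parse 'parent child' pairs (assumed format: one whitespace-separated pair per line)."""
    parents, children = {}, {}
    for line in lines:
        line = line.strip()
        if not line:
            continue
        parent, child = line.split()
        parents[child] = parent  # sketch assumes a tree: one parent per node
        children.setdefault(parent, []).append(child)
    return parents, children


def node_heights(children, root):
    """Height of every node: length of the longest path down to a leaf."""
    heights = {}

    def visit(node):
        kids = children.get(node, [])
        heights[node] = 0 if not kids else 1 + max(visit(k) for k in kids)
        return heights[node]

    visit(root)
    return heights


def path_to_root(node, parents):
    """Ancestors of a node, ordered from the node itself up to the root."""
    path = [node]
    while path[-1] in parents:
        path.append(parents[path[-1]])
    return path


def class_embeddings(lines):
    """Return (leaf classes, similarity matrix, unit-norm embeddings)."""
    parents, children = load_parent_child(lines)
    roots = [n for n in children if n not in parents]
    assert len(roots) == 1, "this sketch assumes a single root"
    heights = node_heights(children, roots[0])
    max_height = heights[roots[0]]
    leaves = sorted(n for n, h in heights.items() if h == 0)
    paths = {c: path_to_root(c, parents) for c in leaves}

    n = len(leaves)
    sim = np.empty((n, n))
    for i, a in enumerate(leaves):
        ancestors_a = set(paths[a])
        for j, b in enumerate(leaves):
            # The first ancestor of b that is also an ancestor of a is the LCA.
            lca = next(x for x in paths[b] if x in ancestors_a)
            sim[i, j] = 1.0 - heights[lca] / max_height

    # sim is positive semi-definite for tree-based similarities, so
    # sim = V diag(w) V^T and the rows of V * sqrt(w) reproduce it exactly.
    eigval, eigvec = np.linalg.eigh(sim)
    eigval = np.clip(eigval, 0.0, None)  # remove tiny negative round-off
    emb = eigvec * np.sqrt(eigval)
    return leaves, sim, emb
```

For example, `class_embeddings(["root fruit", "root vehicle", "fruit apple", "fruit orange", "vehicle bus"])` yields three 3-dimensional unit vectors in which `apple` and `orange` have dot product 0.5 and `bus` is orthogonal to both.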

2.2 Learning Image Embeddings

Once you have your target embeddings, the next step is to train the CNN. For example, using CIFAR-100:

```shell
python learn_image_embeddings.py \
    --dataset CIFAR-100 \
    --data_root /path/to/your/cifar/directory \
    --embedding embeddings/cifar100.unitsphere.pickle \
    --architecture resnet-110-wfc \
    --cls_weight 0.1 \
    --model_dump cifar100-embedding.model.h5 \
    --feature_dump cifar100-features.pickle
```

The trained model is written to the file given by --model_dump, and the image features it produces to --feature_dump; the evaluation step reads these features back via --feat.

Think of training your CNN like a chef honing their skills to create the perfect dish: different ingredients (data) must be combined in the right way (architecture) to produce a delightful outcome (the embedding model).
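What the network is optimized for can be sketched in NumPy. This is an assumption rather than a description of `learn_image_embeddings.py`: we suppose that the network's output is L2-normalized and pulled toward the target embedding of the image's class (loss = 1 minus their dot product), and that `--cls_weight` scales an auxiliary softmax cross-entropy term, as the "embed+cls" part of the checkpoint names hints. The function names are hypothetical.

```python
import numpy as np


def l2_normalize(x, eps=1e-12):
    """Scale each row to unit length (guarding against division by zero)."""
    return x / np.maximum(np.linalg.norm(x, axis=1, keepdims=True), eps)


def softmax(logits):
    """Row-wise softmax, shifted by the row maximum for numerical stability."""
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def combined_loss(features, logits, labels, class_embeddings, cls_weight=0.1):
    """Embedding loss plus weighted classification loss, averaged over the batch.

    features:         (batch, dim) raw network outputs for the embedding head
    logits:           (batch, num_classes) outputs of an assumed classification head
    labels:           (batch,) integer class indices
    class_embeddings: (num_classes, dim) unit-norm target embeddings
    """
    pred = l2_normalize(features)
    target = class_embeddings[labels]
    # 0 when the prediction points exactly at its class embedding.
    embed_loss = np.mean(1.0 - np.sum(pred * target, axis=1))
    probs = softmax(logits)
    cross_entropy = -np.mean(np.log(probs[np.arange(len(labels)), labels] + 1e-12))
    return embed_loss + cls_weight * cross_entropy
```

With a small weight such as 0.1, the embedding term dominates, so the learned space is shaped mainly by the hierarchy-derived targets while the classifier adds a discriminative signal.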

2.3 Evaluation

After training, you can evaluate your model with the provided script:

```shell
python evaluate_retrieval.py \
    --dataset CIFAR-100 \
    --data_root /path/to/your/cifar/directory \
    --hierarchy Cifar-Hierarchy/cifar.parent-child.txt \
    --classes_from embeddings/cifar100.unitsphere.pickle \
    --feat cifar100-features.pickle \
    --label "Semantic Embeddings"
```

This evaluation measures the retrieval performance of your model based on the saved features and the class hierarchy. The value of --label must be quoted because it contains a space; otherwise the shell passes "Embeddings" as a separate argument.
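To show what retrieval evaluation with a hierarchy can look like, here is a minimal sketch under stated assumptions: images are ranked by cosine similarity to the query's feature vector, and each ranking is scored with a hierarchical precision at k, i.e. the summed class similarity of the top-k results to the query class divided by the best sum achievable from the database. `evaluate_retrieval.py` may compute different or additional metrics; the function names and the `class_sim` matrix are hypothetical.

```python
import numpy as np


def retrieve(query, database, k):
    """Indices of the k database rows with the highest cosine similarity to query."""
    q = query / np.linalg.norm(query)
    db = database / np.linalg.norm(database, axis=1, keepdims=True)
    scores = db @ q
    return np.argsort(-scores)[:k]


def hierarchical_precision_at_k(query_label, retrieved_labels, db_labels, class_sim):
    """Semantic similarity gained by the top-k results, relative to the ideal ranking.

    class_sim[a, b] is the hierarchy-based similarity between classes a and b
    (1 for identical classes, lower for more distant ones).
    """
    k = len(retrieved_labels)
    gained = class_sim[query_label, retrieved_labels].sum()
    # Best possible: the k database images most similar to the query class.
    best = np.sort(class_sim[query_label, db_labels])[::-1][:k].sum()
    return gained / best
```

Unlike plain precision, a result from a closely related class still earns partial credit here, which is what makes the metric suitable for judging semantic retrieval.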

3. Requirements

Ensure you have the following software and packages installed:

- Python >= 3.5

- NumPy

- NumExpr

- Keras == 2.2

- TensorFlow >= 1.8 or 2.0

- Scikit-learn

- SciPy

- Pillow

- Matplotlib

4. Pre-trained Models

Pre-trained models let you skip the costly training step entirely. They are also useful as starting points for fine-tuning on your own data.

4.1 Download Links

Here are some pre-trained models you might find useful:

- CIFAR-100: [ResNet-110-wfc](https://github.com/cvjena/semantic-embeddings/releases/download/v1.0.0/cifar_unitsphere-embed+cls_resnet-110-wfc.model.h5)

- NABirds: [ResNet-50 (fine-tuned)](https://github.com/cvjena/semantic-embeddings/releases/download/v1.0.0/nab_unitsphere-embed+cls_rn50_finetuned.model.h5)

- ILSVRC: [ResNet-50](https://github.com/cvjena/semantic-embeddings/releases/download/v1.1.0/imagenet_unitsphere-embed+cls_rn50.model.h5)
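If you prefer to fetch a checkpoint from a script, a minimal sketch using only the Python standard library could look like this. The URLs are copied from the list above; the short dictionary keys and the helper names are our own, hypothetical labels.

```python
import os
import urllib.parse
import urllib.request

# Release URLs from the list above; the keys are illustrative labels only.
PRETRAINED = {
    "cifar100-resnet110wfc": "https://github.com/cvjena/semantic-embeddings/releases/download/v1.0.0/cifar_unitsphere-embed+cls_resnet-110-wfc.model.h5",
    "nabirds-resnet50-finetuned": "https://github.com/cvjena/semantic-embeddings/releases/download/v1.0.0/nab_unitsphere-embed+cls_rn50_finetuned.model.h5",
    "ilsvrc-resnet50": "https://github.com/cvjena/semantic-embeddings/releases/download/v1.1.0/imagenet_unitsphere-embed+cls_rn50.model.h5",
}


def local_path(url, dest_dir="."):
    """Map a release URL to a local path that keeps the original file name."""
    filename = os.path.basename(urllib.parse.urlparse(url).path)
    return os.path.join(dest_dir, filename)


def download(key, dest_dir="."):
    """Download a checkpoint unless it already exists locally; return its path."""
    url = PRETRAINED[key]
    path = local_path(url, dest_dir)
    if not os.path.exists(path):
        os.makedirs(dest_dir, exist_ok=True)
        urllib.request.urlretrieve(url, path)
    return path
```

For instance, `download("cifar100-resnet110wfc", "models")` stores `cifar_unitsphere-embed+cls_resnet-110-wfc.model.h5` under `models/`, keeping the file name that the loading code in the next section expects.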

4.2 Troubleshooting

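The most common issue when loading a pre-trained model is an "unknown opcode" error. It typically occurs because Keras stores the code of Lambda layers as Python bytecode, which is not portable across Python versions. In that case, build the architecture in code and load only the weights:

```python
import keras

import utils  # helper module from the semantic-embeddings repository
# Not used below; presumably needed to attach the classification head
# that "embed+cls" checkpoints contain.
from learn_image_embeddings import cls_model

# Rebuild the network in code instead of deserializing it from the .h5 file.
# 100 matches the CIFAR-100 setting; the architecture name must be a string.
model = utils.build_network(100, 'resnet-110-wfc')

# Load only the trained weights into the freshly built architecture.
model.load_weights('cifar_unitsphere-embed+cls_resnet-110-wfc.model.h5')
```

If any other issues arise, check your hierarchy files and data formats for correctness.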

For more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.

Conclusion

Hierarchy-based image embeddings offer a robust method for improving semantic image retrieval. By encoding the relationships from a class hierarchy directly in the embedding space, they return results that are related to the query not only visually but also semantically.

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.