If you’re venturing into the realm of neural networks and optimization, you may have come across the terms INT8 quantization and the Intel® Neural Compressor. This guide explains post-training dynamic quantization and shows how to load and evaluate an INT8 PyTorch model based on T5, using Intel® Neural Compressor together with the Hugging Face libraries.

Understanding Post-Training Dynamic Quantization

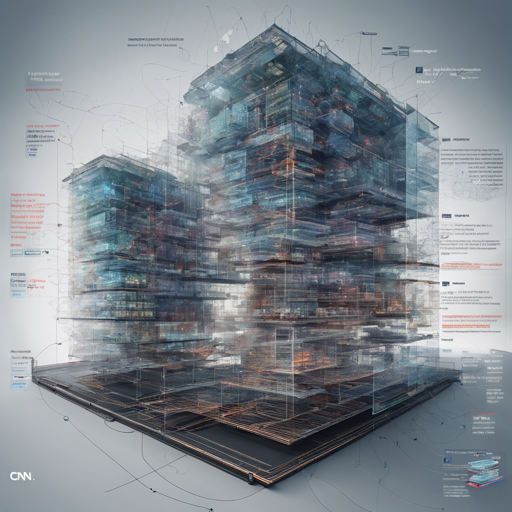

Before we dive into the implementation details, let’s clarify what post-training dynamic quantization means. Imagine you have a well-baked cake that’s been perfectly decorated. The only thing left to do is to minimize its size for transport without compromising much on its taste. Similarly, post-training dynamic quantization takes an already-trained model and converts its weights from FP32 (32-bit floating point) to INT8 (8-bit integer) without any retraining. Activations are quantized on the fly at inference time, which is what makes the approach "dynamic". The result is a smaller model and more efficient inference on hardware that supports lower bit-width operations.
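To make the idea concrete, here is a minimal pure-Python sketch of the arithmetic involved. It is an illustration only, not how Intel® Neural Compressor or PyTorch implement quantization internally; the helper names `quantize` and `dynamic_int8_dot` and the sample values are hypothetical, and a simple symmetric scheme with one scale per tensor is assumed.

```python
def quantize(values, num_bits=8):
    """Symmetric quantization: map floats to signed integers plus one scale."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for INT8
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def dynamic_int8_dot(q_weights, w_scale, activations):
    """Dot product with pre-quantized weights and activations quantized on the fly."""
    # The activation scale is derived from the actual input at inference time;
    # this runtime step is what makes the scheme "dynamic".
    q_acts, a_scale = quantize(activations)
    int_acc = sum(w * a for w, a in zip(q_weights, q_acts))  # integer arithmetic
    return int_acc * w_scale * a_scale  # rescale the result back to float


# Hypothetical sample values, chosen only for illustration.
weights = [0.42, -1.27, 0.08, 0.91]
activations = [1.5, -0.25, 3.0, 0.75]

q_weights, w_scale = quantize(weights)  # done once, after training
approx = dynamic_int8_dot(q_weights, w_scale, activations)
exact = sum(w * a for w, a in zip(weights, activations))
print(f"FP32 result: {exact:.4f}, INT8 result: {approx:.4f}")
```

The two printed results should be close but not identical: the small gap is the rounding error introduced by squeezing each value into 8 bits.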

What You’ll Need

- Python environment set up with PyTorch installed.

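- Intel® Neural Compressor installed (for example from GitHub), along with Hugging Face Optimum Intel, which provides the INCModelForSeq2SeqLM class used below.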

- The model and its weights available from Hugging Face Model Hub.

Step-by-step Implementation

The following snippet loads the already-quantized INT8 model from the Hugging Face Model Hub:

from optimum.intel import INCModelForSeq2SeqLM

# Hub ID of the quantized checkpoint, in the form organization/model-name
model_id = 'Intel/t5-base-cnn-dm-int8-dynamic'

int8_model = INCModelForSeq2SeqLM.from_pretrained(model_id)

Evaluating Your Model

Once you have your model loaded, you might be curious about how well it performs relative to its FP32 counterpart. Here’s a quick summary of the evaluation:

- Accuracy (eval-rougeLsum):
  - INT8: 36.5661
  - FP32: 36.5959

- Model Size:
  - INT8: 326M
  - FP32: 892M
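To put these numbers in perspective, the short calculation below derives the size reduction and the relative accuracy drop from the figures reported above. The variable names are illustrative, and the sizes are used exactly as reported, without assuming a unit beyond "M".

```python
# Figures reported in the evaluation summary above.
fp32_rouge, int8_rouge = 36.5959, 36.5661  # eval-rougeLsum
fp32_size, int8_size = 892, 326            # model size, as reported ("M")

size_reduction = 1 - int8_size / fp32_size
compression_ratio = fp32_size / int8_size
relative_drop = (fp32_rouge - int8_rouge) / fp32_rouge

print(f"Size reduction:         {size_reduction:.1%}")
print(f"Compression ratio:      {compression_ratio:.2f}x")
print(f"Relative accuracy drop: {relative_drop:.3%}")
```

In other words, the INT8 model is a bit under two-thirds smaller than its FP32 counterpart, while the relative loss in eval-rougeLsum stays below 0.1%.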

Troubleshooting

If you encounter issues during the implementation, here are some troubleshooting ideas:

- Ensure that your Python environment is correctly set up with all required dependencies before running your code.

- Check for any compatibility issues between the Intel® Neural Compressor library and your version of PyTorch.

- If the model fails to load, verify that your model_id is correctly specified, ensuring there are no typos.
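The first two checks can be partly automated. The sketch below uses only the Python standard library to report which of the relevant packages are installed and in which version. The distribution names in `REQUIRED` are assumed to match the names published on PyPI, and `installed_versions` is a hypothetical helper; adjust both to your own setup.

```python
from importlib.metadata import PackageNotFoundError, version

# Distribution names as assumed to be published on PyPI; adjust if needed.
REQUIRED = ["torch", "transformers", "neural-compressor", "optimum-intel"]


def installed_versions(packages):
    """Return a dict mapping each package to its version, or None if missing."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


for name, ver in installed_versions(REQUIRED).items():
    print(f"{name:20s} {ver or 'NOT INSTALLED'}")
```

Comparing the reported versions against the compatibility notes in each project's documentation is usually the quickest way to spot a mismatch.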


Conclusion

In conclusion, post-training dynamic quantization with Intel® Neural Compressor and Hugging Face tools shrinks this T5 model from 892M to 326M, while eval-rougeLsum falls only from 36.5959 to 36.5961 minus a rounding of 0.03 points (36.5661). That is a small accuracy cost for a much lighter model that is better suited to hardware with INT8 support.
