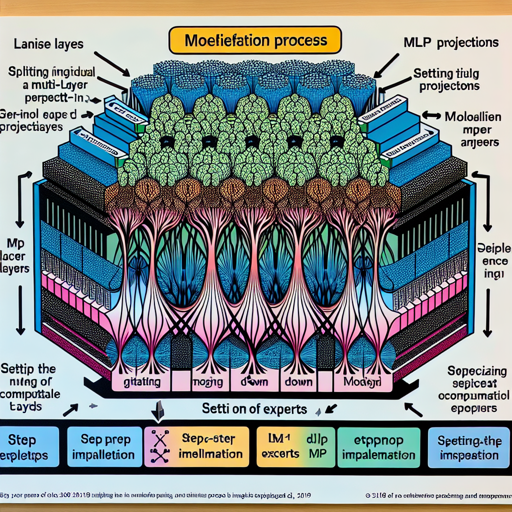

MoEification is a technique for converting a dense language model into a Mixture-of-Experts (MoE) model by splitting each of its MLP layers into multiple experts. This blog walks you through implementing MoEification, explains why it matters, and offers troubleshooting tips along the way.

What is MoEification?

MoEification divides the MLP projections of a language model (the gate, up, and down projections) into separate experts, each holding a slice of the original layer. The aim is to let the model spend different amounts of computation on different tokens, depending on how difficult each one is to predict.
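
To make the split concrete, here is a minimal NumPy sketch. It assumes a Llama-style SwiGLU MLP, `down(silu(gate(x)) * up(x))`, and assumes that "splitting" means cutting the intermediate dimension into equal slices, one per expert. The helper `split_into_experts` and the toy sizes are hypothetical. Because each intermediate neuron contributes independently to the down projection, the expert outputs sum back to the dense output.

```python
import numpy as np

def silu(z):
    return z / (1.0 + np.exp(-z))

def dense_mlp(x, w_gate, w_up, w_down):
    # SwiGLU MLP: down(silu(gate(x)) * up(x))
    return w_down @ (silu(w_gate @ x) * (w_up @ x))

def split_into_experts(w_gate, w_up, w_down, num_experts):
    """Hypothetical helper: slice the intermediate dimension into equal chunks."""
    ffn_dim = w_gate.shape[0]
    assert ffn_dim % num_experts == 0, "intermediate size must divide evenly"
    chunk = ffn_dim // num_experts
    experts = []
    for i in range(num_experts):
        rows = slice(i * chunk, (i + 1) * chunk)
        # gate/up keep a block of rows; down keeps the matching block of columns
        experts.append((w_gate[rows], w_up[rows], w_down[:, rows]))
    return experts

rng = np.random.default_rng(0)
d_model, d_ff, n_experts = 16, 64, 8
w_gate = rng.normal(size=(d_ff, d_model))
w_up = rng.normal(size=(d_ff, d_model))
w_down = rng.normal(size=(d_model, d_ff))
x = rng.normal(size=d_model)

experts = split_into_experts(w_gate, w_up, w_down, n_experts)
summed = sum(dense_mlp(x, g, u, d) for g, u, d in experts)
print(np.allclose(summed, dense_mlp(x, w_gate, w_up, w_down)))  # True
```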

Step-by-Step Guide to Implementing MoEification

- Set the Number of Experts: Decide how many experts you want to create. In our case, we’ll use 8 experts.

- Split the MLP Projections: Divide each MLP layer's projections into the chosen number of experts, so that each expert holds an equal slice of the original layer's neurons.

- Scale the Down-Projection Weights: Multiply the down-projection weights by the total number of experts. At the start, the experts' outputs are averaged (each weighted 1/N), so this scaling makes the averaged output match the original dense layer when all experts are active, keeping the model coherent.

- Initialize the Router Layers with Zeros: Start every router weight at zero rather than with random values. With all router logits equal, every expert receives the same weight, so expert usage is balanced at the outset and no expert is favored by chance.
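
The steps above can be sketched end to end as follows. This is an illustration under stated assumptions, not the original implementation: it assumes a SwiGLU MLP, a linear router followed by a softmax, and Mixtral-style top-k selection with the selected weights renormalized. `moeify` and `moe_forward` are hypothetical names. With a zero router every expert gets weight 1/N, and the N-fold down-projection scaling cancels it, so activating all 8 experts reproduces the dense layer.

```python
import numpy as np

def silu(z):
    return z / (1.0 + np.exp(-z))

def swiglu(x, w_gate, w_up, w_down):
    # Llama-style MLP: down(silu(gate(x)) * up(x))
    return w_down @ (silu(w_gate @ x) * (w_up @ x))

def moeify(w_gate, w_up, w_down, num_experts):
    """Hypothetical helper: turn one dense SwiGLU MLP into experts plus a router."""
    chunk = w_gate.shape[0] // num_experts
    experts = []
    for i in range(num_experts):
        rows = slice(i * chunk, (i + 1) * chunk)
        # Each expert keeps one slice of the intermediate dimension; its
        # down projection is multiplied by the number of experts.
        experts.append((w_gate[rows], w_up[rows], w_down[:, rows] * num_experts))
    # Zero-initialized router: all logits are 0, so softmax weights are uniform.
    router = np.zeros((num_experts, w_gate.shape[1]))
    return experts, router

def moe_forward(x, experts, router, top_k):
    logits = router @ x
    chosen = np.argsort(logits)[::-1][:top_k]  # highest-scoring experts
    weights = np.exp(logits[chosen] - logits[chosen].max())
    weights /= weights.sum()  # softmax renormalized over the chosen experts
    return sum(w * swiglu(x, *experts[i]) for w, i in zip(weights, chosen))

rng = np.random.default_rng(0)
d_model, d_ff, n_experts = 16, 64, 8
w_gate = rng.normal(size=(d_ff, d_model))
w_up = rng.normal(size=(d_ff, d_model))
w_down = rng.normal(size=(d_model, d_ff))
x = rng.normal(size=d_model)

experts, router = moeify(w_gate, w_up, w_down, n_experts)
dense = swiglu(x, w_gate, w_up, w_down)
print(np.allclose(moe_forward(x, experts, router, top_k=8), dense))  # True
```

Printing `True` confirms that, in this sketch, the converted layer is numerically equivalent to the original when all experts are active, which is the starting point the zero router and the down-projection scaling are meant to guarantee.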

Understanding the Effects through Analogy

Think of a language model as a restaurant with a team of chefs, each specializing in different dishes (the experts). With all 8 chefs in the kitchen, they can prepare a wide variety of meals at once, ensuring quick, high-quality service (high coherence of output). If only 4 chefs are working, they become overwhelmed and quality drops significantly as they struggle to cover the whole menu (low coherence). A freshly MoEified model behaves the same way: it stays coherent with all 8 experts active and degrades when fewer are used, until it has been trained to work with fewer.
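
The analogy can be checked numerically. This NumPy sketch assumes a SwiGLU MLP sliced along its intermediate dimension with the down projection scaled by N = 8. A zero router gives every expert the same score, so under Mixtral-style renormalized top-k routing (an assumption) each of the k active experts gets weight 1/k. The layer then returns N/k times the sum of only k slices, which equals the dense output only when k = N. Which experts win the tie is arbitrary; the first k are used here as an assumption.

```python
import numpy as np

def silu(z):
    return z / (1.0 + np.exp(-z))

def swiglu(x, w_gate, w_up, w_down):
    return w_down @ (silu(w_gate @ x) * (w_up @ x))

rng = np.random.default_rng(0)
d_model, d_ff, n = 16, 64, 8
w_gate = rng.normal(size=(d_ff, d_model))
w_up = rng.normal(size=(d_ff, d_model))
w_down = rng.normal(size=(d_model, d_ff))
x = rng.normal(size=d_model)
dense = swiglu(x, w_gate, w_up, w_down)

chunk = d_ff // n
experts = [
    # One slice of the intermediate dimension per expert, down projection scaled by n.
    (w_gate[i * chunk:(i + 1) * chunk], w_up[i * chunk:(i + 1) * chunk],
     n * w_down[:, i * chunk:(i + 1) * chunk])
    for i in range(n)
]

errors = {}
for k in (8, 4, 2, 1):
    # Zero router + renormalized top-k: each of the first k experts gets weight 1/k.
    out = sum(swiglu(x, *experts[i]) / k for i in range(k))
    errors[k] = float(np.linalg.norm(out - dense) / np.linalg.norm(dense))
    print(f"{k} active experts: relative error vs. dense = {errors[k]:.3f}")
```

Only the k = 8 row shows an error near zero; the other rows show how far the layer's output drifts when fewer "chefs" are working and the model has not yet been trained for it.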

Why Implement MoEification?

MoEification makes it possible to train the model to work with a varying number of active experts, chosen according to how difficult each token is to predict. If more computation is reserved for challenging tokens and less is spent on easy ones, resource usage can be optimized, ideally without sacrificing performance. The hoped-for result is more efficient token prediction.
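
How the number of experts per token would be chosen is not specified here. One possible rule, swapped in purely as an illustration, is top-p (threshold) routing: keep the fewest experts whose router probabilities add up to at least `p`. A confident router (one dominant logit) then uses few experts, while an uncertain one uses many. `experts_for_token` and the threshold `p = 0.9` are hypothetical.

```python
import numpy as np

def experts_for_token(router_logits, p=0.9):
    """Hypothetical top-p routing: pick the fewest experts whose softmax mass reaches p."""
    probs = np.exp(router_logits - router_logits.max())
    probs /= probs.sum()
    order = np.argsort(probs)[::-1]  # experts from most to least likely
    cumulative = np.cumsum(probs[order])
    k = min(int(np.searchsorted(cumulative, p)) + 1, len(order))
    chosen = order[:k]
    weights = probs[chosen] / probs[chosen].sum()  # renormalize over chosen experts
    return chosen, weights

# An "easy" token: the router strongly prefers expert 0.
easy, _ = experts_for_token(np.array([5.0, 0, 0, 0, 0, 0, 0, 0]))
# A freshly MoEified model: zero router logits, so all experts look equally good.
uniform, _ = experts_for_token(np.zeros(8))
print(len(easy), len(uniform))  # 1 8
```

With a zero-initialized router, this rule activates all 8 experts, which is exactly the configuration in which the converted model is coherent; fewer experts would only be selected once training makes the router's scores more decisive.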

Troubleshooting Tips

- Some loss of coherence with fewer than all 8 experts active is expected before training. If the model is incoherent or unpredictable even with all experts active, revisit the initialization of your router layers: they must start at zero so that expert usage is balanced.

- Check the scaling of your parameters. If the down-projection weights are not multiplied by the number of experts, the averaged output is too small by that factor (1/8 of the original with 8 experts), which leads to imbalanced outputs.

- If you encounter performance issues, experiment with different expert counts and visualize the outputs with various configurations to understand which setups yield the best results.
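
The first two checks can be automated. The sketch below assumes a SwiGLU MLP sliced along its intermediate dimension and a softmax router; `check_conversion` and `make_experts` are hypothetical helpers. It runs the converted layer with all experts active and compares the result with the dense layer. `output_scale` is the least-squares factor relating the two outputs: about 1.0 for a correct conversion and about 1/N when the down projection was not scaled. A nonzero `max_weight_gap` means the router is not giving every expert the same weight.

```python
import numpy as np

def silu(z):
    return z / (1.0 + np.exp(-z))

def swiglu(x, w_gate, w_up, w_down):
    return w_down @ (silu(w_gate @ x) * (w_up @ x))

def check_conversion(x, dense_weights, experts, router):
    """Hypothetical check: run every expert and compare against the dense MLP."""
    logits = router @ x
    weights = np.exp(logits - logits.max())
    weights /= weights.sum()  # softmax over all experts
    moe_out = sum(w * swiglu(x, *e) for w, e in zip(weights, experts))
    dense_out = swiglu(x, *dense_weights)
    return {
        "router_is_zero": bool(np.all(router == 0)),
        # 0.0 when every expert gets the same weight
        "max_weight_gap": float(weights.max() - weights.min()),
        # Least-squares factor s in moe_out ~ s * dense_out: ~1.0 if correct,
        # ~1/N if the down projection was not multiplied by N.
        "output_scale": float(moe_out @ dense_out / (dense_out @ dense_out)),
        "matches_dense": bool(np.allclose(moe_out, dense_out)),
    }

rng = np.random.default_rng(0)
d_model, d_ff, n = 16, 64, 8
w_gate = rng.normal(size=(d_ff, d_model))
w_up = rng.normal(size=(d_ff, d_model))
w_down = rng.normal(size=(d_model, d_ff))
x = rng.normal(size=d_model)
router = np.zeros((n, d_model))
chunk = d_ff // n

def make_experts(down_scale):
    # Slice the intermediate dimension; multiply each down-projection slice by down_scale.
    return [(w_gate[i * chunk:(i + 1) * chunk], w_up[i * chunk:(i + 1) * chunk],
             down_scale * w_down[:, i * chunk:(i + 1) * chunk]) for i in range(n)]

dense_weights = (w_gate, w_up, w_down)
good = check_conversion(x, dense_weights, make_experts(n), router)
bad = check_conversion(x, dense_weights, make_experts(1), router)  # forgot the scaling
print(good["matches_dense"], round(good["output_scale"], 3))  # True 1.0
print(bad["matches_dense"], round(bad["output_scale"], 3))    # False 0.125
```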


Conclusion

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.