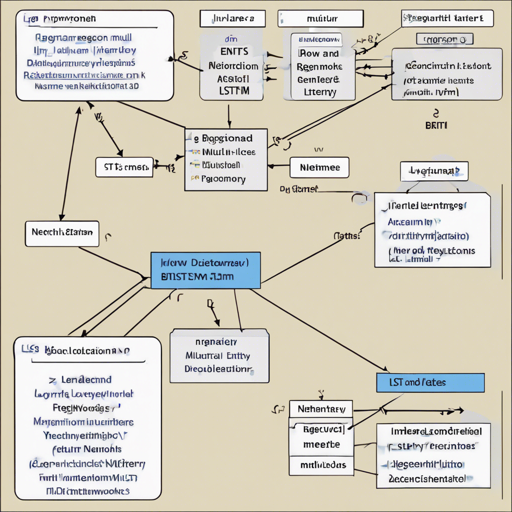

In this blog post, we will explore how to implement Named Entity Recognition (NER) using a two-layer bidirectional LSTM network. The model uses deep learning to classify named entities such as people, organizations, and locations in a text corpus. We will guide you through the entire process, from setting up your environment to running the model and analyzing results.

Getting Started

Before diving into the details, make sure you have the following prerequisites set up in your development environment:

- TensorFlow: An essential framework for deep learning.

- Gensim: For word embedding models.

- Hindi Language Support: Optional; needed only if you are processing Hindi text.

Generating the Embedding Model

The foundation of our NER model is an embedding model that transforms sentences into a format suitable for our neural network. Let’s break it down:

Embedding Types

- Word2Vec: Use `wordvec_model.py` to create your model. You can either train a new model with a corpus or load an existing one from a gensim binary file.

- GloVe: Use `glove_model.py` to generate your embedding model. Supply your corpus and obtain vectors from a GloVe vector file.

- LSTM: Use `rnnvec_model.py`. This requires a corpus to train.

- Hindi Support: Convert your Hindi corpus using `hindi_util.py`.

Preparing Inputs

With your embedding models in place, you need to format your data for the model:

- Use `resize_input.py` to adjust your dataset to a maximum sentence length.

- Use the trained embedding model along with either `get_conll_embeddings.py` or `get_icon_embeddings.py` to prepare and pickle your inputs.
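The two steps above can be sketched in a few lines of plain Python. This is only an illustration of the idea, not the code in `resize_input.py` or the embedding scripts: the `resize` and `embed` helpers, the `<pad>` token, and the toy three-dimensional vectors are all assumptions made for the example.

```python
import pickle

PAD = "<pad>"  # hypothetical padding token, not taken from the project


def resize(tokens, max_len, pad=PAD):
    """Truncate or right-pad a token list to exactly max_len items."""
    return tokens[:max_len] + [pad] * max(0, max_len - len(tokens))


def embed(tokens, vectors, dim):
    """Look up each token; padding and unknown words map to a zero vector."""
    zero = [0.0] * dim
    return [vectors.get(tok, zero) for tok in tokens]


# Toy 3-dimensional embeddings standing in for a trained embedding model.
vectors = {
    "ravi": [0.1, 0.2, 0.3],
    "visited": [0.0, 0.5, 0.1],
    "delhi": [0.9, 0.1, 0.4],
}
sentences = [["ravi", "visited", "delhi", "yesterday", "evening"], ["delhi"]]

max_len = 4
inputs = [embed(resize(s, max_len), vectors, dim=3) for s in sentences]

# Serialize the prepared inputs; a real pipeline would write this to a file.
blob = pickle.dumps(inputs)
restored = pickle.loads(blob)
```

After this runs, every sentence is a list of exactly `max_len` vectors, so the whole dataset has a uniform shape that a recurrent network can consume in batches.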

Training the Deep Neural Network

Now it’s time for the exciting part: training your model. Here’s how it works:

Imagine you are teaching a child to recognize objects. You show them a variety of labeled objects (training data) and correct them as they try to identify each one. How many examples you show at a time and how strongly you correct each mistake are choices you make up front (hyperparameters). The child gradually learns through trial and error until they master the skill. Our training process works similarly:

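- The LSTM acts as our child, learning to classify each word as an entity type (or as not an entity) based on patterns in the input.

- The softmax layer converts the network's output scores into a probability for each entity class.

- The Adam optimizer adapts the step size of each parameter update during training, which usually helps the model converge without extensive manual tuning of the learning rate.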
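To make the role of the softmax layer concrete, here is a minimal sketch in plain Python. The tag set and the example scores are assumptions made for illustration; in the actual model the scores would come from the final LSTM layer for each word.

```python
import math

TAGS = ["O", "PER", "ORG", "LOC"]  # hypothetical tag set for illustration


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_tag(scores):
    """Return the most probable tag and its probability."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return TAGS[best], probs[best]


# Made-up output scores for a single word.
scores = [0.2, 2.5, 0.1, 1.0]
tag, confidence = predict_tag(scores)
```

The highest score wins, and the softmax probability gives a rough measure of how confident the model is in that choice.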

Run `model.py` to start training, and watch the F1 score to follow the model's progress.

Evaluating Results

Keep track of your results. Here’s a quick summary of the evaluation process:

- Use predefined metrics like Precision, Recall, and F1 Score to assess the model’s performance.

- Be mindful of how different embedding sizes impact performance.
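As a rough sketch of how these metrics can be computed for NER, the example below scores predictions at the entity level: a predicted entity counts as correct only if its span and type both match the gold annotation. It assumes BIO-style tags such as `B-PER` and `I-PER`; the function names and sample data are hypothetical, and the project's own evaluation may differ.

```python
def extract_entities(tags):
    """Return a set of (start, end, type) spans from a BIO tag sequence."""
    spans, start, kind = set(), None, None
    for i, tag in enumerate(tags + ["O"]):  # sentinel closes a trailing span
        inside = tag.startswith("I-") and kind == tag[2:]
        if start is not None and not inside:
            spans.add((start, i, kind))
            start, kind = None, None
        if tag.startswith("B-"):
            start, kind = i, tag[2:]
    return spans


def precision_recall_f1(gold, predicted):
    """Entity-level precision, recall, and F1 for one tag sequence."""
    gold_spans = extract_entities(gold)
    pred_spans = extract_entities(predicted)
    correct = len(gold_spans & pred_spans)
    precision = correct / len(pred_spans) if pred_spans else 0.0
    recall = correct / len(gold_spans) if gold_spans else 0.0
    f1 = 2 * precision * recall / (precision + recall) if correct else 0.0
    return precision, recall, f1


gold = ["B-PER", "I-PER", "O", "B-LOC", "O", "B-ORG"]
pred = ["B-PER", "I-PER", "O", "B-ORG", "O", "O"]
p, r, f1 = precision_recall_f1(gold, pred)
```

Here the model finds one of the three gold entities and makes two predictions, so precision is 1/2, recall is 1/3, and F1 is 0.4. Rerunning the same evaluation for each embedding size gives a like-for-like comparison.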

Troubleshooting

While setting up your project, you may run into some issues. Here are some troubleshooting tips:

- If your model isn’t performing well, consider adjusting the hyperparameters noted at the top of `main.py`.

- Ensure your corpus is correctly formatted and free of discrepancies that might confuse the model.

- In case of errors during model training, check the paths for your input files in `input.py`.

Conclusion

By following these steps, you’ll be well on your way to implementing a robust Named Entity Recognition system using deep learning techniques. From generating embedding models to preparing data and training your own LSTM, this guide covers all fundamental aspects essential for success.
