The Swin Transformer is an innovative architecture that adapts the Transformer model for computer vision tasks. If you’re keen on exploring this powerful tool, this guide will walk you through the installation and usage of Swin Transformer in PyTorch. Let’s dive in!

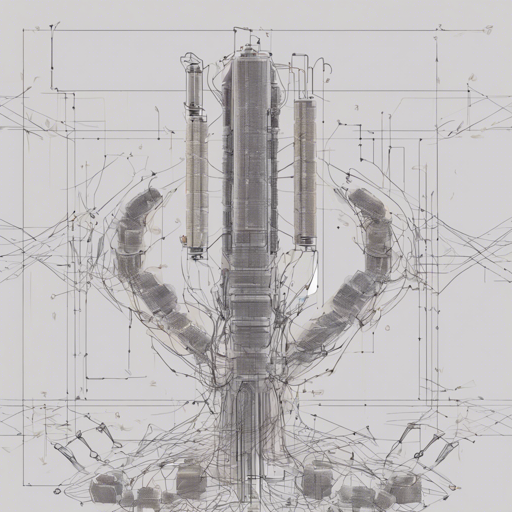

Understanding the Swin Transformer

Think of the Swin Transformer as a Swiss army knife for images. Just as a Swiss army knife packs different tools for different jobs, the Swin Transformer processes an image in several stages, each working at a different scale. Early stages look at fine detail on a high-resolution feature map; later stages downscale that map and capture coarser, more global structure. Because attention is computed within local windows rather than across the whole image, the cost stays manageable at high resolution, which makes the architecture well suited to tasks like image classification and object detection.
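To get a feel for why this design scales to high-resolution images, here is a rough back-of-the-envelope sketch. The Swin Transformer computes self-attention inside fixed-size local windows instead of across every patch at once. The helper below is hypothetical (it is not part of any library) and assumes a 224×224 input, a first-stage downscaling factor of 4, and a window size of 7, the same values used in the usage example in this guide. It only counts query-key pairs and ignores heads, channels, and everything else that affects real runtime.

```python
def attention_pairs(image_size=224, patch_size=4, window_size=7):
    """Count query-key pairs for global vs. windowed attention (illustrative only)."""
    side = image_size // patch_size           # tokens along one side of the feature map
    tokens = side * side                      # total tokens in the feature map
    global_pairs = tokens ** 2                # every token attends to every token
    windows = (side // window_size) ** 2      # number of non-overlapping windows
    tokens_per_window = window_size ** 2
    window_pairs = windows * tokens_per_window ** 2  # attention stays inside each window
    return global_pairs, window_pairs


global_pairs, window_pairs = attention_pairs()
print(f"global attention pairs:   {global_pairs:,}")
print(f"windowed attention pairs: {window_pairs:,}")
print(f"reduction factor:         {global_pairs // window_pairs}x")
```

Under these assumptions, windowed attention needs 64 times fewer pairwise comparisons in the first stage, and the gap widens as the input resolution grows.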

Installation

To start using the Swin Transformer, you need to install the necessary packages. Here’s how:

- Open your terminal or command prompt.

- Run the following command to install the package directly:

pip install swin-transformer-pytorch

If you are working from a cloned copy of the repository instead, install its dependencies:

pip install -r requirements.txt

Usage

Now that you have everything set up, let’s proceed to use the Swin Transformer in your PyTorch project:

import torch
from swin_transformer_pytorch import SwinTransformer

# Initialize the model
net = SwinTransformer(
    hidden_dim=96,
    layers=(2, 2, 6, 2),
    heads=(3, 6, 12, 24),
    channels=3,
    num_classes=3,
    head_dim=32,
    window_size=7,
    downscaling_factors=(4, 2, 2, 2),
    relative_pos_embedding=True,
)

# Create a dummy input tensor: (batch, channels, height, width)
dummy_x = torch.randn(1, 3, 224, 224)
logits = net(dummy_x)  # shape: (1, 3)

print(net)
print(logits)

Parameter Breakdown

Let’s clarify the parameters used in the Swin Transformer:

- hidden_dim: The dimension of hidden representations.

- layers: The number of layers in each stage; each value should be even, because layers alternate between regular and shifted windows.

- heads: The number of attention heads in each stage.

- channels: The number of channels in your input image.

- num_classes: The number of output classes.

- head_dim: Dimension of each attention head.

- window_size: The side length of the local window in which attention is computed; the feature map at each stage, after downscaling, must divide evenly into windows of this size.

- downscaling_factors: Factors by which to downscale the image at each transformer stage.

- relative_pos_embedding: Whether to use learnable relative position embeddings.
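The constraints on layers and window_size are easy to get wrong when you change the input resolution, so it can help to check a configuration on paper before building the model. The sketch below is plain Python and does not call the library; `stage_resolutions` and `check_config` are hypothetical helper names. It assumes square inputs, and it reads the window_size requirement as "each stage's feature-map side length must be divisible by window_size".

```python
def stage_resolutions(image_size, downscaling_factors):
    """Return the feature-map side length after each stage's downscaling."""
    sizes = []
    size = image_size
    for factor in downscaling_factors:
        if size % factor != 0:
            raise ValueError(
                f"side length {size} is not divisible by downscaling factor {factor}"
            )
        size //= factor
        sizes.append(size)
    return sizes


def check_config(image_size, window_size, downscaling_factors, layers):
    """Collect readable problems with a configuration; an empty list means none found."""
    problems = []
    for stage, depth in enumerate(layers, start=1):
        if depth % 2 != 0:
            problems.append(f"stage {stage}: layers={depth} is not even")
    sizes = stage_resolutions(image_size, downscaling_factors)
    for stage, size in enumerate(sizes, start=1):
        if size % window_size != 0:
            problems.append(
                f"stage {stage}: {size}x{size} map is not divisible by window_size={window_size}"
            )
    return problems


# The configuration used in this guide: 224 -> 56 -> 28 -> 14 -> 7
print(stage_resolutions(224, (4, 2, 2, 2)))
print(check_config(224, 7, (4, 2, 2, 2), (2, 2, 6, 2)))

# A 256x256 input with the same settings does not line up with 7x7 windows
for problem in check_config(256, 7, (4, 2, 2, 2), (2, 2, 6, 2)):
    print(problem)
```

With a 224×224 input, every stage resolution (56, 28, 14, 7) is a multiple of 7, which is why the example configuration works as written.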

Troubleshooting

While working with the Swin Transformer, you might encounter some issues. Here are a few troubleshooting tips:

- If you run into installation issues, make sure your pip is updated.

- Ensure that the version of PyTorch you’re using is compatible with swin-transformer-pytorch.

- If you experience unexpected output, check the shape of your input tensor. It should be (batch, channels, height, width), with the channel count matching the channels argument, as in the 1 × 3 × 224 × 224 dummy input above.

- Refer back to the code parameters to ensure they align with your dataset and task structure.

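When chasing installation or compatibility problems, a useful first step is to see which versions are actually installed in the active environment. The snippet below is a small sketch using only the standard library's `importlib.metadata` (Python 3.8+); `installed_versions` is a hypothetical helper name. It reports versions only and does not decide whether a given PyTorch release is compatible with swin-transformer-pytorch.

```python
from importlib import metadata


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if it is absent."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


report = installed_versions(["pip", "torch", "swin-transformer-pytorch"])
for name, version in report.items():
    print(f"{name}: {version or 'not installed'}")
```

If a package shows up as not installed, you are probably running a different Python environment from the one you installed into.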

Final Thoughts


With the Swin Transformer, you add a powerful tool to your computer vision toolbox. Happy coding!