Safeguarding personal information is increasingly important in digital communication. This guide walks through the Hide-and-Seek (HaS) model developed by Tencent’s Security Xuanwu Lab, which anonymizes sensitive entities in text and later restores them. The sections below cover setup, the hide step, the seek step, and common problems.

What is the Hide-and-Seek Model?

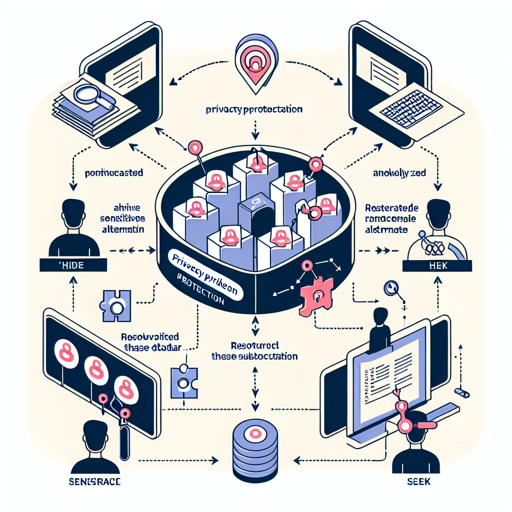

The Hide-and-Seek privacy protection model consists of two tasks: hide and seek. The hide task anonymizes sensitive entities by replacing them with random substitutes. The seek task maps those substitutes back to the original entities, so the restored text lines up with the original. Together, the two tasks keep sensitive entities private while preserving the meaning of the text being shared.

Getting Started with Hide-and-Seek

To implement the Hide-and-Seek model, you’ll need to follow these steps:

- Set Up Your Environment: Ensure that Python and necessary libraries are installed.

- Clone the Repository: Download the model code from the project’s GitHub repository.

- Install Required Packages: Use the following commands to install the necessary libraries:

pip install torch==2.1.0 --index-url https://download.pytorch.org/whl/cu118

pip install transformers==4.35.0

Understanding the Code

Let’s look at the code for the hide and seek steps. Picture a magician’s card trick: you take a card (the sensitive input), swap it for a different card (anonymization), and at the end of the trick you reveal the original card again (restoration).

Example of Hiding Information

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("SecurityXuanwuLab/HaS-820m")

model = AutoModelForCausalLM.from_pretrained("SecurityXuanwuLab/HaS-820m").to('cuda:0')

hide_template = """Paraphrase the text:%s\n\n"""

original_input = "张伟用苹果(iPhone 13)换了一箱好吃的苹果。"

input_text = hide_template % original_input

inputs = tokenizer(input_text, return_tensors='pt').to('cuda:0')

pred = model.generate(**inputs, max_length=100)

pred = pred.cpu()[0][len(inputs['input_ids'][0]):]

hide_input = tokenizer.decode(pred, skip_special_tokens=True)

print(hide_input)

# output: 李华用华为(Mate 20)换了一箱美味的橙子。

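In this snippet, the original input “张伟用苹果(iPhone 13)换了一箱好吃的苹果。” (“Zhang Wei traded his Apple (iPhone 13) for a box of tasty apples.”) becomes “李华用华为(Mate 20)换了一箱美味的橙子。” (“Li Hua traded his Huawei (Mate 20) for a box of delicious oranges.”). The person, brand, product model, and fruit are all replaced, while the sentence structure stays the same.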
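The generation steps above can be wrapped in a small helper. This is a sketch rather than code from the repository: `run_prompt` and `hide_text` are hypothetical names, and it swaps `max_length` for `max_new_tokens`, because in `generate` the `max_length` limit counts the prompt tokens too, so a long input leaves less room for the answer.

```python
# Same prompt as the hide example above.
HIDE_TEMPLATE = "Paraphrase the text:%s\n\n"


def run_prompt(model, tokenizer, prompt, device="cuda:0", max_new_tokens=128):
    """Generate a continuation for `prompt` and return only the new text."""
    inputs = tokenizer(prompt, return_tensors="pt").to(device)
    # max_new_tokens bounds only the generated part of the sequence.
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # The returned sequence starts with the prompt tokens; drop them.
    prompt_len = len(inputs["input_ids"][0])
    new_ids = output_ids[0][prompt_len:]
    return tokenizer.decode(new_ids, skip_special_tokens=True)


def hide_text(model, tokenizer, text, **kwargs):
    """Anonymize `text` with the hide prompt (hypothetical helper)."""
    return run_prompt(model, tokenizer, HIDE_TEMPLATE % text, **kwargs)
```

With `model` and `tokenizer` loaded as in the example, `hide_text(model, tokenizer, original_input)` would return the anonymized sentence.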

Example of Seeking Information Back

hide_input = "前天,'2022北京海淀·颐和园经贸合作洽谈会成功举行,各大媒体竞相报道了活动盛况。"

original_input = "昨天,’2016苏州吴中·太湖经贸合作洽谈会成功举行,各大媒体竞相报道了活动盛况。"

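# NOTE: this guide never defines seek_template or hide_output; both must be set before this line.

# seek_template needs three %s slots (it is filled with three values below); hide_output is presumably the text generated from hide_input.

input_text = seek_template % (hide_input, hide_output, original_input)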

inputs = tokenizer(input_text, return_tensors='pt').to('cuda:0')

pred = model.generate(**inputs, max_length=512)

pred = pred.cpu()[0][len(inputs['input_ids'][0]):]

original_output = tokenizer.decode(pred, skip_special_tokens=True)

print(original_output)

# output: 2016苏州吴中·太湖经贸合作洽谈会成功举办,各大媒体广泛报道
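In this example the restored output names the original event (the 2016 Suzhou Wuzhong · Taihu fair) rather than the substitute from `hide_input`. One simple sanity check after the seek step is to confirm that none of the substitute entities survive in the restored text. The sketch below assumes you list the substitutes by hand; `find_leaks` is a hypothetical helper, not part of the model or its repository.

```python
def find_leaks(restored_text, substitute_entities):
    """Return the substitute entities that still appear in the restored text."""
    return [entity for entity in substitute_entities if entity in restored_text]


# Substitutes picked by hand from hide_input in the example above.
substitutes = ["2022", "北京海淀", "颐和园"]
restored = "2016苏州吴中·太湖经贸合作洽谈会成功举办,各大媒体广泛报道"

print(find_leaks(restored, substitutes))  # → []
```

An empty list means no listed substitute leaked through. It does not prove that every original entity was restored correctly.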

Troubleshooting Tips

Here are some troubleshooting ideas to help you navigate common issues you may encounter:

- Performance Issues: If the model runs slowly, check that it is actually running on the GPU (the examples load it onto cuda:0) and that the machine has enough CPU, GPU, and memory headroom.

- Compatibility Errors: Make sure your Python version and libraries are compatible with each other. Newer releases can introduce breaking changes, so start from the versions pinned in the install commands above.

- Memory Errors: If you run out of memory, shorten the input, lower max_length, or process fewer texts at a time. Calling torch.cuda.empty_cache() between runs releases unused cached GPU memory.
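When chasing any of the issues above, it helps to first record what is actually installed. The following is a minimal sketch using only the standard library plus an optional `torch` import; `environment_report` is a hypothetical helper name, and falling back to "cpu" is an assumption of this sketch (the examples in this guide hard-code cuda:0).

```python
from importlib import metadata


def environment_report(packages=("torch", "transformers")):
    """Collect installed versions and whether a CUDA device is usable."""
    report = {}
    for name in packages:
        try:
            report[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            report[name] = None  # package is not installed
    try:
        import torch
        report["cuda_available"] = bool(torch.cuda.is_available())
    except ImportError:
        report["cuda_available"] = False
    # Device string to pass to .to(...); CPU fallback is this sketch's choice.
    report["device"] = "cuda:0" if report["cuda_available"] else "cpu"
    return report


print(environment_report())
```

Compare the reported versions with the ones pinned in the install commands before digging further into an error.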

