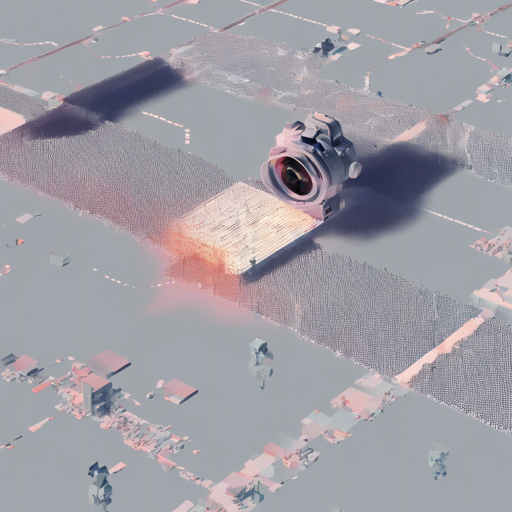

Depth completion from visual-inertial odometry is an active area of research in robotics and computer vision. This article covers VOICED, an unsupervised depth completion method published at leading robotics venues, and walks through setting up its TensorFlow and PyTorch implementations. Let’s explore unsupervised depth completion step by step!

About Sparse-to-Dense Depth Completion

Sparse-to-dense depth completion infers a dense depth map from an RGB image together with sparse depth measurements (in VOICED’s setting, the sparse points come from a visual-inertial odometry system). Imagine a dark room with a few scattered candles: you know exactly how far away each candle is (the sparse depth measurements), and you can dimly make out the room’s appearance (the RGB image), but to know the distance to every surface (the dense depth map), you have to fill in the gaps using both. That is precisely what depth completion does.
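To make the inputs and outputs concrete, the sketch below fills every missing pixel of a sparse depth map with the depth of its nearest valid neighbor. It is a deliberately naive baseline, not VOICED’s method: it ignores the RGB image entirely and assumes, for illustration only, that the sparse map is a nested list in which 0 marks a missing measurement. The function name `densify_nearest` is hypothetical.

```python
def densify_nearest(sparse):
    """Naive sparse-to-dense baseline: copy the nearest valid depth into each gap.

    Assumption (illustration only): `sparse` is a list of rows and any value
    <= 0 marks a pixel with no depth measurement.
    """
    # Collect (row, col, depth) for every valid measurement.
    points = [(r, c, d) for r, row in enumerate(sparse)
              for c, d in enumerate(row) if d > 0]
    if not points:
        raise ValueError("sparse depth map has no valid measurements")

    dense = []
    for r, row in enumerate(sparse):
        dense_row = []
        for c, d in enumerate(row):
            if d > 0:
                dense_row.append(d)  # keep measured depth unchanged
            else:
                # Brute-force nearest neighbor by squared pixel distance.
                _, _, nearest = min(
                    points, key=lambda p: (p[0] - r) ** 2 + (p[1] - c) ** 2)
                dense_row.append(nearest)
        dense.append(dense_row)
    return dense


if __name__ == "__main__":
    print(densify_nearest([[0, 3.0, 0, 0, 7.0]]))  # → [[3.0, 3.0, 3.0, 7.0, 7.0]]
```

Nearest-neighbor filling ignores the object boundaries visible in the RGB image, which is exactly the information a learned method can exploit.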

About VOICED

VOICED is an unsupervised method that first builds a scaffolding of the scene from the sparse depth measurements and then refines it with a lightweight network. Think of it as erecting the frame of a building (the scaffolding) from the structural points you already have (the sparse depth measurements), then finishing it with lighter materials (the lightweight network). According to its authors, this design achieved state-of-the-art accuracy at the time of publication while using fewer parameters than competing methods.
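One way to picture a scaffolding is as a piecewise-linear surface: assuming, as a simplification, that the sparse points are triangulated in the image plane and each triangle is filled by linearly interpolating its three corner depths in image coordinates, the depth at a pixel inside a triangle is a barycentric blend of the corner depths. The sketch below handles a single triangle; the triangulation step and the refining network are omitted, the function name `barycentric_depth` is hypothetical, and this is not the VOICED code.

```python
def barycentric_depth(p, a, b, c):
    """Interpolate depth at pixel p = (x, y) inside the triangle a, b, c.

    Each corner is (x, y, depth) in image coordinates. Depth is interpolated
    linearly in image coordinates. Returns None when p lies outside the
    triangle or the corners are collinear.
    """
    x, y = p
    (x1, y1, d1), (x2, y2, d2), (x3, y3, d3) = a, b, c
    det = (y2 - y3) * (x1 - x3) + (x3 - x2) * (y1 - y3)
    if det == 0:
        return None  # degenerate (collinear) triangle
    # Barycentric weights of p with respect to the three corners.
    w1 = ((y2 - y3) * (x - x3) + (x3 - x2) * (y - y3)) / det
    w2 = ((y3 - y1) * (x - x3) + (x1 - x3) * (y - y3)) / det
    w3 = 1.0 - w1 - w2
    if min(w1, w2, w3) < 0:
        return None  # p is outside this triangle
    # Weighted blend of the corner depths.
    return w1 * d1 + w2 * d2 + w3 * d3


if __name__ == "__main__":
    print(barycentric_depth((1, 1), (0, 0, 1.0), (4, 0, 3.0), (0, 4, 5.0)))  # → 2.5
```

Applying this over every triangle of a triangulation of the sparse points (for example one computed with `scipy.spatial.Delaunay`) would give a dense but coarse estimate, the kind of rough starting point that a refinement network is then meant to improve.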

Setting Up the TensorFlow Implementation

To get started with the TensorFlow implementation of VOICED, follow these steps:

- Use Ubuntu 16.04 and set up Python 3.5 or 3.6 with TensorFlow 1.14 or 1.15 on CUDA 10.0.

- Download the VOICED TensorFlow code from the project repository and navigate to it.

- Treat the ‘tensorflow’ directory as the root directory and run the code from there.
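Before running anything, it can help to confirm that the environment matches the versions listed above. The script below is a minimal sketch (the function names are hypothetical, not part of VOICED); it checks only the Python and TensorFlow versions, not CUDA 10.0, and avoids f-strings so that it also runs on Python 3.5.

```python
"""Check an environment against the versions listed for the TensorFlow
implementation of VOICED (Python 3.5/3.6, TensorFlow 1.14/1.15)."""
import sys

# Supported (major, minor) pairs, taken from the setup steps.
SUPPORTED_PYTHON = {(3, 5), (3, 6)}
SUPPORTED_TF = {(1, 14), (1, 15)}


def major_minor(version):
    """Turn a version string such as '1.15.5' into the tuple (1, 15)."""
    parts = version.split(".")
    return int(parts[0]), int(parts[1])


def check_tf_env(python_version, tf_version):
    """Return a list of problems; an empty list means the versions match."""
    problems = []
    if major_minor(python_version) not in SUPPORTED_PYTHON:
        problems.append("Python {} is not 3.5 or 3.6".format(python_version))
    if tf_version is None:
        problems.append("TensorFlow is not installed")
    elif major_minor(tf_version) not in SUPPORTED_TF:
        problems.append("TensorFlow {} is not 1.14 or 1.15".format(tf_version))
    return problems


if __name__ == "__main__":
    python_version = "{}.{}.{}".format(*sys.version_info[:3])
    try:
        import tensorflow as tf
        tf_version = tf.__version__
    except ImportError:
        tf_version = None
    for line in check_tf_env(python_version, tf_version) or ["versions look OK"]:
        print(line)
```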

Setting Up the PyTorch Implementation

The PyTorch re-implementation is the recommended option, given its more current software support and performance. Follow these steps:

- Prepare an environment with Ubuntu 20.04, Python 3.7 or 3.8, and PyTorch 1.10 running on CUDA 11.1.

- Get the source code from the VOICED PyTorch repository.

- Treat the ‘pytorch’ directory as the root of your setup. Keep in mind that data handling may differ from the TensorFlow version, so follow the PyTorch instructions rather than reusing TensorFlow steps.
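A similar check works for the PyTorch environment. This sketch (function names hypothetical, not part of VOICED) reads `torch.__version__` and `torch.version.cuda`; note that the latter reports the CUDA version PyTorch was built against, which is not necessarily the version of the system driver.

```python
"""Check an environment against the versions listed for the PyTorch
implementation of VOICED (Python 3.7/3.8, PyTorch 1.10, CUDA 11.1)."""
import sys

SUPPORTED_PYTHON = {(3, 7), (3, 8)}
SUPPORTED_TORCH = {(1, 10)}
SUPPORTED_CUDA = {(11, 1)}


def major_minor(version):
    """Map strings such as '1.10.0+cu111' or '11.1' to a (major, minor) tuple."""
    major, minor = version.split("+")[0].split(".")[:2]
    return int(major), int(minor)


def check_torch_env(python_version, torch_version, cuda_version):
    """Return a list of problems; an empty list means the versions match."""
    problems = []
    if major_minor(python_version) not in SUPPORTED_PYTHON:
        problems.append(f"Python {python_version} is not 3.7 or 3.8")
    if torch_version is None:
        problems.append("PyTorch is not installed")
        return problems
    if major_minor(torch_version) not in SUPPORTED_TORCH:
        problems.append(f"PyTorch {torch_version} is not 1.10")
    if cuda_version is None:
        problems.append("this PyTorch build has no CUDA support")
    elif major_minor(cuda_version) not in SUPPORTED_CUDA:
        problems.append(f"PyTorch was built for CUDA {cuda_version}, not 11.1")
    return problems


if __name__ == "__main__":
    python_version = ".".join(str(n) for n in sys.version_info[:3])
    try:
        import torch
        torch_version, cuda_version = torch.__version__, torch.version.cuda
    except ImportError:
        torch_version, cuda_version = None, None
    for line in check_torch_env(python_version, torch_version, cuda_version) or ["versions look OK"]:
        print(line)
```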

Related Projects

Expand your knowledge and capabilities with these related projects:

- ScaffNet – Learning Topology from Synthetic Data for Unsupervised Depth Completion.

- KBNet – Unsupervised Depth Completion with Calibrated Backprojection Layers.

- MonDi – Monitored Distillation for Positive Congruent Depth Completion.

Troubleshooting Tips

As with any project, you may face challenges. Here are some helpful troubleshooting ideas:

- If you encounter errors during installation, check that your Python, TensorFlow or PyTorch, and CUDA versions match the ones listed for the implementation you chose. Mismatched versions are a common source of compatibility errors.

- When debugging, print the shapes and value ranges of key variables (such as the input image, the sparse depth, and the predicted depth) to confirm that data flows through the pipeline as expected.

- If the predicted dense depth maps are inaccurate, revisit your model’s parameters and hyperparameters; settings that work for one dataset may need adjusting for another.
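For the print-statement tracing suggested above, a compact summary per depth map is often more useful than printing raw values. The helper below is a hypothetical sketch: it takes a single H×W depth map as nested lists (for instance, obtained with PyTorch’s `Tensor.tolist()` on one image) and assumes non-positive values mark missing depth. The sparse input should show low coverage, and a dense prediction should be close to 100%.

```python
def summarize_depth(name, depth):
    """Print and return a one-line summary of a depth map stored as nested lists.

    Assumption: values <= 0 mark missing measurements, as in a sparse map.
    """
    rows = len(depth)
    cols = len(depth[0]) if rows else 0
    valid = [d for row in depth for d in row if d > 0]
    total = rows * cols
    coverage = len(valid) / total if total else 0.0
    summary = "{}: {}x{}, {:.1%} valid".format(name, rows, cols, coverage)
    if valid:
        summary += ", min {:.3f}, max {:.3f}".format(min(valid), max(valid))
    print(summary)
    return summary


if __name__ == "__main__":
    summarize_depth("sparse", [[0, 2.0], [0, 0]])
    # prints "sparse: 2x2, 25.0% valid, min 2.000, max 2.000"
```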


Conclusion

Implementing unsupervised depth completion from visual-inertial odometry opens doors to new possibilities in computer vision. By following this guide, you’ll be well-equipped to dive into the depths of this fascinating field.

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.