In this article, we’ll walk through how to set up YOLOv5 combined with the DeepSort algorithm for object detection and tracking. The journey begins with understanding the key components and then delving into the code. So buckle up and prepare to enhance your computer vision projects!

Overview

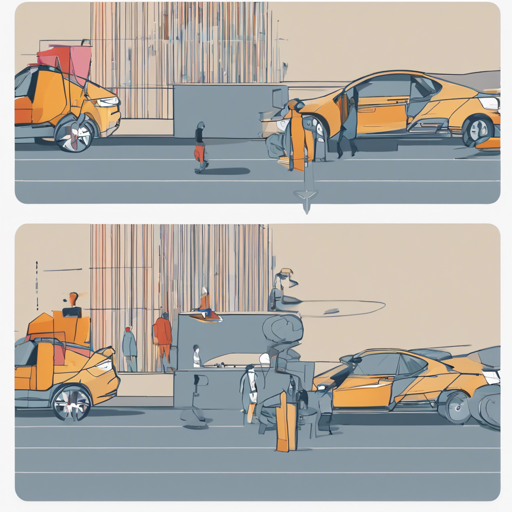

YOLOv5 is a real-time object detection model that locates and classifies objects in images and video frames. DeepSort adds tracking on top: it links those detections from frame to frame so that each object keeps a consistent ID. Together, they create a powerful duo for applications in surveillance, robotics, and more.

Setting Up the Detector

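Let’s break down the class implementation. The Detector class extends `baseDet`, a base class from the project that is not shown here. Its job is to load the model and handle preprocessing and detection. Note that the methods below use `self.img_size` and `self.threshold` without defining them, so these presumably come from `baseDet` or from `build_config`.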

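```python
import numpy as np
import torch
# Paths follow older YOLOv5 releases; newer ones moved or renamed some helpers.
from models.experimental import attempt_load
from utils.datasets import letterbox
from utils.general import non_max_suppression, scale_coords
from utils.torch_utils import select_device
from utils.BaseDetector import baseDet  # project base class (module path assumed)

class Detector(baseDet):
    def __init__(self):
        super().__init__()
        self.init_model()    # load the weights onto the GPU or CPU
        self.build_config()  # not shown; presumably provided by baseDet
```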

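Think of the Detector class as the foundation of a house. Each method is a component the house needs to be structurally sound:

- The `init_model` method: This is where we load the YOLOv5 weights (the walls and roof) and choose where the model runs: the GPU if one is available, otherwise the CPU.

- The `preprocess` method: This is akin to priming the walls before painting. It resizes and pads the input image, converts it to the tensor layout and precision the model expects, and adds a batch dimension, making it ready for the model.

- The `detect` method: This does the actual work, determining which objects are present in the image. It runs the model, removes low-confidence and duplicate boxes, maps the remaining boxes back onto the original image, and keeps only the classes of interest.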

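```python
def init_model(self):
    self.weights = 'weights/yolov5m.pt'
    # select_device expects a string such as '0' (first GPU) or 'cpu'
    self.device = select_device('0' if torch.cuda.is_available() else 'cpu')
    model = attempt_load(self.weights, map_location=self.device)
    model.to(self.device).eval()
    if self.device.type != 'cpu':
        model.half()  # FP16 inference is only supported on CUDA devices
    self.m = model
    # Models wrapped in DataParallel keep their class names on .module
    self.names = model.module.names if hasattr(model, 'module') else model.names

def preprocess(self, img):
    img0 = img.copy()  # keep the original BGR frame for rescaling and drawing
    # Resize with the aspect ratio preserved, then pad to the network input size
    img = letterbox(img, new_shape=self.img_size)[0]
    # BGR -> RGB, then HWC -> CHW
    img = img[:, :, ::-1].transpose(2, 0, 1)
    img = np.ascontiguousarray(img)
    img = torch.from_numpy(img).to(self.device)
    # Match the model's precision: FP16 on GPU, FP32 on CPU
    img = img.half() if self.device.type != 'cpu' else img.float()
    img /= 255.0  # scale pixel values from 0-255 to 0.0-1.0
    if img.ndimension() == 3:
        img = img.unsqueeze(0)  # add a batch dimension
    return img0, img
```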
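To make the geometry of `letterbox` concrete, here is a minimal pure-Python sketch of the arithmetic. It assumes the image is scaled to fit a square `new_size` canvas and padded evenly on both sides, which is similar in spirit to YOLOv5’s `letterbox` with `auto=False`; with its default settings YOLOv5 may instead pad only up to a multiple of the model stride. The helper names `letterbox_geometry` and `unletterbox_box` are hypothetical. The second function is the inverse mapping, the same idea that `scale_coords` applies later in `detect`.

```python
def letterbox_geometry(h, w, new_size=640):
    """Scale an h x w image to fit a new_size square, then pad it evenly.

    Returns (scale, (new_h, new_w), (pad_top, pad_left)).
    """
    scale = min(new_size / h, new_size / w)  # the tighter side decides the scale
    new_h, new_w = round(h * scale), round(w * scale)
    pad_top = (new_size - new_h) // 2   # padding split between top and bottom
    pad_left = (new_size - new_w) // 2  # padding split between left and right
    return scale, (new_h, new_w), (pad_top, pad_left)


def unletterbox_box(box, h, w, new_size=640):
    """Map an (x1, y1, x2, y2) box from letterboxed coordinates back to the
    original h x w image (hypothetical helper, similar to scale_coords)."""
    scale, _, (pad_top, pad_left) = letterbox_geometry(h, w, new_size)
    x1, y1, x2, y2 = box
    # Undo the padding offset, undo the resize, then clip to the image bounds
    x1, x2 = [min(max((x - pad_left) / scale, 0), w) for x in (x1, x2)]
    y1, y2 = [min(max((y - pad_top) / scale, 0), h) for y in (y1, y2)]
    return x1, y1, x2, y2


if __name__ == "__main__":
    # A 1920x1080 video frame letterboxed into a 640x640 network input
    print(letterbox_geometry(1080, 1920))
    print(unletterbox_box((100, 200, 300, 400), 1080, 1920))
```

For a 1920x1080 frame the scale is 1/3, the resized image is 640x360, and 140 rows of padding go above and below it, which is why a box must be shifted up by 140 pixels and then multiplied by 3 to land back on the original frame.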

The detect method preprocesses the frame, runs the model, and post-processes the raw predictions:

```python
def detect(self, im):
    im0, img = self.preprocess(im)

    pred = self.m(img, augment=False)[0]
    pred = pred.float()
    # Drop boxes below self.threshold confidence; 0.4 is the NMS IoU threshold
    pred = non_max_suppression(pred, self.threshold, 0.4)

    pred_boxes = []
    for det in pred:  # one tensor of detections per image in the batch
        if det is not None and len(det):
            # Map boxes from letterboxed input coordinates back to the original frame
            det[:, :4] = scale_coords(img.shape[2:], det[:, :4], im0.shape).round()

            for *x, conf, cls_id in det:
                lbl = self.names[int(cls_id)]
                if lbl not in ['person', 'car', 'truck']:  # classes to keep
                    continue
                x1, y1 = int(x[0]), int(x[1])
                x2, y2 = int(x[2]), int(x[3])
                pred_boxes.append((x1, y1, x2, y2, lbl, conf))

    return im, pred_boxes
```
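`non_max_suppression` is called with `self.threshold` as the confidence threshold and `0.4` as the IoU threshold. The pure-Python sketch below illustrates that step as plain greedy NMS followed by the class filter from `detect`: drop low-confidence boxes, repeatedly keep the highest-scoring box, and discard boxes of the same class that overlap it by more than the IoU threshold. It is a simplification, not YOLOv5’s implementation, which operates on tensors and combines objectness with class scores. The helpers `iou` and `greedy_nms` are hypothetical, and the default `conf_thres=0.3` is an arbitrary placeholder because the article does not show the value of `self.threshold`.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2, ...) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, conf_thres=0.3, iou_thres=0.4,
               keep_labels=("person", "car", "truck")):
    """boxes: list of (x1, y1, x2, y2, label, conf) tuples, like pred_boxes."""
    # 1. Drop low-confidence candidates and sort the rest, best first
    candidates = sorted((b for b in boxes if b[5] > conf_thres),
                        key=lambda b: b[5], reverse=True)
    kept = []
    for box in candidates:
        # 2. Keep a box unless it overlaps an already-kept box of the same class
        if all(k[4] != box[4] or iou(k, box) <= iou_thres for k in kept):
            kept.append(box)
    # 3. Keep only the classes of interest, as detect() does
    return [b for b in kept if b[4] in keep_labels]


if __name__ == "__main__":
    dets = [
        (10, 10, 110, 110, "person", 0.9),
        (15, 12, 112, 115, "person", 0.8),   # near-duplicate of the first box
        (12, 10, 108, 112, "car", 0.7),      # overlaps, but a different class
        (300, 300, 400, 400, "dog", 0.95),   # removed by the class filter
        (500, 500, 550, 550, "truck", 0.2),  # removed by the confidence filter
    ]
    print(greedy_nms(dets))  # keeps the 0.9 person box and the car box
```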

Integrating DeepSort

The next step uses the DeepSort algorithm to track the detected objects across frames, giving each one an ID that persists from frame to frame. Not only will you know which objects are present, you’ll also be able to follow them, like keeping tabs on all your friends at a large gathering!
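DeepSort’s full matching combines a Kalman-filter motion model, appearance features from a re-identification network, and a matching cascade. As a much simpler illustration of the underlying tracking-by-detection idea, the sketch below carries IDs forward by greedily matching each new box to the previous frame’s box with the highest IoU. This is plain IoU matching, a deliberately swapped-in technique and not DeepSort; `IoUTracker` is a hypothetical class.

```python
from itertools import count


def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


class IoUTracker:
    """Assigns persistent integer IDs by greedy IoU matching between frames."""

    def __init__(self, iou_thres=0.3):
        self.iou_thres = iou_thres
        self.tracks = {}      # track_id -> box seen in the previous frame
        self._ids = count(1)  # source of fresh track IDs

    def update(self, boxes):
        """boxes: list of (x1, y1, x2, y2). Returns a list of (box, track_id)."""
        unmatched = dict(self.tracks)
        results = []
        for box in boxes:
            # Pick the still-unmatched previous track that overlaps this box most
            best_id, best_iou = None, self.iou_thres
            for tid, prev in unmatched.items():
                overlap = iou(box, prev)
                if overlap >= best_iou:
                    best_id, best_iou = tid, overlap
            if best_id is None:
                best_id = next(self._ids)  # no good match: start a new track
            else:
                del unmatched[best_id]     # each track matches at most one box
            results.append((box, best_id))
        # Unmatched tracks are dropped at once; DeepSort instead keeps them
        # alive for up to max_age frames so they can be re-associated.
        self.tracks = {tid: box for box, tid in results}
        return results
```

Feeding it one frame with two boxes yields IDs 1 and 2; if the next frame contains the same two objects, slightly shifted and listed in the opposite order, they get IDs 2 and 1 back. Pure IoU matching breaks down when objects cross or disappear briefly, which is exactly what DeepSort’s motion model and appearance features are meant to handle.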

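```python
import torch
# Module paths assume the deep_sort_pytorch layout bundled with the project
from deep_sort.deep_sort import DeepSort
from deep_sort.utils.parser import get_config

# Load the tracker settings (YAML path assumed from the project layout)
cfg = get_config()
cfg.merge_from_file('deep_sort/configs/deep_sort.yaml')

deepsort = DeepSort(cfg.DEEPSORT.REID_CKPT,  # re-identification model weights
                    max_dist=cfg.DEEPSORT.MAX_DIST,  # max appearance (cosine) distance
                    min_confidence=cfg.DEEPSORT.MIN_CONFIDENCE,  # skip weaker detections
                    nms_max_overlap=cfg.DEEPSORT.NMS_MAX_OVERLAP,  # tracker-side NMS overlap
                    max_iou_distance=cfg.DEEPSORT.MAX_IOU_DISTANCE,  # gate for IoU matching
                    max_age=cfg.DEEPSORT.MAX_AGE,  # frames a track survives unmatched
                    n_init=cfg.DEEPSORT.N_INIT,  # hits needed to confirm a track
                    nn_budget=cfg.DEEPSORT.NN_BUDGET,  # appearance features kept per track
                    use_cuda=torch.cuda.is_available())  # fall back to CPU like the detector
```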
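Two of these parameters, `n_init` and `max_age`, govern a track’s lifecycle. In the reference deep_sort implementation, a new track starts as tentative, becomes confirmed once it has been matched in `n_init` consecutive frames (the creating detection counts as the first), is deleted immediately if it misses a match while still tentative, and is deleted once a confirmed track has gone more than `max_age` frames without a match. The sketch below models only that state machine in plain Python; `TrackLifecycle` is a hypothetical class, the Kalman filter and appearance features are omitted, and the defaults are placeholders since the real values come from `cfg.DEEPSORT`.

```python
class TrackLifecycle:
    """State machine for one track, modeled loosely on deep_sort's Track."""

    def __init__(self, n_init=3, max_age=70):
        self.n_init, self.max_age = n_init, max_age
        self.state = "tentative"
        self.hits = 1               # the creating detection counts as a hit
        self.time_since_update = 0

    def step(self, matched):
        """Advance one frame; `matched` says whether a detection was assigned."""
        if self.state == "deleted":
            return self.state
        self.time_since_update += 1  # prediction step: one more frame elapsed
        if matched:
            self.hits += 1
            self.time_since_update = 0
            if self.state == "tentative" and self.hits >= self.n_init:
                self.state = "confirmed"
        elif self.state == "tentative":
            self.state = "deleted"   # a miss before confirmation ends the track
        elif self.time_since_update > self.max_age:
            self.state = "deleted"   # confirmed, but unmatched for too long
        return self.state
```

With `n_init=3` and `max_age=2`, two further matches confirm a new track, and it then survives two unmatched frames before the third deletes it. Raising `n_init` suppresses short-lived false positives at the cost of a delay before IDs appear; raising `max_age` lets objects survive longer occlusions but keeps stale tracks around longer.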

Running the Demo

To test this implementation, you can run the demo script:

```bash
python demo.py
```

After running, you should see results indicating which objects are detected and tracked.

Troubleshooting Tips

- If you encounter a problem with the model weights, make sure the path `weights/yolov5m.pt` is correct.

- Make sure you have the necessary libraries installed, such as torch and numpy. Running `pip install -r requirements.txt` can help!

- If inference is slow, check that your environment supports CUDA so the model runs on the GPU.

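The weights, library, and CUDA checks in these tips can be partly automated. The following standard-library-only sketch assumes the default `weights/yolov5m.pt` location used in `init_model` and reports whether that file exists, whether `torch` and `numpy` can be imported, and, if PyTorch is installed, whether it can see a CUDA device. `check_environment` is a hypothetical helper, not part of the project.

```python
import importlib
import importlib.util
from pathlib import Path


def check_environment(weights="weights/yolov5m.pt", modules=("torch", "numpy")):
    """Return a dict describing common setup problems for the demo."""
    report = {"weights_found": Path(weights).is_file()}
    for name in modules:
        # find_spec returns None when a top-level module cannot be found
        report[f"{name}_installed"] = importlib.util.find_spec(name) is not None
    if report.get("torch_installed"):
        torch = importlib.import_module("torch")
        report["cuda_available"] = torch.cuda.is_available()
    else:
        report["cuda_available"] = False  # no PyTorch, so no CUDA inference
    return report


if __name__ == "__main__":
    for key, ok in check_environment().items():
        print(f"{key:20s} {ok}")
```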

At **fxis.ai**, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.