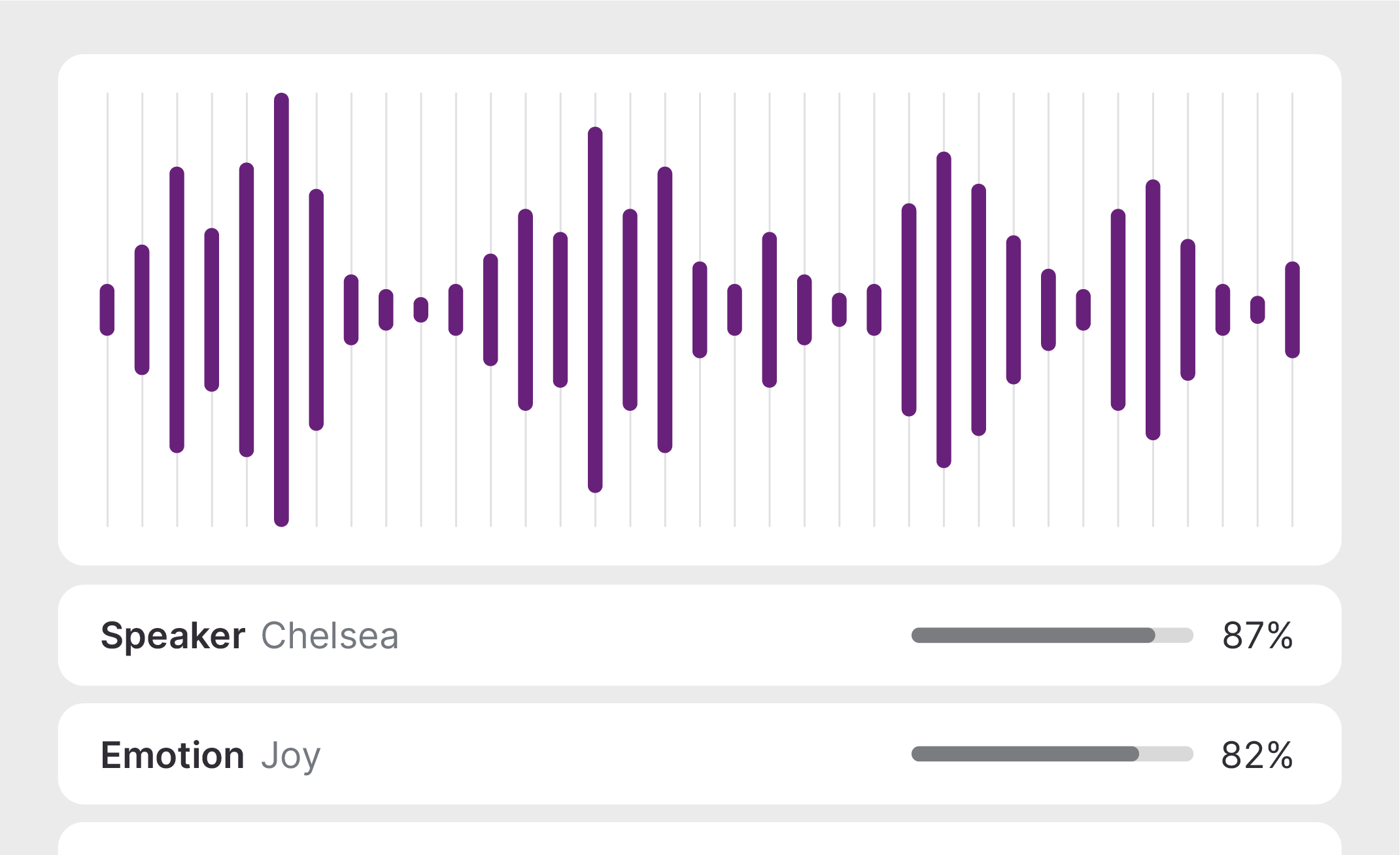

Are you ready to elevate your mobile app with real-time speech processing capabilities? With the HuggingFace-WavLM-Base-Plus model based on Microsoft’s WavLM, you can do just that! This guide will walk you through the installation and usage of this powerful model optimized for mobile deployment.

Understanding the Model

HuggingFace-WavLM-Base-Plus is a speech processing model designed for real-time use and optimized for Qualcomm devices such as the Samsung Galaxy S23 Ultra. Think of it like a highly skilled listener: WavLM is pre-trained with self-supervision to turn raw audio into speech representations, which downstream tasks such as speech recognition can then build on, while keeping response times low on the device.

Installation Steps

To get started with the HuggingFace-WavLM-Base-Plus model, you need to install it as a Python package. Follow these simple steps:

- Open your command line interface.

- Execute the following command:

pip install "qai-hub-models[huggingface_wavlm_base_plus]"

Configuring the Qualcomm® AI Hub

Once you have the model installed, the next step is to configure the Qualcomm AI Hub:

- Sign in to Qualcomm® AI Hub with your Qualcomm ID.

- Navigate to Account -> Settings -> API Token to obtain your API token.

- Run the following command in your terminal, replacing API_TOKEN with the token you obtained:

qai-hub configure --api_token API_TOKEN

- Refer to the documentation for more details.

Running a Demo

The package includes a straightforward demo. To run it, simply execute:

python -m qai_hub_models.models.huggingface_wavlm_base_plus.demo

For use in environments such as Jupyter Notebook or Google Colab, use the following command instead:

%run -m qai_hub_models.models.huggingface_wavlm_base_plus.demo

Deploying Compiled Models

If you want to deploy your model on a cloud-hosted device, follow these steps:

- Compile the model for on-device deployment. Like a chef gathering every ingredient before cooking, this step prepares everything the target device needs ahead of time.

- Trace the model with jit.trace, then submit it through the submit_compile_job API to create a version of your model tailored to a specific device.

- After compiling, profile and verify on-device performance using the additional scripts provided in the package.
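The steps above can be sketched roughly as follows. This is a minimal sketch, not the package's definitive workflow: it assumes that the model module exposes a Model class with from_pretrained(), sample_inputs(), and get_input_spec(), that sample_inputs() returns a mapping from input name to a list of arrays, and that the device name you pass matches one available to your AI Hub account. The function name compile_and_profile is hypothetical. Calling it requires the installed package and a configured API token.

```python
def compile_and_profile(device_name):
    """Trace the model, compile it for one device, then profile it.

    Imports are local so that defining this function does not require
    torch or qai_hub; calling it does, plus a configured API token.
    """
    import torch
    import qai_hub as hub
    # Assumption: the model module exposes a `Model` class.
    from qai_hub_models.models.huggingface_wavlm_base_plus import Model

    torch_model = Model.from_pretrained()

    # Assumption: sample_inputs() returns {input_name: [array, ...]}.
    sample_inputs = torch_model.sample_inputs()
    example = tuple(torch.tensor(arrays[0]) for arrays in sample_inputs.values())

    # Step 1: trace the PyTorch model in memory.
    traced = torch.jit.trace(torch_model, example)

    # Step 2: compile the traced model for the chosen device.
    device = hub.Device(device_name)
    compile_job = hub.submit_compile_job(
        model=traced,
        device=device,
        input_specs=torch_model.get_input_spec(),
    )
    target_model = compile_job.get_target_model()

    # Step 3: profile the compiled model on a cloud-hosted device.
    profile_job = hub.submit_profile_job(model=target_model, device=device)
    return target_model, profile_job

# Example call (needs network access and a valid token):
# compile_and_profile("Samsung Galaxy S23 Ultra")
```

The device name in the commented call is taken from the device mentioned earlier in this guide; whether that exact string is accepted depends on the devices your account can see, so check the device list before relying on it.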

Troubleshooting

Should you run into any issues during installation or deployment, here are a few troubleshooting tips:

- Make sure you have the most recent version of pip installed. You can upgrade it using pip install --upgrade pip.

- If you encounter a “Module Not Found” error (ModuleNotFoundError), double-check that the installation command ran without errors, and ensure you are using the correct Python environment.

- For questions about compatibility with a specific device or runtime, consult the AI Hub documentation and the model's license.
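For the “Module Not Found” case in particular, a quick check from inside Python can show whether the interpreter you are running is the one the package was installed into. The snippet below is a small sketch using only the standard library; the helper names is_importable and describe_environment are hypothetical, and qai_hub_models is the module name taken from the demo command in this guide.

```python
import importlib.util
import sys


def is_importable(module_name):
    """Return True if the current interpreter can locate module_name."""
    try:
        return importlib.util.find_spec(module_name) is not None
    except (ImportError, ValueError):
        # Raised for dotted names with a missing parent, or a broken spec.
        return False


def describe_environment(module_name="qai_hub_models"):
    """Summarize whether module_name is visible and which Python is in use."""
    status = "found" if is_importable(module_name) else "NOT found"
    return f"{module_name}: {status} (interpreter: {sys.executable})"


print(describe_environment())
```

If the module is reported as not found, compare the interpreter path printed here with the one that pip used, for example by running python -m pip --version in the same terminal.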

Additional References

To learn about the underlying mechanisms and architecture, consider reading:

- WavLM: Large-Scale Self-Supervised Pre-Training for Full Stack Speech Processing

- Source Model Implementation