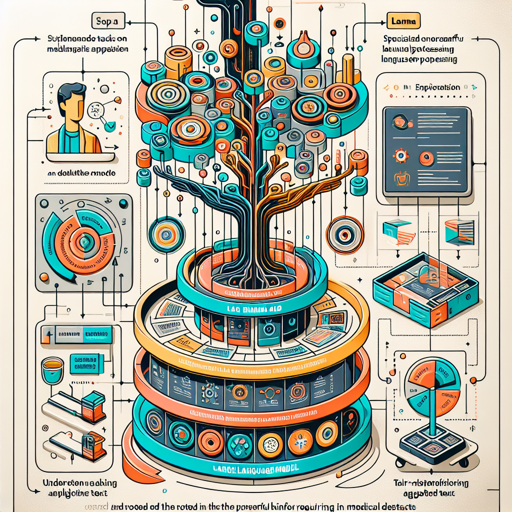

The Llama-3 medical model published by bongbongs builds on the Llama-3-8B architecture and adds specialized training on medical datasets, which tailors it to applications in the healthcare sector. In this post, we will explore how to use it for medical text tasks, along with troubleshooting tips for the problems you are most likely to hit.

Understanding the Llama-3 Model

The base Llama-3-8B model is a general-purpose language model. This version has been fine-tuned on curated medical training datasets, so it is tuned for tasks that depend on medical language: understanding clinical terminology and generating coherent medical text.

Utilizing Llama-3 in Medical Applications

Here’s a straightforward guide on how to apply Llama-3 in your medical projects:

- Data Preparation: Ensure your input data is clean and consistently formatted. Because the model was fine-tuned on medical datasets, keep medical terms and phrases intact in your inputs, and include them in any data you use for further fine-tuning.

- Model Integration: Integrate the Llama-3 model with your application. Depending on your programming environment, this could involve setting up libraries or APIs that access the model.

- Testing and Validation: Run your inputs through the model. Validate the outputs with medical professionals to ensure the accuracy and relevance of the information produced.
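
The three steps above can be sketched in Python. This is a minimal illustration under stated assumptions, not the model author's pipeline: `MODEL_ID` is a hypothetical placeholder, the `Question:/Answer:` prompt format is an assumption, and the helper names (`clean_text`, `build_prompt`, `run_batch`, `load_generator`) are invented for this example. The loader assumes the Hugging Face `transformers` library with PyTorch and imports it lazily, so the rest of the code runs without it.

```python
import re
import unicodedata
from typing import Callable, Iterable

# Hypothetical placeholder: replace with the model repository you actually use.
MODEL_ID = "your-org/your-medical-llama-3"


def clean_text(text: str) -> str:
    """Normalize Unicode (NFC) and collapse runs of whitespace."""
    text = unicodedata.normalize("NFC", text)
    return re.sub(r"\s+", " ", text).strip()


def build_prompt(question: str) -> str:
    """Wrap a cleaned question in a simple prompt (assumed format)."""
    return f"Question: {clean_text(question)}\nAnswer:"


def run_batch(generate: Callable[[str], str], questions: Iterable[str]) -> list:
    """Run each question through `generate`, keeping records for expert review."""
    records = []
    for question in questions:
        prompt = build_prompt(question)
        records.append(
            {"prompt": prompt, "output": generate(prompt), "reviewed": False}
        )
    return records


def load_generator(model_id: str = MODEL_ID, max_new_tokens: int = 128):
    """Return a `generate` callable backed by transformers (assumed setup)."""
    from transformers import AutoModelForCausalLM, AutoTokenizer  # lazy import

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id)

    def generate(prompt: str) -> str:
        inputs = tokenizer(prompt, return_tensors="pt")
        output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
        # Decode only the newly generated tokens, not the echoed prompt.
        new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
        return tokenizer.decode(new_tokens, skip_special_tokens=True)

    return generate
```

Passing the generator in as a callable keeps data preparation and record keeping testable with a stub, for example `run_batch(lambda p: "stub", ["What is hypertension?"])`. Each record starts with `reviewed` set to `False` so that a medical professional can sign off on outputs before they are used.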

An Analogy to Understand Model Training

Think of fine-tuning Llama-3 as training a chef. The chef (the base model) starts with general culinary skills, then through rigorous training (fine-tuning) specializes in one demanding cuisine (the medical datasets). Just as the chef learns the nuances of preparing healthy meals with precision, the fine-tuned model has absorbed the specific language and knowledge it needs for the medical field.

Troubleshooting Common Issues

Even with a robust model like Llama-3, challenges may arise. Here are some common troubleshooting ideas:

- Model Performance: If outputs are irrelevant or incorrect, double-check the training data for completeness and accuracy. Fine-tuning further on specific cases may yield better results.

- Integration Problems: Ensure you have the correct libraries and dependencies installed. Consult documentation or support forums for specific errors.

- Output Quality: Validate model outputs with medical professionals to assess coherence. Adding more diverse medical data for fine-tuning can improve response accuracy.
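
For integration problems, a quick first check is whether the expected packages are installed at all. The sketch below uses only the standard library's `importlib.metadata`. The package list in `REQUIRED` is an assumption for a `transformers`-based setup, and the function names are invented for this example, so adjust both to your own stack.

```python
from importlib import metadata
from typing import Dict, Iterable, List, Optional

# Assumed package list for a transformers-based setup; adjust to your stack.
REQUIRED = ["torch", "transformers"]


def installed_versions(packages: Iterable[str]) -> Dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None if missing."""
    versions: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


def missing_packages(packages: Iterable[str]) -> List[str]:
    """Return the names of packages that are not installed."""
    return [
        name
        for name, version in installed_versions(packages).items()
        if version is None
    ]


if __name__ == "__main__":
    for name, version in installed_versions(REQUIRED).items():
        print(f"{name}: {version or 'NOT INSTALLED'}")
```

Including the reported versions when you search documentation or post on a support forum usually makes a specific error easier to diagnose.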

Conclusion

With its focused training on medical datasets, this Llama-3 fine-tune is well suited to a range of healthcare applications. By carefully preparing your data, integrating the model, and validating outputs with medical professionals, you can use it effectively. Keep an eye on model updates so that your projects benefit from later improvements.

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.