This guide introduces Donut (Document Understanding Transformer), a model that converts document images into text without a separate OCR step. It walks through the model’s structure, its intended uses, and troubleshooting tips.

Understanding the Donut Model

The Donut model described here is fine-tuned on the CORD dataset. Its architecture pairs a vision encoder (Swin Transformer) with a text decoder (BART). Here’s a simplified analogy for how the two parts work together:

- Vision Encoder (Swin Transformer): Think of the encoder as a skilled artist examining a picture. It meticulously analyzes every detail and captures the essence of the image, converting it into a structure of information (tensor of embeddings).

- Text Decoder (BART): Once the artist has interpreted the image’s details, the text decoder acts like a master storyteller. It takes the artist’s (encoder’s) insights and carefully crafts a coherent narrative, generating text based on the image analysis.

In technical terms, the encoder outputs an embedding tensor with the shape (batch_size, seq_len, hidden_size), which the decoder then uses to autoregressively generate text.
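
The hand-off between encoder and decoder can be illustrated with a toy greedy decoding loop. This is a minimal sketch in plain Python, not Donut's actual implementation: `toy_decoder`, the four-token vocabulary, and the one-hot "encoder states" are hypothetical stand-ins, and the batch dimension is dropped so the encoder output here has shape (seq_len, hidden_size).

```python
from typing import Callable, List, Sequence

EncoderStates = List[List[float]]  # shape: (seq_len, hidden_size), batch omitted


def greedy_decode(
    decoder: Callable[[EncoderStates, List[int]], Sequence[float]],
    encoder_states: EncoderStates,
    bos_id: int,
    eos_id: int,
    max_len: int = 16,
) -> List[int]:
    """Generate token ids one at a time, feeding each choice back in."""
    tokens = [bos_id]
    for _ in range(max_len):
        # The decoder sees the encoder output plus everything generated so far.
        scores = decoder(encoder_states, tokens)
        next_id = max(range(len(scores)), key=lambda i: scores[i])
        tokens.append(next_id)
        if next_id == eos_id:
            break
    return tokens


# Hypothetical toy setup: vocabulary of 4 ids, 0 = BOS, 1 = EOS.
VOCAB_SIZE, BOS, EOS = 4, 0, 1


def toy_decoder(encoder_states: EncoderStates, tokens: List[int]) -> Sequence[float]:
    """Stand-in for a real decoder: copies one encoder position per step."""
    step = len(tokens) - 1  # number of tokens generated after BOS
    if step < len(encoder_states):
        return encoder_states[step]
    # No encoder positions left, so put all the score on EOS.
    return [1.0 if i == EOS else 0.0 for i in range(VOCAB_SIZE)]


# Pretend the encoder produced two positions "pointing at" tokens 2 and 3.
encoder_states = [[0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
print(greedy_decode(toy_decoder, encoder_states, BOS, EOS))  # [0, 2, 3, 1]
```

In a real model the decoder attends over the encoder embeddings with cross-attention instead of copying them, and the hidden size is unrelated to the vocabulary size, but the loop has the same shape: predict one token, append it, repeat until the end-of-sequence token appears.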

Getting Started with Donut

To get started, you have two main resources: the model’s GitHub repository, which hosts the original implementation, and the Hugging Face documentation, which provides code examples and detailed guidance.
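
As a rough starting point, the following sketch shows how a receipt image might be parsed through the Hugging Face `transformers` API. Several things here are assumptions not stated in this article: the checkpoint name `naver-clova-ix/donut-base-finetuned-cord-v2`, the task prompt `<s_cord-v2>`, and the helper names `clean_sequence` and `parse_receipt`, which are purely illustrative. It also assumes `torch`, `transformers`, and Pillow are installed.

```python
import re

# Assumed checkpoint and task prompt; check the model card for the one you use.
CHECKPOINT = "naver-clova-ix/donut-base-finetuned-cord-v2"
TASK_PROMPT = "<s_cord-v2>"


def clean_sequence(sequence: str, eos_token: str, pad_token: str) -> str:
    """Drop EOS/PAD tokens and the leading task-prompt tag from decoded text."""
    sequence = sequence.replace(eos_token, "").replace(pad_token, "")
    # Remove only the first tag, which is the task prompt.
    return re.sub(r"<.*?>", "", sequence, count=1).strip()


def parse_receipt(image_path: str) -> dict:
    """Run the fine-tuned model on one image and return the parsed fields."""
    # Heavy imports live here so clean_sequence stays usable without them.
    import torch
    from PIL import Image
    from transformers import DonutProcessor, VisionEncoderDecoderModel

    processor = DonutProcessor.from_pretrained(CHECKPOINT)
    model = VisionEncoderDecoderModel.from_pretrained(CHECKPOINT)
    model.eval()

    image = Image.open(image_path).convert("RGB")
    pixel_values = processor(image, return_tensors="pt").pixel_values

    # The decoder is primed with the task prompt rather than free text.
    decoder_input_ids = processor.tokenizer(
        TASK_PROMPT, add_special_tokens=False, return_tensors="pt"
    ).input_ids

    with torch.no_grad():
        output_ids = model.generate(
            pixel_values,
            decoder_input_ids=decoder_input_ids,
            max_length=model.decoder.config.max_position_embeddings,
            pad_token_id=processor.tokenizer.pad_token_id,
            eos_token_id=processor.tokenizer.eos_token_id,
            bad_words_ids=[[processor.tokenizer.unk_token_id]],
        )

    sequence = processor.batch_decode(output_ids)[0]
    sequence = clean_sequence(
        sequence, processor.tokenizer.eos_token, processor.tokenizer.pad_token
    )
    # Convert the tag-delimited output into a nested dictionary.
    return processor.token2json(sequence)
```

Calling `parse_receipt("receipt.png")` (a hypothetical file path) downloads the checkpoint on first use and returns a dictionary of the fields the model extracted. For repeated calls, loading the processor and model once outside the function would avoid reloading them each time.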

CORD Dataset Overview

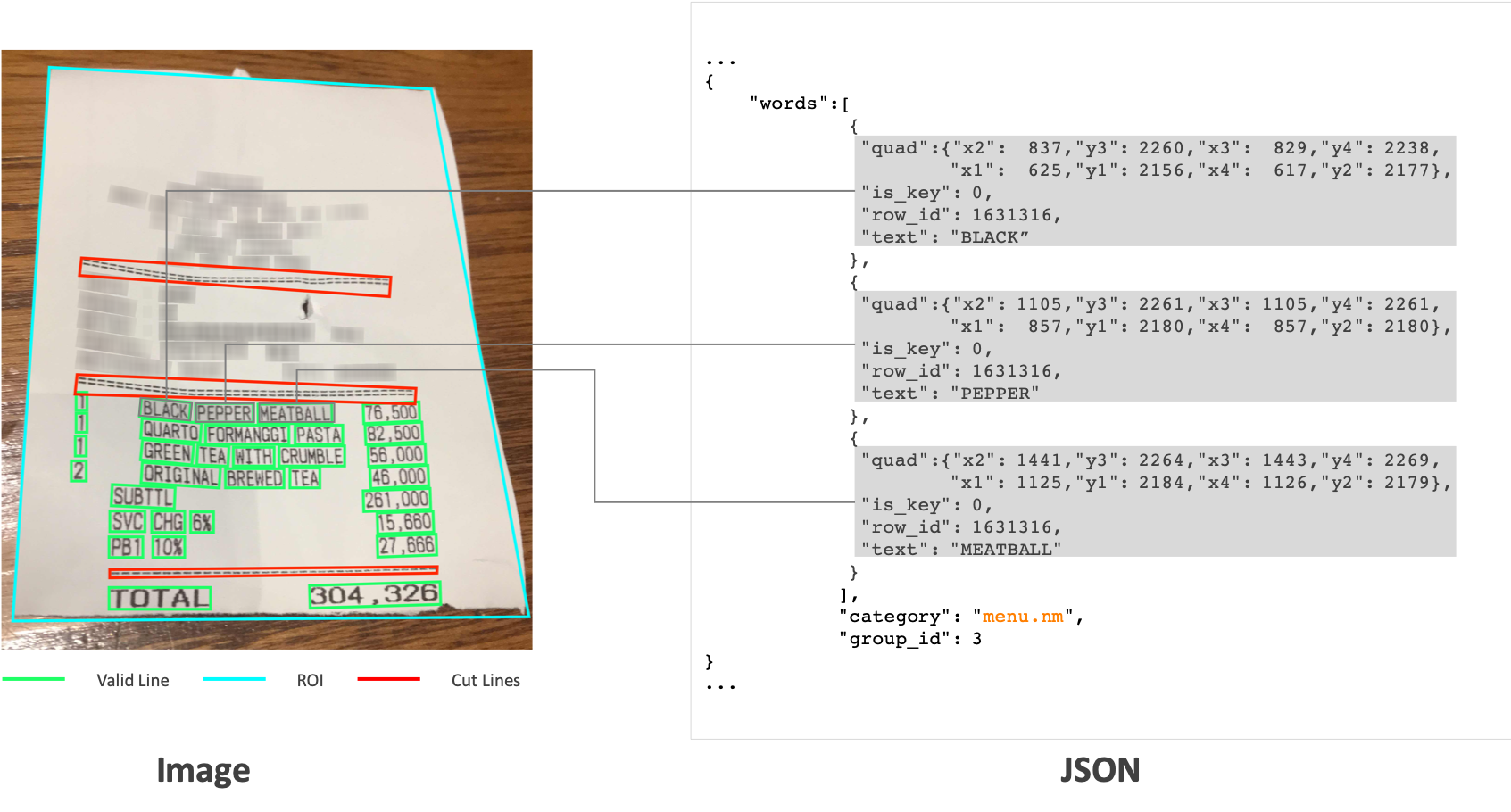

This Donut checkpoint is fine-tuned on CORD, a dataset built for document parsing. It consists of receipts, so the model is best suited to extracting key information from receipts and similar documents.

Troubleshooting and Additional Tips

While using the Donut model, you may encounter some bumps along the road. Here are a few troubleshooting ideas to help you out:

- Image Size Issues: Ensure that your input images are appropriately sized. Very large images can slow inference and increase memory use.

- Output Quality: The quality of the generated text depends on the clarity of your input images. Make sure images are sharp and well-lit.

- Dependencies: Confirm that all libraries and dependencies are correctly installed as per the Hugging Face documentation.
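
Two of the checks above can be automated with the standard library alone. The sketch below is only illustrative: the package list in `REQUIRED` and the 1280-pixel limit are assumptions chosen for the example, not values taken from the Donut documentation, and the function names are hypothetical.

```python
from importlib.util import find_spec
from typing import Iterable, List, Tuple

# Assumed top-level import names; adjust to match your environment.
REQUIRED = ["torch", "transformers", "PIL", "sentencepiece"]


def missing_packages(names: Iterable[str]) -> List[str]:
    """Return the top-level packages that cannot be found for import."""
    return [name for name in names if find_spec(name) is None]


def fit_within(width: int, height: int, max_side: int = 1280) -> Tuple[int, int]:
    """Scale (width, height) down so the longest side is at most max_side.

    The aspect ratio is preserved, and images already within the limit
    are returned unchanged (never upscaled).
    """
    longest = max(width, height)
    if longest <= max_side:
        return width, height
    scale = max_side / longest
    return max(1, round(width * scale)), max(1, round(height * scale))
```

For example, `missing_packages(REQUIRED)` returns an empty list when everything is installed, and `fit_within(4000, 3000, max_side=1000)` gives `(1000, 750)`, which could then be passed to an image library's resize call before the image reaches the processor.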
