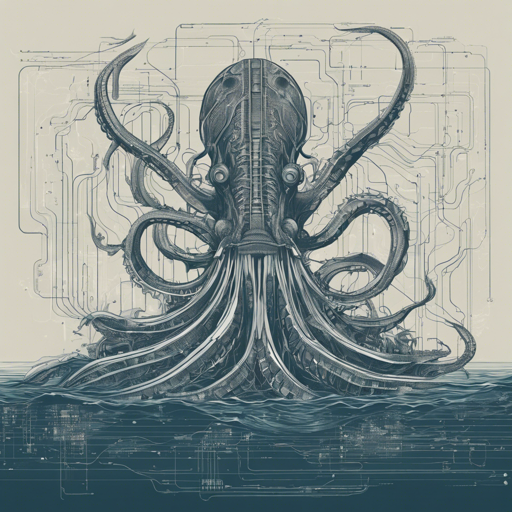

Welcome to the fascinating world of the Kraken model! In this article, we walk you through loading and using Kraken, an architecture designed for dynamic text generation. The Kraken model, a collaboration between Cognitive Computations, VAGO Solutions, and Hyperspace.ai, routes each input to one of several expert language models so that the response fits the context of the request.

Understanding the Kraken Architecture

Think of the Kraken architecture as a skilled conductor of an orchestra. Just as a conductor directs musicians to play different parts at the right time, Kraken manages multiple causal language models (CLMs) and sends each input to the model best suited to its context, so the output stays relevant. The conductor (Kraken) follows a score (KrakenConfig) that tells it how to coordinate the instruments (models and tokenizers) so they play together.
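To make the conductor analogy concrete, here is a toy sketch of the routing step. It is not Kraken's implementation: a real router would use a learned classifier rather than keyword counts, and the expert names (`reasoning`, `function_calling`, `python`, `sql`) and their trigger words are hypothetical choices that mirror the experts mentioned later in this article.

```python
from dataclasses import dataclass


@dataclass
class Expert:
    name: str
    keywords: tuple[str, ...]  # hypothetical trigger words for this expert


# Hypothetical expert registry; a real router would use a trained classifier instead.
EXPERTS = [
    Expert("reasoning", ("percentage", "mass", "prove", "why")),
    Expert("function_calling", ("calculate", "function", "call")),
    Expert("python", ("python", "def ", "script")),
    Expert("sql", ("sql", "select", "table", "query")),
]


def route(prompt: str, default: str = "reasoning") -> str:
    """Return the name of the expert whose keywords best match the prompt."""
    text = prompt.lower()
    # Count how many of each expert's keywords appear in the prompt.
    scores = {e.name: sum(kw in text for kw in e.keywords) for e in EXPERTS}
    best = max(scores, key=scores.get)
    # Fall back to the default expert when nothing matches at all.
    return best if scores[best] > 0 else default


print(route("Find the mass percentage of Ba in BaO"))
print(route("Write a SQL query that selects all rows from the users table"))
```

The first call picks `reasoning` and the second picks `sql`. Swapping the keyword scorer for a classifier changes only the body of `route`, which is the part Kraken's own router replaces.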

Features of Kraken

- Dynamic Model Routing: Automatically routes each input to the most suitable language model based on the input's characteristics.

- Multiple Language Models: Supports a variety of pre-trained Causal Language Models (CLMs) for flexibility.

- Customizable Templates: Allows formatting of input using predefined templates to adapt to different contexts.

- Extensible Configuration: Easy to customize for various causal language modeling use cases.
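The Customizable Templates and Extensible Configuration features can be pictured as a mapping from each expert to a model path and a prompt template. The sketch below is a hypothetical illustration, not the actual `KrakenConfig` schema: the class `ToyKrakenConfig`, its field names, the model paths, and the ChatML-style and Alpaca-style template strings are all assumptions.

```python
from dataclasses import dataclass, field

# Two illustrative prompt formats (assumed; real experts may use others).
TEMPLATES = {
    "chatml": "<|im_start|>system\n{system}<|im_end|>\n<|im_start|>user\n{user}<|im_end|>\n<|im_start|>assistant\n",
    "alpaca": "{system}\n\n### Instruction:\n{user}\n\n### Response:\n",
}


@dataclass
class ToyKrakenConfig:
    """Hypothetical stand-in for a routing config; not the real KrakenConfig."""
    models: dict[str, str] = field(default_factory=dict)     # expert -> model path
    templates: dict[str, str] = field(default_factory=dict)  # expert -> template key

    def format_prompt(self, expert: str, system: str, user: str) -> str:
        """Fill the expert's template with the system and user text."""
        template = TEMPLATES[self.templates[expert]]
        return template.format(system=system, user=user)


config = ToyKrakenConfig(
    models={"reasoning": "path/to/reasoning-expert", "sql": "path/to/sql-expert"},
    templates={"reasoning": "chatml", "sql": "alpaca"},
)
print(config.format_prompt("sql", "You are a SQL expert.", "List all users."))
```

Adding an expert in this sketch means adding one entry to each mapping, which is the sense in which such a configuration is extensible.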

How to Load and Call the Kraken Model

Follow these steps to load and call the Kraken model:

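```python
from transformers import AutoModelForCausalLM

device = "cuda:0"  # Use "cuda:0" on NVIDIA GPUs, "mps" on Apple Silicon Macs

# Load the model and its configuration.
# 'kraken_model' is a local directory or Hub repo id; trust_remote_code=True
# lets transformers run Kraken's custom modeling code.
model = AutoModelForCausalLM.from_pretrained('kraken_model', trust_remote_code=True)

# Call the Reasoning expert: the router selects it based on the prompt
messages = [
    {"role": "system", "content": "You are a helpful AI Assistant."},
    {"role": "user", "content": "Find the mass percentage of Ba in BaO"},
]

# Kraken exposes its tokenizer on the model object
tokenizer = model.tokenizer

# Render the chat messages into one prompt string, then tokenize it
input_text = tokenizer.apply_chat_template(messages, tokenize=False)
input_ids = tokenizer(input_text, return_tensors='pt').input_ids.to(device)

# Generate up to 250 tokens in total (prompt + completion) and decode the result
output_ids = model.generate(input_ids, max_length=250)
print(tokenizer.decode(output_ids[0], skip_special_tokens=True))
```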
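Because the example prompt has a known answer, it is worth checking the reasoning expert's output by hand. The sketch below computes the mass percentage of Ba in BaO from standard atomic weights (Ba ≈ 137.327 g/mol, O ≈ 15.999 g/mol); the helper name `mass_percentage` is our own, not part of Kraken.

```python
# Standard atomic weights in g/mol (rounded conventional values).
ATOMIC_MASS = {"Ba": 137.327, "O": 15.999}


def mass_percentage(element: str, formula: dict[str, int]) -> float:
    """Percent by mass of `element` in a compound given as {symbol: count}."""
    molar_mass = sum(ATOMIC_MASS[sym] * n for sym, n in formula.items())
    return 100 * ATOMIC_MASS[element] * formula[element] / molar_mass


pct = mass_percentage("Ba", {"Ba": 1, "O": 1})
print(f"Mass percentage of Ba in BaO: {pct:.2f}%")  # about 89.57%
```

If the model's answer lands far from roughly 89.6%, the routing or the generation settings are worth a second look.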

Expert Calls

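Now that you have loaded the Kraken model, let's explore calling different experts. You don't select an expert yourself: the router picks one from the prompt, so "calling" an expert means sending a prompt in that expert's domain.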

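```python
# Call the Function Calling expert
messages = [
    {"role": "system", "content": "You are a helpful assistant with access to the following functions."},
    {"role": "user", "content": "I need to calculate the area of a rectangle. The length is 5 and the width is 3."},
]

# Reuse the model, tokenizer, and device from the previous snippet
input_text = tokenizer.apply_chat_template(messages, tokenize=False)
input_ids = tokenizer(input_text, return_tensors='pt').input_ids.to(device)
output_ids = model.generate(input_ids, max_length=250)
print(tokenizer.decode(output_ids[0], skip_special_tokens=True))

# Similar steps follow for other experts (Python, SQL, etc.)
```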
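A function-calling expert typically answers with a structured call rather than a final number, and your own code then runs the function. The sketch below assumes the model emits a JSON object of the form `{"name": ..., "arguments": {...}}`; that output format, the `calculate_area` tool, and the `dispatch` helper are hypothetical, so adapt the parsing to whatever the expert actually returns.

```python
import json


def calculate_area(length: float, width: float) -> float:
    """Hypothetical local tool: area of a rectangle."""
    return length * width


# Registry of callable tools, keyed by the name the model is told about.
TOOLS = {"calculate_area": calculate_area}


def dispatch(model_output: str):
    """Parse an assumed JSON function call and run the matching tool."""
    call = json.loads(model_output)
    func = TOOLS[call["name"]]
    return func(**call["arguments"])


# Example of the kind of output we assume the expert might produce.
fake_output = '{"name": "calculate_area", "arguments": {"length": 5, "width": 3}}'
print(dispatch(fake_output))  # 15
```

Keeping tools in an explicit registry means the model can only trigger functions you have chosen to expose.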

Troubleshooting

If you encounter any issues while working with the Kraken model, consider the following troubleshooting tips:

- Ensure all dependencies, particularly the transformers library, are up to date.

- Verify that your device is correctly configured (e.g., CUDA for NVIDIA GPUs, MPS for Apple Silicon Macs).

- Check your model and tokenizer initialization parameters; errors often stem from incorrect paths or arguments, such as omitting trust_remote_code=True, which Kraken's custom model code requires.
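The first two checks can be scripted. This sketch uses the standard library to report the installed versions of `transformers` and `torch` (it does not contact PyPI, so compare against the latest releases yourself), and a hypothetical `pick_device` helper that falls back from CUDA to MPS to CPU if PyTorch is available. The package list is an assumption about a typical setup.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(packages=("transformers", "torch")) -> dict:
    """Map each package name to its installed version, or None if missing."""
    result = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


def pick_device() -> str:
    """Return "cuda:0", "mps", or "cpu" depending on what PyTorch reports."""
    try:
        import torch
    except ImportError:
        return "cpu"  # PyTorch missing: nothing else to probe
    if torch.cuda.is_available():
        return "cuda:0"
    mps = getattr(torch.backends, "mps", None)  # absent in older PyTorch builds
    if mps is not None and mps.is_available():
        return "mps"
    return "cpu"


for pkg, ver in installed_versions().items():
    print(f"{pkg}: {ver or 'NOT INSTALLED'}")
print(f"device: {pick_device()}")
```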

For more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.

Conclusion

At fxis.ai, we believe that advancements such as Kraken's expert routing are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.