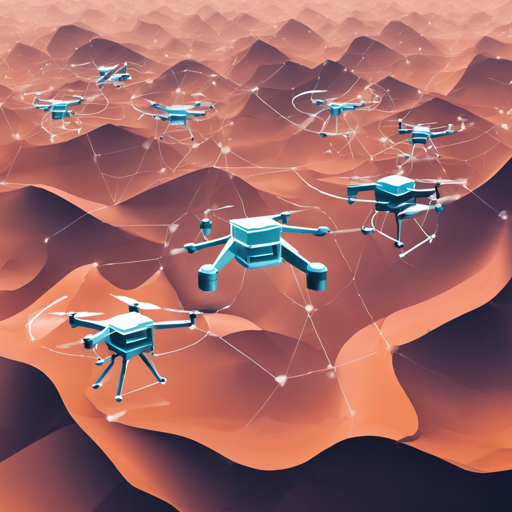

Welcome to our guide on improving the performance of UAV-assisted Mobile Edge Computing (MEC) systems! In this post, we explore how to implement a Deep Deterministic Policy Gradient (DDPG) algorithm that optimizes computation offloading with the help of Unmanned Aerial Vehicles (UAVs). The guide is designed to be user-friendly and ends with troubleshooting tips.

Understanding the Concept

Imagine you’re in a busy kitchen during the dinner rush, trying to prepare several dishes at once. As the chef, you can only handle a certain number of tasks at a time, and each dish represents a computing task. Now imagine you have a sous-chef (the UAV) who can take over some of the cooking. You need to decide how to split the work: some dishes stay with you (local execution), and others are handed off to the sous-chef (task offloading).

In our UAV-assisted MEC system, the UAV (sous-chef) relies on a DDPG-based computation offloading algorithm to decide how to handle tasks coming from multiple user equipments (UEs), which play the role of kitchen orders. The goal is to minimize the overall processing delay (shorten dinner service) while respecting constraints such as energy consumption (the limits of your stove and oven).
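To make the split between local execution and offloading concrete, here is a small sketch of one common partial-offloading delay model. It is an assumption for illustration, not necessarily the model used in the implementation: a fraction `ratio` of a task is uploaded to the UAV and computed there, the rest runs on the UE, both parts run in parallel, and the task's delay is the slower of the two. The function name, parameters and numbers are all hypothetical.

```python
def task_delay(ratio, data_bits, cycles_per_bit, f_local, f_uav, uplink_rate):
    """Processing delay (s) of one task under an assumed partial-offloading model.

    ratio: fraction of the task offloaded to the UAV, in [0, 1].
    f_local, f_uav: CPU frequencies in cycles/s; uplink_rate in bits/s.
    """
    if not 0.0 <= ratio <= 1.0:
        raise ValueError("ratio must be in [0, 1]")
    local_bits = (1.0 - ratio) * data_bits
    offload_bits = ratio * data_bits
    # Local part: computed on the UE's own CPU
    t_local = local_bits * cycles_per_bit / f_local
    # Offloaded part: upload time plus computation time on the UAV
    t_offload = offload_bits / uplink_rate + offload_bits * cycles_per_bit / f_uav
    # Both parts run in parallel, so the slower one determines the delay
    return max(t_local, t_offload)


# Hypothetical task: 1 Mbit, 1000 cycles/bit, 1 GHz UE CPU, 5 GHz UAV CPU, 10 Mbit/s uplink
params = dict(data_bits=1e6, cycles_per_bit=1000, f_local=1e9, f_uav=5e9, uplink_rate=1e7)
for r in (0.0, 0.5, 1.0):
    print(r, round(task_delay(r, **params), 3))
```

With these numbers the local delay is 1.0·(1 − ratio) s and the offloaded delay is 0.3·ratio s, so the two balance at ratio = 1/1.3 ≈ 0.77, giving a delay of about 0.23 s. In a real system such decisions interact with scheduling, channel conditions and energy limits, which is where a learned policy such as DDPG can help.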

Requirements

Before getting started, you’ll need the following:

- TensorFlow 1.x: the DDPG implementation targets the TensorFlow 1.x API, so make sure a 1.x release is installed.
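A quick way to confirm which TensorFlow release is installed, without importing TensorFlow itself, is to query the package metadata. This is a minimal sketch; the helper names `is_tf1` and `check_tensorflow` are hypothetical. If only TensorFlow 2.x is available, its `tf.compat.v1` module (together with `tf.compat.v1.disable_v2_behavior()`) exposes much of the 1.x API, but whether that is enough for this implementation is untested here.

```python
from importlib import metadata


def is_tf1(version: str) -> bool:
    """Return True if a TensorFlow version string belongs to the 1.x series."""
    return version.split(".")[0] == "1"


def check_tensorflow() -> str:
    """Report which TensorFlow release is installed, if any.

    Only the "tensorflow" distribution is checked; 1.x-era GPU builds were
    published separately as "tensorflow-gpu".
    """
    try:
        version = metadata.version("tensorflow")
    except metadata.PackageNotFoundError:
        return "TensorFlow is not installed"
    if is_tf1(version):
        return f"TensorFlow {version} (1.x) found"
    return f"TensorFlow {version} found; this is not a 1.x release"


print(check_tensorflow())
```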

Implementing the DDPG Algorithm

The implementation involves several steps: setting up the environment, defining the state and action spaces, and using the DDPG algorithm to optimize task offloading. Below is an overview of the high-level code structure:

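```python
# High-level structure only: UAVMECEnvironment and DDPGAgent stand for the
# environment and agent classes of the implementation, and state_dim,
# action_dim and num_episodes are values you choose for your own setup.

# Step 1: Set up simulation environment
environment = UAVMECEnvironment()

# Step 2: Initialize DDPG agent
agent = DDPGAgent(state_dim, action_dim)

# Step 3: Training loop for the agent
for episode in range(num_episodes):
    state = environment.reset()
    done = False
    while not done:
        # Actor chooses a continuous offloading action for the current state
        action = agent.select_action(state)
        next_state, reward, done = environment.step(action)
        # Store the transition (typically in a replay buffer)
        agent.remember(state, action, reward, next_state, done)
        # Update the actor and critic networks from stored experience
        agent.train()
        state = next_state
```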

In this example:

- Setting up the environment: Much like preparing your kitchen, this step involves defining the parameters of the UAV MEC system.

- Initializing the agent: This acts as your sous-chef, learning to take over tasks as needed.

- Training loop: Over many episodes, the sous-chef (agent) interacts with the kitchen (environment) and learns from the rewards it receives how to handle tasks more efficiently.
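The `agent.remember(...)` and `agent.train()` calls hide two mechanisms that DDPG relies on: an experience replay buffer and slowly updated target networks. The framework-free sketch below illustrates both under simplifying assumptions: parameters are plain lists of floats rather than TensorFlow variables, the default `gamma` and `tau` values are only illustrative, and the names `ReplayBuffer`, `critic_target` and `soft_update` are hypothetical rather than the implementation's API. The critic is regressed toward y = r + γ(1 − done)·Q′(s′, μ′(s′)), and each target parameter tracks its online counterpart via θ′ ← τθ + (1 − τ)θ′.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size store of (state, action, reward, next_state, done) transitions."""

    def __init__(self, capacity):
        self.buffer = deque(maxlen=capacity)  # oldest transitions are dropped first

    def remember(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform random mini-batch; reduces correlation between consecutive steps
        return random.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


def critic_target(reward, done, next_q, gamma=0.99):
    """TD target y = r + gamma * (1 - done) * Q'(s', mu'(s'))."""
    return reward + gamma * (1.0 - float(done)) * next_q


def soft_update(target_params, online_params, tau=0.01):
    """Polyak averaging: theta' <- tau * theta + (1 - tau) * theta'."""
    return [tau * w + (1.0 - tau) * w_t for w_t, w in zip(target_params, online_params)]


buffer = ReplayBuffer(capacity=3)
for t in range(5):
    buffer.remember(state=t, action=0.1 * t, reward=-1.0, next_state=t + 1, done=(t == 4))
print(len(buffer))  # capacity caps the size at 3
print(critic_target(-1.0, False, 2.0, gamma=0.9))
print(soft_update([0.0, 1.0], [1.0, 1.0], tau=0.5))
```

A small `tau` keeps the target networks changing slowly, which stabilizes the critic's regression targets at the cost of slower propagation of new estimates.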

Troubleshooting Tips

Even the best chefs face challenges in the kitchen! Here are some troubleshooting ideas to ensure your UAV offloading system runs smoothly:

- Issue: Slow convergence of the DDPG algorithm.
  - Tip: Tune hyperparameters such as the actor and critic learning rates, and adjust the exploration strategy (for example, the scale and decay of the exploration noise).

- Issue: High processing delay.
  - Tip: Review the task offloading strategy to make sure work is balanced between local execution and UAV processing, so that neither side becomes the bottleneck.

- General Queries: For more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.
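For the exploration tip, here is a minimal sketch of decaying Gaussian action noise, one common choice for DDPG. The original DDPG paper used an Ornstein-Uhlenbeck process instead, and the exploration scheme of this implementation is not stated here, so treat the class name `DecayingGaussianNoise` and its default values as hypothetical. Noise is added to each action component, clipped to the valid range, and its scale shrinks after every call.

```python
import random


class DecayingGaussianNoise:
    """Gaussian exploration noise whose scale shrinks geometrically over time."""

    def __init__(self, sigma=0.3, decay=0.999, sigma_min=0.01, seed=None):
        self.sigma = sigma
        self.decay = decay
        self.sigma_min = sigma_min
        self.rng = random.Random(seed)

    def __call__(self, action, low, high):
        """Perturb each action component and clip it to [low, high]."""
        noisy = [a + self.rng.gauss(0.0, self.sigma) for a in action]
        # Explore a bit less after every call, but never below sigma_min
        self.sigma = max(self.sigma_min, self.sigma * self.decay)
        return [min(high, max(low, a)) for a in noisy]


noise = DecayingGaussianNoise(sigma=0.5, decay=0.9, seed=0)
for step in range(3):
    # e.g. an offloading ratio that must stay in [0, 1]
    print(step, round(noise.sigma, 3), noise([0.5], low=0.0, high=1.0))
```

If learning stalls early, a larger initial `sigma` or slower `decay` explores more; if actions are still erratic late in training, a faster decay or lower `sigma_min` may help.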

Conclusion

By using DDPG for computation offloading in UAV-assisted MEC systems, you can reduce overall processing delay and improve system efficiency. Just remember that, as in cooking, mastering task management takes practice and continuous refinement!

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.

Further Reading

If you’re interested in learning more about our research, read our paper online. For additional references on multi-agent systems, check out our work on MADDPG, or see how to implement DRL algorithms using Ray.