Monocular depth estimation is an area of computer vision research that aims to recover accurate per-pixel depth from a single image. In this guide, we’ll explore how to run the method described in the paper High Quality Monocular Depth Estimation via Transfer Learning by Ibraheem Alhashim and Peter Wonka. This article will help you set up your environment, run inference with the pre-trained models, train the model, and evaluate the results.

Requirements

- Keras 2.2.4

- TensorFlow 1.13

- CUDA 10.0

- An NVIDIA Titan V or comparable GPU

- Operating systems: Windows 10 or Ubuntu 16

- Additional Packages: keras, pillow, matplotlib, scikit-learn, scikit-image, opencv-python, pydot, GraphViz, PyGLM, PySide2, pyopengl

With the software in place, make sure your hardware is up to the task, as training is computationally intensive. The minimum hardware tested for inference is an NVIDIA GeForce 940MX (laptop) or an NVIDIA GeForce GTX 950 (desktop).
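Because the Keras and TensorFlow versions are pinned, it can help to confirm what is actually installed before running anything. The following is a minimal sketch, not part of the original repository: the `REQUIRED` table only mirrors the two versions listed above, the helper names are hypothetical, and older interpreters are assumed to have the `importlib_metadata` backport available.

```python
try:
    from importlib import metadata  # standard library on Python 3.8+
except ImportError:  # older interpreters: assumes the importlib_metadata backport
    import importlib_metadata as metadata

# Versions copied from the requirements list. TensorFlow 1.x GPU builds are
# published as "tensorflow-gpu", so swap the key if that is what you installed.
REQUIRED = {"keras": "2.2.4", "tensorflow": "1.13"}


def version_matches(installed, required):
    """True when `installed` begins with the dotted components of `required`."""
    wanted = required.split(".")
    return installed.split(".")[: len(wanted)] == wanted


def installed_version(package):
    """Return the installed version string, or None if the package is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def check(required=REQUIRED, lookup=installed_version):
    """Map each package name to 'ok', 'mismatch', or 'missing'."""
    status = {}
    for name, wanted in required.items():
        found = lookup(name)
        if found is None:
            status[name] = "missing"
        elif version_matches(found, wanted):
            status[name] = "ok"
        else:
            status[name] = "mismatch"
    return status


if __name__ == "__main__":
    for name, state in check().items():
        print(name, state)
```

The `lookup` parameter exists only so the check can be exercised without the real packages installed; in normal use, calling `check()` with no arguments is enough.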

Getting Started

First, you need to download the pre-trained models. You can find them here:

Performing Depth Estimation

Now that your environment and the models are in place, let’s dive into running the code.

1. Run Inference

To see the depth estimation in action, run:

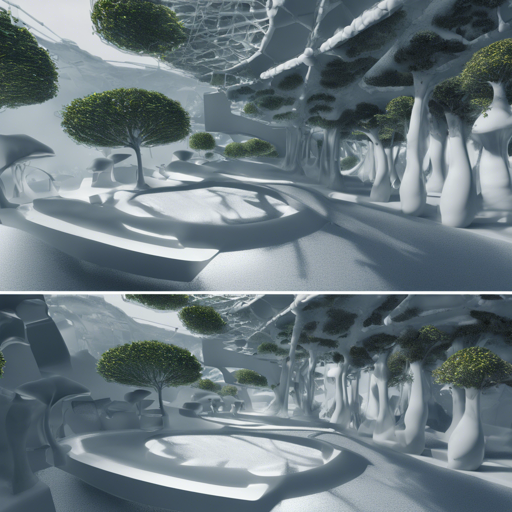

python test.pyOnce executed, you should see a montage of images alongside their estimated depth maps.
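If you want to look at a single depth map outside the montage, the raw values usually need rescaling to a displayable range first. Here is a minimal sketch of plain min-max normalization to 8-bit grey levels. It assumes the depth map is held as a nested list of floats and uses a hypothetical `to_grayscale` helper; it is not the code `test.py` uses.

```python
def to_grayscale(depth):
    """Rescale a 2D depth map (rows of floats) to integers in 0..255."""
    flat = [value for row in depth for value in row]
    lo, hi = min(flat), max(flat)
    span = hi - lo
    if span == 0:
        # A constant map has no contrast to stretch, so return all zeros.
        return [[0 for _ in row] for row in depth]
    return [[round(255 * (value - lo) / span) for value in row] for row in depth]


# Example: the nearest pixel maps to 0 and the farthest to 255.
example = to_grayscale([[0.0, 1.0], [5.0, 2.0]])
```

With array libraries the same rescaling is a one-line vectorized expression; the nested-list form is used here only to keep the sketch dependency-free.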

2. Running the 3D Demo

Experience your data in a whole new way with the 3D demo:

python demo.py

This requires the packages PyGLM, PySide2, and pyopengl.
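A 3D view of a depth map generally comes from back-projecting each pixel through a pinhole camera model. The following is a hedged sketch of that idea only: the `backproject` helper and the intrinsics `fx`, `fy`, `cx`, `cy` are illustrative assumptions, and `demo.py` may build its geometry differently.

```python
def backproject(depth, fx, fy, cx, cy):
    """Turn a 2D depth map into a list of (X, Y, Z) camera-space points.

    depth[v][u] is the distance along the optical axis at column u, row v.
    fx, fy are focal lengths in pixels; cx, cy is the principal point.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # skip invalid or missing depth values
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Example with made-up intrinsics; real values depend on the camera used.
cloud = backproject([[2.0, 0.0], [2.0, 4.0]], fx=2.0, fy=2.0, cx=0.0, cy=0.0)
```

The resulting point list is what a viewer would then render, typically coloured by the corresponding RGB pixel.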

Training Your Model

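If you want to train the model yourself instead of using the pre-trained weights, run:

python train.py --data nyu --gpus 4 --bs 8

This example selects the nyu dataset, requests four GPUs (--gpus 4), and sets a batch size of 8 (--bs 8). For reference, training takes around 24 hours on a single NVIDIA TITAN RTX GPU with a batch size of 8.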
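The flags in the training command can be read as dataset name, GPU count, and batch size. As a minimal sketch of how such a command line might be parsed (a hypothetical parser written for illustration, not copied from `train.py`; the defaults and the even split of the batch across GPUs are assumptions):

```python
import argparse


def build_parser():
    """Parser mirroring the three flags used in the training command."""
    parser = argparse.ArgumentParser(description="Illustrative training options")
    parser.add_argument("--data", default="nyu", help="dataset name")
    parser.add_argument("--gpus", type=int, default=1, help="number of GPUs")
    parser.add_argument("--bs", type=int, default=8, help="total batch size")
    return parser


def per_gpu_batch(batch_size, gpus):
    """Samples each GPU would see if the batch is split evenly (an assumption)."""
    if gpus < 1 or batch_size < 1 or batch_size % gpus != 0:
        raise ValueError("batch size must be a positive multiple of the GPU count")
    return batch_size // gpus


# Parse the same arguments as the command shown in this section.
args = build_parser().parse_args(["--data", "nyu", "--gpus", "4", "--bs", "8"])
share = per_gpu_batch(args.bs, args.gpus)
```

Under the even-split assumption, a batch of 8 on four GPUs leaves each device with two samples per step, which is worth keeping in mind when comparing against the single-GPU timing quoted above.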

Evaluation Process

To evaluate your model, first download the ground truth test data from here. Do not extract it. Then execute:

python evaluate.py

Understanding the Code with an Analogy

Think of the depth estimation code like a well-organized recipe for baking a cake:

- Ingredients: The required packages and pre-trained models act as ingredients. Each has its role in achieving the perfect depth map.

- Preparation: The training process is akin to mixing the batter. You need patience and the right tools (your GPU) to create a harmonious blend.

- Baking: Running the training and evaluation scripts is like putting your cake into the oven and waiting for it to rise. The result is a beautiful cake or, in this case, a depth map!
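Stepping outside the analogy for a moment, evaluation boils down to comparing predicted depths with ground truth pixel by pixel. The sketch below computes error measures that are commonly reported for depth estimation: threshold accuracy, absolute relative error, RMSE, and mean log10 error. It is an assumption that `evaluate.py` reports these; the function name and the flat-list inputs are chosen for illustration only.

```python
import math


def depth_metrics(truth, pred):
    """Compare two equal-length sequences of positive depth values."""
    if len(truth) != len(pred) or not truth:
        raise ValueError("inputs must be non-empty and the same length")
    n = len(truth)
    pairs = list(zip(truth, pred))
    # A pixel counts as accurate when max(t/p, p/t) falls below the threshold.
    ratios = [max(t / p, p / t) for t, p in pairs]
    return {
        "delta1": sum(r < 1.25 for r in ratios) / n,
        "delta2": sum(r < 1.25 ** 2 for r in ratios) / n,
        "delta3": sum(r < 1.25 ** 3 for r in ratios) / n,
        "abs_rel": sum(abs(t - p) / t for t, p in pairs) / n,
        "rmse": math.sqrt(sum((t - p) ** 2 for t, p in pairs) / n),
        "log10": sum(abs(math.log10(t) - math.log10(p)) for t, p in pairs) / n,
    }


# Example: two perfect pixels and one predicted at twice the true depth.
metrics = depth_metrics([1.0, 2.0, 4.0], [1.0, 2.0, 8.0])
```

Higher is better for the three threshold accuracies, while lower is better for the three error terms.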

Troubleshooting

If you run into issues, consider the following troubleshooting tips:

- Ensure all dependencies and packages are installed correctly. Use pip install to install any missing packages.

- If you experience performance issues, check that your GPU drivers are up to date. Outdated drivers can cause slow processing.


Conclusion

By following this guide, you should now have a solid foundation in monocular depth estimation using the transfer-learning approach of Alhashim and Wonka. You can explore further by experimenting with different datasets and adjusting training parameters such as the batch size.
