Welcome to this guide on setting up OD-Database, a web-crawling project that indexes file links and their basic metadata from open directories. It walks through installing the project with Docker, explains how the pieces fit together, and lists a few things to check when the setup does not come up cleanly.

What is OD-Database?

OD-Database indexes files found in open directories, typically misconfigured servers and mirrors of public services. A single crawler instance can fetch thousands of tasks and crawl hundreds of websites concurrently over both FTP and HTTP(S), and the central server can ingest thousands of new documents per second into Elasticsearch. At the time of writing, the project reports about 1.93 billion indexed files.

Getting Started with Installation

To get started with OD-Database, you need to follow these simple steps:

1. Clone the Repository

Open your terminal and run the following command:

git clone --recursive https://github.com/simon987/od-database
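
The `--recursive` flag asks git to fetch any submodules the repository references along with the main code. If the repository was cloned without it, the submodules can usually be pulled in afterwards. The helper below is a minimal sketch of that recovery step; the function name `init_submodules` is made up for this guide and is not part of OD-Database.

```shell
#!/usr/bin/env bash
# Hypothetical helper: fetch submodules for a repo cloned without --recursive.
init_submodules() {
    local repo_dir=${1:-.}

    # A repository that declares submodules has a .gitmodules file at its root.
    if [ ! -f "$repo_dir/.gitmodules" ]; then
        echo "no .gitmodules in $repo_dir, nothing to fetch"
        return 0
    fi

    # Standard git command: initialise and update all nested submodules.
    git -C "$repo_dir" submodule update --init --recursive
}
```

For example, `init_submodules od-database` run from the parent directory would fill in any submodules that the first clone skipped.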

2. Navigate into the Directory

Change into the OD-Database directory:

cd od-database

3. Create Necessary Directories

Create the required directories to store database and Elasticsearch data:

mkdir oddb_pg_data tt_pg_data es_data wsb_data
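
If the setup is scripted or rerun, `mkdir -p` is a more forgiving variant because it does not fail when a directory already exists. The following is a minimal sketch that uses the same four directory names as the command above; the function name `make_data_dirs` is only illustrative.

```shell
#!/usr/bin/env bash
# Illustrative helper: create the four data directories idempotently.
make_data_dirs() {
    local base=${1:-.} dir
    for dir in oddb_pg_data tt_pg_data es_data wsb_data; do
        # -p: create parents as needed and succeed if the directory exists
        mkdir -p "$base/$dir" || return 1
    done
    # List what now exists so a typo or permission problem shows up early
    ls -d "$base"/*_data
}
```

Calling `make_data_dirs` with no argument works on the current directory, so it can be run from inside od-database as often as needed.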

4. Start the Services with Docker Compose

Finally, run the following command from the od-database directory to start the services:

docker-compose up
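
Run this way, `docker-compose up` stays in the foreground and streams logs until it is interrupted. For a longer-running instance it can be more convenient to start the stack detached and inspect it separately. The sketch below wraps the standard `up -d`, `ps`, `logs`, and `down` subcommands; the function names are hypothetical, and it assumes it is called from the od-database directory.

```shell
#!/usr/bin/env bash
# Hypothetical wrappers around standard docker-compose subcommands.

# Start all services in the background, then show their state.
start_stack() {
    docker-compose up -d && docker-compose ps
}

# Print the most recent log lines of every service (default: 50 per service).
recent_logs() {
    docker-compose logs --tail="${1:-50}"
}

# Stop and remove the containers; directories on the host are left alone.
stop_stack() {
    docker-compose down
}
```

A typical session would be `start_stack`, then `recent_logs 100` to look for startup errors, and `stop_stack` when finished.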

Understanding the Architecture

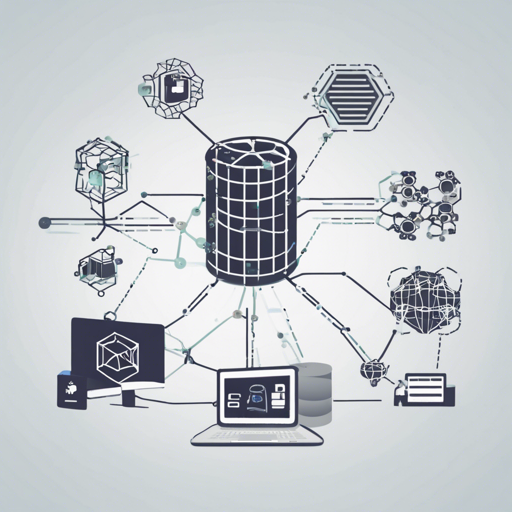

OD-Database follows a central-server and worker model. The central server hands out tasks to crawler instances; each crawler fetches tasks, crawls the assigned websites, and sends the results back, where they are indexed for search. This split keeps crawling scalable across many instances and makes the collected data quick to query and serve.
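
The dispatch, crawl, and report cycle can be imitated on a single machine to make the division of labour concrete. The script below is a toy model only: it uses a directory of files as the task queue and two background shell functions as workers, and it does not reflect how OD-Database actually implements its server or crawler. The site names and the result format are invented.

```shell
#!/usr/bin/env bash
# Toy model of the server/worker cycle. Not OD-Database code; all names invented.
work=$(mktemp -d)
mkdir "$work/queue" "$work/claimed" "$work/results"

# "Central server": publish one task file per site to crawl.
for site in ftp.example.org www.example.com mirror.example.net; do
    : > "$work/queue/$site"
done

# "Crawler": claim tasks, pretend to crawl, and report a result.
crawl_worker() {
    local id=$1 task name
    for task in "$work/queue"/*; do
        name=${task##*/}
        # Renaming within one filesystem is atomic, so each task has one winner.
        if mv "$task" "$work/claimed/$name" 2>/dev/null; then
            echo "site=$name worker=$id" > "$work/results/$name"
        fi
    done
}

crawl_worker 1 &
crawl_worker 2 &
wait   # block until both workers have finished

# "Central server": collect ("index") every reported result.
indexed=$(find "$work/results" -type f | wc -l | tr -d ' ')
echo "indexed $indexed results"
```

Running it prints `indexed 3 results`: every task is claimed exactly once, whichever worker reaches it first, which is the property a real task dispatcher also has to guarantee.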

Running the Crawl Server

The original Python crawler has been discontinued. Use the newer Go implementation of the crawler instead, which is linked from the project repository.

Troubleshooting Tips

If you encounter any issues, consider the following troubleshooting steps:

- Ensure Docker is installed and running correctly on your system.

- Double-check that all directories were created successfully.

- Check the logs of your Docker services for errors that occurred while docker-compose up was running.
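
The first two checks in the list above can be scripted. The sketch below is one way to do that; the function names are hypothetical, and because it relies only on `command -v` and a directory test, it says nothing about whether the Docker daemon itself is healthy.

```shell
#!/usr/bin/env bash
# Hypothetical preflight checks for the setup steps in this guide.

# Succeeds only if the named program is on PATH.
require_cmd() {
    command -v "$1" >/dev/null 2>&1 || { echo "missing command: $1"; return 1; }
}

# Reports every expected data directory that is absent under $1 (default: .).
check_data_dirs() {
    local base=${1:-.} dir missing=0
    for dir in oddb_pg_data tt_pg_data es_data wsb_data; do
        [ -d "$base/$dir" ] || { echo "missing directory: $dir"; missing=1; }
    done
    return "$missing"
}

# Runs all checks and returns non-zero if any of them failed.
preflight() {
    local rc=0
    require_cmd git || rc=1
    require_cmd docker || rc=1
    require_cmd docker-compose || rc=1
    check_data_dirs "${1:-.}" || rc=1
    return "$rc"
}
```

Running `preflight` from inside the od-database directory prints one line per problem it finds and stays silent when everything it checks is in place.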

Conclusion

By following this guide, you should have a functional instance of the OD-Database up and running. Happy crawling!