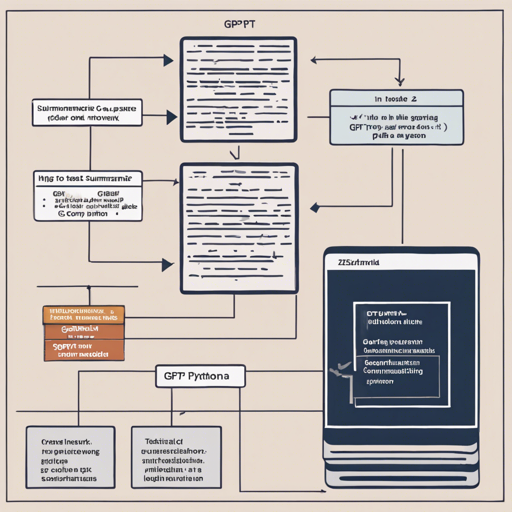

In today’s fast-paced world, the ability to distill information into succinct summaries is invaluable. Language models make this practical: this guide walks you through summarizing a document with a GPT-2 model fine-tuned for summarization, using the Hugging Face transformers library, and covers how to troubleshoot common issues along the way.

Getting Started

Before diving into the code, set up a Python environment with two libraries: `transformers`, which provides the GPT-2 model and tokenizer classes, and PyTorch, which the code uses for its tensors (`return_tensors='pt'`). A CUDA-capable GPU is recommended for running the model.
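A quick way to confirm both packages are present is to query their installed versions with the standard library's `importlib.metadata` (Python 3.8+). The helper name `installed_version` is our own, not part of either library. If a package is missing, `pip install transformers torch` installs both.

```python
from importlib import metadata
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of `package`, or None if it is not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    # transformers provides the model classes; torch backs return_tensors="pt".
    for pkg in ("transformers", "torch"):
        print(f"{pkg}: {installed_version(pkg) or 'not installed'}")
```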

Loading the Model

To load the model based on the GPT-2 architecture, you’ll use the following code:

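```python
import torch
from transformers import GPT2LMHeadModel, GPT2TokenizerFast

# Use the GPU when one is available; otherwise fall back to the CPU.
device = "cuda" if torch.cuda.is_available() else "cpu"

model = GPT2LMHeadModel.from_pretrained("philippelaban/summary_loop10").to(device)
tokenizer = GPT2TokenizerFast.from_pretrained("philippelaban/summary_loop10")
```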

Inputting Your Document

Now that the model is loaded, you need to provide the text you want to summarize. Here’s an example document about rockfalls on Mars:

```python
document = """Bouncing Boulders Point to Quakes on Mars.

A preponderance of boulder tracks on the red planet may be evidence of recent seismic activity.

If a rock falls on Mars, and no one is there to see it, does it leave a trace? Yes, and it's a beautiful herringbone-like pattern, new research reveals."""
```

Tokenizing Your Document

Next, tokenize the document, that is, convert it into the token ids the model expects. Documents longer than 300 tokens are truncated to fit:

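```python
# Convert the text to token ids (a PyTorch tensor), keeping at most 300 tokens,
# and place them on the same device as the model.
tokenized_document = tokenizer([document], max_length=300, truncation=True, return_tensors="pt")["input_ids"].to(model.device)
```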
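To see what `max_length=300, truncation=True` does, here is a toy sketch that uses whitespace splitting as a stand-in for GPT-2's byte-level BPE tokenizer. This is a simplification (real token counts are usually higher than word counts), and `toy_tokenize` is a hypothetical helper, but the truncation behavior is the same idea: everything past the first `max_length` tokens is dropped before the model sees it.

```python
from typing import List


def toy_tokenize(text: str, max_length: int, truncation: bool = True) -> List[str]:
    """Whitespace 'tokenizer' used only to illustrate truncation."""
    tokens = text.split()
    if truncation:
        # Keep the first max_length tokens; the rest is silently discarded.
        tokens = tokens[:max_length]
    return tokens


doc = "If a rock falls on Mars and no one is there to see it"
print(toy_tokenize(doc, max_length=5))
```

Because Hugging Face tokenizers truncate from the right by default, only the opening of a long document contributes to the summary.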

Generating the Summary

With your document tokenized, you’re ready to generate a summary. Here’s the code you’ll use:

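```python
input_shape = tokenized_document.shape  # (batch_size, prompt_length)

outputs = model.generate(
    tokenized_document,
    do_sample=False,                      # deterministic decoding, no sampling
    num_beams=4,                          # beam search with 4 beams
    num_return_sequences=4,               # return every beam, not just the best one
    no_repeat_ngram_size=6,               # never repeat any 6-token sequence
    max_length=500,                       # counts the prompt tokens as well as the summary
    pad_token_id=tokenizer.eos_token_id,  # GPT-2 has no pad token; reuse end-of-text
    return_dict_in_generate=True,         # return an output object, not a bare tensor
    output_scores=True,                   # needed for outputs.sequences_scores
)
```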
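`no_repeat_ngram_size=6` forbids any 6-token sequence from appearing twice. The sketch below reimplements the idea in plain Python to show what gets blocked at each step: look at the last n-1 tokens and ban every token that previously followed that same prefix. It is a simplified illustration with a hypothetical helper name, not the transformers source. One consequence worth knowing: with a decoder-only model the prompt is part of the sequence being checked, so, as far as we can tell from how transformers applies this setting, the summary also cannot copy any 6-token span of the document verbatim.

```python
from typing import Dict, List, Set, Tuple


def banned_next_tokens(tokens: List[int], n: int) -> Set[int]:
    """Return the token ids that would complete an n-gram already in `tokens`."""
    # Too short to complete any n-gram yet.
    if n <= 0 or len(tokens) + 1 < n:
        return set()
    # Map each (n-1)-token prefix to the set of tokens that followed it.
    followers: Dict[Tuple[int, ...], Set[int]] = {}
    for i in range(len(tokens) - n + 1):
        prefix = tuple(tokens[i:i + n - 1])
        followers.setdefault(prefix, set()).add(tokens[i + n - 1])
    # The next token extends the current (n-1)-token suffix.
    suffix = tuple(tokens[len(tokens) - n + 1:])
    return followers.get(suffix, set())


# With n=3, the sequence 1 2 3 1 2 may not continue with 3 (that would repeat "1 2 3").
print(banned_next_tokens([1, 2, 3, 1, 2], n=3))
```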

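The returned sequences contain the prompt followed by the generated text, so drop the prompt tokens, decode each candidate, and keep only the text before the end-of-text marker:

```python
# GPT-2 is decoder-only: each sequence is the prompt followed by the summary,
# so drop the first input_shape[1] tokens to keep only the summary.
candidate_sequences = outputs.sequences[:, input_shape[1]:]
# One length-normalized log-probability per returned beam (higher is better).
candidate_scores = outputs.sequences_scores.tolist()

# Assumes summaries end with GPT-2's end-of-text token ("<|endoftext|>");
# change end_marker if the model emits a different marker.
end_marker = tokenizer.eos_token

for candidate_tokens, score in zip(candidate_sequences, candidate_scores):
    summary = tokenizer.decode(candidate_tokens)
    cut = summary.find(end_marker)  # -1 if the marker never appears
    if cut != -1:
        summary = summary[:cut]
    print(f"[Score: {score:.3f}] {summary.strip()}")
```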
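Stripped of tensors, the post-processing step boils down to two operations: drop the prompt tokens, then cut the decoded text at an end marker. The sketch below uses a hypothetical toy vocabulary and `toy_decode` function in place of the real tokenizer, and uses `str.find` so that a missing marker leaves the text intact instead of raising `ValueError` the way `str.index` would.

```python
from typing import Callable, Sequence


def extract_summary(sequence: Sequence[int], prompt_len: int,
                    decode: Callable[[Sequence[int]], str],
                    end_marker: str) -> str:
    """Drop the prompt tokens, decode the rest, and cut at the first end marker."""
    text = decode(sequence[prompt_len:])
    cut = text.find(end_marker)  # -1 when the marker never appears
    return text[:cut] if cut != -1 else text


# Hypothetical toy vocabulary standing in for the real tokenizer.
TOY_VOCAB = {0: "Boulders", 1: " bounce", 2: " on Mars.", 3: " Quakes",
             4: " on Mars", 5: "<|endoftext|>"}


def toy_decode(ids: Sequence[int]) -> str:
    return "".join(TOY_VOCAB[i] for i in ids)


# The first 3 ids are the "prompt"; the rest is the generated continuation,
# padded with end-of-text ids (as when pad_token_id is set to the end-of-text id).
print(extract_summary([0, 1, 2, 3, 4, 5, 5], prompt_len=3,
                      decode=toy_decode, end_marker="<|endoftext|>"))
```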

Understanding the Code Using an Analogy

Think of the process of summarizing a document like preparing a dish in a kitchen. First, you gather your ingredients (the document text), then you chop them into manageable pieces (tokenization). Next, you combine and cook these ingredients together (model generation) to create a dish that is both flavorful and simpler than the original ingredients (the summary). Just like cooking, each step is crucial to a good outcome!

Example Output

After running the summary generation code, the output may look something like this:

```text
[Score: -0.084] Here's what you need to know about rockfalls
[Score: -0.087] Here's what you need to know about these tracks
[Score: -0.091] Here's what we know so far about these tracks
[Score: -0.101] Here's what you need to know about rockfall
```
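The scores are the `sequences_scores` returned by beam search: roughly, each candidate's summed token log-probabilities divided by its length raised to `length_penalty` (1.0 by default). Exactly which length transformers uses for decoder-only models (with or without the prompt) may differ between releases, so treat the sketch below, with our own helper names, as an approximation. Under that assumption, `exp(score)` is a rough geometric-mean probability per token, which makes the nearly tied candidates easier to compare.

```python
import math
from typing import Sequence


def length_normalized_score(token_logprobs: Sequence[float],
                            length_penalty: float = 1.0) -> float:
    """Summed log-probability divided by length ** length_penalty (simplified)."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    return sum(token_logprobs) / (len(token_logprobs) ** length_penalty)


def mean_token_probability(score: float) -> float:
    """With length_penalty=1.0, exp(score) is the geometric-mean token probability."""
    return math.exp(score)


for score in (-0.084, -0.087, -0.091, -0.101):
    print(f"{score:.3f} -> {mean_token_probability(score):.3f}")
```

All four candidates come out above 0.9 per token, so the model rates them as nearly equally likely, which is consistent with how similar their wording is.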

Troubleshooting Common Issues

If you encounter issues during this process, here are a few troubleshooting tips:

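- Make sure a recent version of the `transformers` library is installed, along with PyTorch; the generation code relies on `return_dict_in_generate=True` and `outputs.sequences_scores`, which older releases may not support.
- If you move tensors to the GPU with `.cuda()`, check that PyTorch can see a CUDA device (`torch.cuda.is_available()` should return `True`), and keep the model and the input ids on the same device; otherwise `generate` fails with a device-mismatch error.
- If the model does not load, verify that the model name is spelled exactly as it appears on the Hugging Face Hub.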

For more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.

Conclusion

Leveraging AI for summarizing text can save time, helping you focus on more critical tasks. Happy summarizing!

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.

Further Information

To go further, check out the project's GitHub repository, which provides the scoring function, training scripts, and examples.