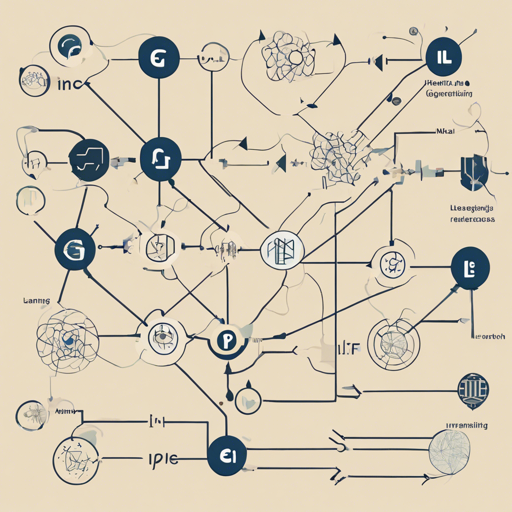

Natural Language Inference (NLI) is an area of natural language processing (NLP) in which a model must decide whether a hypothesis is entailed by, contradicts, or is neutral with respect to a premise. Generalization is crucial here: a model can score well on one dataset by picking up simple heuristics specific to that dataset and still fail on data drawn from elsewhere. In this article, we explore generalization in NLI, guided by the paper "Generalization in NLI: Ways (Not) To Go Beyond Simple Heuristics" by Prajjwal Bhargava, Aleksandr Drozd, and Anna Rogers.

Understanding Generalization

Think of generalization in NLI like teaching a dog to fetch. If you only ever throw the ball in the backyard, your dog may become great at fetching it from that one spot. But if you take the dog to a park and throw the ball in different directions, will it still bring the ball back? Generalization means that your dog (or, in this case, the NLI model) responds correctly in new situations, not only in the ones it was trained on. This is critical for robust AI solutions.

Key Points from the Paper

- Avoiding Overfitting: One major concern highlighted in the paper is overfitting to the training data, which leads to poor performance on unseen data.

- Heuristic Limitations: A model that relies on simple heuristics, such as treating word overlap between premise and hypothesis as a sign of entailment, can look accurate on one dataset and fail on examples where the heuristic does not hold. The authors emphasize that more sophisticated approaches are necessary for better generalization.

- Data Diversity: Using diverse datasets for training can significantly improve the model’s ability to generalize across different contexts.
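To make the heuristic problem concrete, here is a minimal sketch of a word-overlap "classifier". It is an illustration only, not code from the paper: the function name `overlap_heuristic`, the crude tokenization, and the two example sentence pairs are assumptions chosen for this article. The rule predicts entailment whenever every hypothesis word also appears in the premise.

```python
def tokenize(sentence: str) -> set:
    """Lowercase, drop the final period, and split on whitespace (deliberately crude)."""
    return set(sentence.lower().rstrip(".").split())


def overlap_heuristic(premise: str, hypothesis: str) -> str:
    """Predict 'entailment' when every hypothesis word also occurs in the premise."""
    if tokenize(hypothesis) <= tokenize(premise):
        return "entailment"
    return "non-entailment"


# Hypothetical examples: (premise, hypothesis, gold label).
examples = [
    ("The doctor near the actor danced.", "The doctor danced.", "entailment"),
    ("The doctor was paid by the actor.", "The doctor paid the actor.", "non-entailment"),
]

for premise, hypothesis, gold in examples:
    predicted = overlap_heuristic(premise, hypothesis)
    status = "correct" if predicted == gold else "WRONG"
    print(f"{status}: predicted={predicted}, gold={gold} | {hypothesis}")
```

The rule gets the first pair right and the second wrong: both hypotheses reuse only words from their premises, but in the second pair the passive construction reverses who paid whom. A model that has quietly learned a rule like this can score well on data where overlap and entailment happen to coincide, and then fail on data where they do not.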

Practical Steps to Improve Generalization in NLI Models

- Diverse Datasets: Ensure that your training dataset includes a wide variety of examples. This reduces the risk that your model becomes biased towards one specific type of data.

- Regularization Techniques: Apply techniques such as dropout or L2 regularization to reduce overfitting.

- Continuous Learning: Incorporate mechanisms for the model to learn and adapt to new data over time, improving its capability to generalize.
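The two regularization techniques named above can be sketched in a few lines of plain Python. This is a simplified illustration under stated assumptions, not how any particular framework implements them: the helper names `inverted_dropout` and `sgd_step_with_l2` are made up for this article, and in practice you would normally use the dropout layer and weight-decay option of your deep learning library.

```python
import random


def inverted_dropout(activations, drop_prob, rng):
    """Zero each activation with probability drop_prob and rescale the survivors.

    Dividing by keep_prob keeps the expected value of each activation unchanged,
    so nothing needs to be rescaled at inference time (when dropout is off).
    """
    if not 0.0 <= drop_prob < 1.0:
        raise ValueError("drop_prob must be in [0, 1)")
    keep_prob = 1.0 - drop_prob
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]


def sgd_step_with_l2(weights, grads, lr, l2_strength):
    """One gradient step on loss + (l2_strength / 2) * sum(w ** 2).

    The penalty adds l2_strength * w to each gradient, pulling weights toward zero.
    """
    return [w - lr * (g + l2_strength * w) for w, g in zip(weights, grads)]


rng = random.Random(0)  # fixed seed so the run is repeatable
hidden = [0.5, -1.0, 2.0, 0.25]
print(inverted_dropout(hidden, drop_prob=0.5, rng=rng))
print(sgd_step_with_l2([1.0, -2.0], grads=[0.0, 0.0], lr=0.1, l2_strength=0.5))
```

With zero gradients, the second call shows the effect of the penalty in isolation: each weight shrinks slightly toward zero. Dropout is applied only during training; at evaluation time the activations pass through unchanged.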

Troubleshooting Common Issues

If you encounter challenges while implementing NLI models, consider these troubleshooting ideas:

- Model Performance: If your model performs well on the training data but poorly on validation data, it is probably overfitting. Review your training setup (for example, the number of epochs and the model size) and consider using regularization techniques.

- Data Imbalance: If your datasets are imbalanced, consider techniques like re-sampling or data augmentation to provide a more balanced input.

- Complexity vs. Interpretability: If your model is too complex, it may be difficult to interpret. Aim for a balance between model complexity and its ability to generalize.
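The first two checks above are easy to script. The sketch below is one possible approach, not a prescribed method: `generalization_gap` and `oversample_to_balance` are hypothetical helper names, the accuracy figures are invented, and random oversampling is only one of several re-sampling strategies.

```python
import random
from collections import Counter, defaultdict


def generalization_gap(train_accuracy, validation_accuracy):
    """Difference between training and validation accuracy; a large gap suggests overfitting."""
    return train_accuracy - validation_accuracy


def oversample_to_balance(examples, rng):
    """Randomly duplicate minority-label examples until every label is equally frequent.

    `examples` is a list of (premise, hypothesis, label) tuples.
    """
    if not examples:
        return []
    by_label = defaultdict(list)
    for example in examples:
        by_label[example[2]].append(example)
    target = max(len(group) for group in by_label.values())
    balanced = []
    for group in by_label.values():
        balanced.extend(group)
        # Draw extra copies with replacement to reach the size of the largest class.
        balanced.extend(rng.choices(group, k=target - len(group)))
    rng.shuffle(balanced)
    return balanced


# Made-up numbers and data, for illustration only.
print(f"gap: {generalization_gap(0.95, 0.70):.2f}")

train = [
    ("A man is sleeping.", "A person is asleep.", "entailment"),
    ("A dog runs outside.", "An animal is outdoors.", "entailment"),
    ("Two kids play chess.", "Children are playing a game.", "entailment"),
    ("A woman is cooking.", "Nobody is cooking.", "contradiction"),
]
balanced = oversample_to_balance(train, random.Random(0))
print(Counter(label for _, _, label in balanced))
```

Apply re-sampling to the training split only. Duplicating examples in the validation or test data would distort the very scores you use to detect overfitting.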

Conclusion

Generalization in NLI is a vital part of developing effective AI models. By avoiding common pitfalls such as overfitting and reliance on dataset-specific heuristics, and by learning from existing research, we can build models that hold up on data they have not seen before. Whether you are a seasoned practitioner or a newcomer to the field, focusing on generalization can help you achieve better results in natural language tasks.
