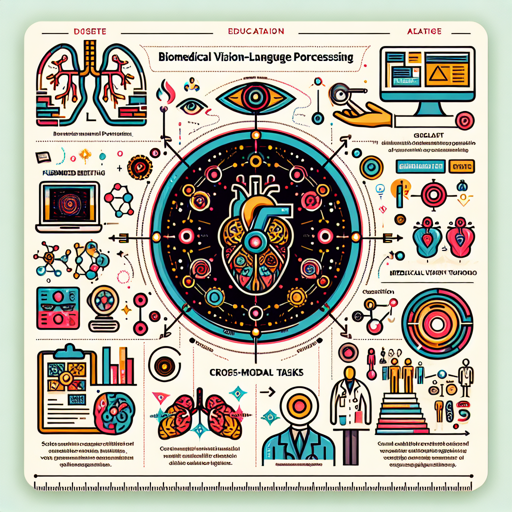

In biomedical research, linking images to the text that describes them underpins tasks such as retrieval and classification. BiomedCLIP addresses this by pairing a PubMedBERT text encoder with a Vision Transformer (ViT) image encoder. Today, we're diving into a user-friendly guide on how to use this model for your biomedical vision-language tasks.

What is BiomedCLIP?

BiomedCLIP is a biomedical vision-language foundation model pretrained on PMC-15M, a dataset of 15 million figure-caption pairs extracted from PubMed Central articles. It is trained with contrastive learning, which pulls matching figure-caption pairs together in a shared embedding space and pushes mismatched pairs apart. The model reports state-of-the-art results on tasks such as cross-modal retrieval, image classification, and visual question answering.
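The contrastive objective described above can be sketched in a few lines of plain Python. This is a minimal, generic CLIP-style sketch (a symmetric InfoNCE loss), not BiomedCLIP's actual training code. The function names, the toy 2-D embeddings, and the fixed temperature of 0.07 are assumptions for illustration; CLIP-style models typically learn the temperature during training. Within a batch, image *i* and caption *i* form the positive pair, and every other caption acts as a negative.

```python
import math
from typing import List, Sequence


def l2_normalize(vec: Sequence[float]) -> List[float]:
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def cross_entropy(logits: Sequence[float], target: int) -> float:
    """Cross-entropy of one row of logits against the index of the correct entry."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def clip_contrastive_loss(
    image_embs: Sequence[Sequence[float]],
    text_embs: Sequence[Sequence[float]],
    temperature: float = 0.07,  # assumed fixed value; real models usually learn it
) -> float:
    """Symmetric InfoNCE loss for a batch where image i is paired with caption i."""
    imgs = [l2_normalize(v) for v in image_embs]
    txts = [l2_normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j] = cosine(image i, caption j) / temperature
    logits = [
        [sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature for j in range(n)]
        for i in range(n)
    ]
    # Image-to-text: each image should pick out its own caption (rows).
    loss_i2t = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    # Text-to-image: each caption should pick out its own image (columns).
    loss_t2i = sum(
        cross_entropy([logits[i][j] for i in range(n)], j) for j in range(n)
    ) / n
    return (loss_i2t + loss_t2i) / 2


images = [[1.0, 0.0], [0.0, 1.0]]
matched = [[0.9, 0.1], [0.1, 0.9]]  # caption i describes image i
swapped = [[0.1, 0.9], [0.9, 0.1]]  # captions assigned to the wrong images
print(clip_contrastive_loss(images, matched) < clip_contrastive_loss(images, swapped))  # True
```

Matched captions give a lower loss than swapped ones. During training, this signal is what pulls correct figure-caption pairs together.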

Getting Started with BiomedCLIP

Using BiomedCLIP for zero-shot biomedical image classification is simpler than you might think. Here is the basic workflow:

- Step 1: Start with the [example notebook](https://aka.ms/biomedclip-example-notebook), which provides an in-depth walkthrough of loading and using the model.

- Step 2: Prepare your images, for example histopathology slides or chest X-rays.

- Step 3: Define a set of candidate text labels that describe the classes you want to distinguish, such as "adenocarcinoma histopathology" or "normal chest x-ray".

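- Step 4: Run the model, which compares each image with every candidate label. Then review the predicted probabilities to see which label fits best.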
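Steps 3 and 4 amount to zero-shot classification. Each candidate label becomes a text prompt, and both the image and the prompts are embedded. The label whose prompt is most similar to the image wins.

The sketch below assumes you already have embeddings. Several pieces are assumptions or hypothetical:

- `encode_text` stands in for the model's text encoder.
- The toy vectors are made up.
- The prompt template `"this is a photo of "` and the scale factor of 100 are assumptions. With the real model, the scale would come from its learned logit scale.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def softmax(scores: List[float]) -> List[float]:
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def zero_shot_classify(
    image_emb: Sequence[float],
    labels: List[str],
    encode_text: Callable[[str], Sequence[float]],  # hypothetical text-encoder stand-in
    template: str = "this is a photo of ",          # assumed prompt wording
    logit_scale: float = 100.0,                     # assumed; the real model learns this
) -> List[Tuple[str, float]]:
    """Rank candidate labels for one image, most likely first."""
    prompts = [template + label for label in labels]
    scores = [logit_scale * cosine(image_emb, encode_text(p)) for p in prompts]
    probs = softmax(scores)
    return sorted(zip(labels, probs), key=lambda pair: pair[1], reverse=True)


# Toy embeddings standing in for real encoder outputs (made-up numbers).
toy_text_embs: Dict[str, List[float]] = {
    "this is a photo of adenocarcinoma histopathology": [0.9, 0.1, 0.2],
    "this is a photo of normal chest x-ray": [0.1, 0.8, 0.3],
}
image_emb = [0.85, 0.15, 0.25]
labels = ["adenocarcinoma histopathology", "normal chest x-ray"]

ranking = zero_shot_classify(image_emb, labels, toy_text_embs.__getitem__)
for label, prob in ranking:
    print(f"{label}: {prob:.3f}")
```

Scaling the cosine similarities before the softmax sharpens the distribution. Raw cosines lie in [-1, 1], so without scaling the probabilities would come out nearly uniform.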

Understanding the Model with an Analogy

Think of BiomedCLIP as a skilled interpreter at a multilingual conference. The images are presentations in one language, and the text is commentary in another. The interpreter renders both into a common language so they can be compared directly. In the same way, BiomedCLIP's image encoder (the Vision Transformer) and text encoder (PubMedBERT) each map their input into a shared embedding space, where an image and a caption describing the same thing land close together. Just as the interpreter lets both parties understand each other, this shared space lets BiomedCLIP match biomedical images with the text that describes them.
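Under this analogy, matching an image to text means comparing vectors in a shared embedding space. The sketch below illustrates image-to-text retrieval. It normalizes the vectors and ranks captions by cosine similarity to an image. It is a generic illustration, not BiomedCLIP's code. The captions and their 3-D embeddings are made up, and the `retrieve` helper is hypothetical. Real embeddings would come from the model's encoders and have many more dimensions.

```python
import math
from typing import Dict, List, Sequence, Tuple


def normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def retrieve(
    query_emb: Sequence[float],
    candidates: Dict[str, Sequence[float]],
    top_k: int = 3,
) -> List[Tuple[str, float]]:
    """Rank candidates (e.g. caption embeddings) by similarity to a query (e.g. an image)."""
    q = normalize(query_emb)
    scored = [
        (name, sum(a * b for a, b in zip(q, normalize(emb))))
        for name, emb in candidates.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Made-up caption embeddings standing in for text-encoder outputs.
caption_embs = {
    "Chest radiograph with clear lung fields": [0.1, 0.95, 0.05],
    "H&E-stained section of adenocarcinoma": [0.9, 0.1, 0.3],
    "Axial brain MRI": [0.1, 0.2, 0.95],
}
image_emb = [0.2, 0.9, 0.1]  # made-up image embedding (a chest X-ray, say)

result = retrieve(image_emb, caption_embs, top_k=2)
for caption, score in result:
    print(f"{score:.3f}  {caption}")
```

Because both modalities live in the same space, the same function also works in the other direction: a text query ranked against image embeddings.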

Troubleshooting Tips

If you encounter any issues during your workflow, consider the following troubleshooting ideas:

- Issue 1: Model not running? Make sure your environment matches the requirements listed in the example notebook.

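- Issue 2: Poor classification results? Check that your candidate labels actually describe the kind of images you are classifying (for example, histopathology labels for histopathology slides). When evaluating, also confirm that each image is paired with the right expected label.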

- Issue 3: Conflicting outputs? Restart the runtime and clear previous outputs, because stale state from earlier runs can cause inconsistent results.


Conclusion

At fxis.ai, we believe that advances like BiomedCLIP are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team continually explores new methodologies to push the envelope in artificial intelligence, so that our clients benefit from the latest technological innovations.