Welcome to the exciting world of AI, where images meet advanced language processing! Today, we’ll explore how to use the BLIP-2 model paired with Flan T5-xxl for image captioning and visual question answering. We’ll break the process down step by step and provide troubleshooting tips for a smooth experience.

Understanding BLIP-2

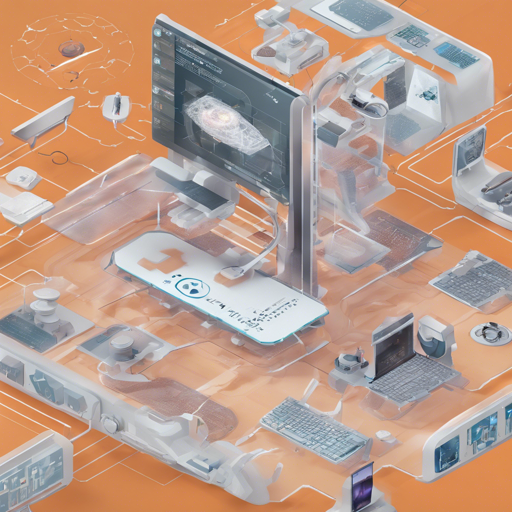

BLIP-2 consists of three key components: a CLIP-like image encoder, a Querying Transformer (Q-Former), and a large language model. To help you visualize this:

- Image Encoder: Think of it as a digital camera that captures the content of an image and turns it into visual features.

- Querying Transformer: Consider this your intelligent assistant. It uses a small set of learned queries to pull the most relevant information out of the image features and hands it to the language model, connecting the dots between images and language.

- Large Language Model (Flan T5-xxl): Imagine a library filled with knowledge, ready to write a caption or an answer based on the visual information from the Q-Former and any text prompt you supply.

These components work together to allow the model to generate text that describes images or answers questions about them.
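As a minimal sketch of how the two tasks share one code path: captioning passes only the image to the processor, while visual question answering also passes a text prompt. The helper name `describe_or_answer` and the `max_new_tokens` default are illustrative choices, not part of the original walkthrough, and `model` and `processor` are assumed to be an already loaded BLIP-2 model and its processor.

```python
def describe_or_answer(model, processor, image, question=None, max_new_tokens=30):
    """Caption `image`, or answer `question` about it if one is given.

    Hypothetical helper: `model` and `processor` are assumed to be a loaded
    Blip2ForConditionalGeneration and its processor.
    """
    if question is None:
        # Captioning: only the image is passed, with no text prompt.
        inputs = processor(images=image, return_tensors="pt")
    else:
        # Visual question answering: the question becomes the text prompt.
        inputs = processor(images=image, text=question, return_tensors="pt")

    # Move the tensors to the model's device; floating-point tensors are
    # also cast to the model's dtype (relevant for half precision).
    inputs = inputs.to(model.device, model.dtype)

    out = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return processor.decode(out[0], skip_special_tokens=True).strip()
```

Calling `describe_or_answer(model, processor, raw_image)` would request a caption, while adding `question="how many dogs are in the picture?"` would request an answer instead.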

Step-by-Step Guide to Run BLIP-2

Let’s get you started with using BLIP-2! You can run the model on either CPU or GPU. Here’s how:

Running the Model on CPU

```python
import requests
from PIL import Image
from transformers import Blip2Processor, Blip2ForConditionalGeneration

# Load the BLIP-2 processor and model (weights are downloaded on first use).
processor = Blip2Processor.from_pretrained("Salesforce/blip2-flan-t5-xxl")
model = Blip2ForConditionalGeneration.from_pretrained("Salesforce/blip2-flan-t5-xxl")

# Fetch the demo image and convert it to RGB.
img_url = "https://storage.googleapis.com/sfr-vision-language-research/BLIP/demo.jpg"
raw_image = Image.open(requests.get(img_url, stream=True).raw).convert("RGB")

# Prepare the image and the question as model inputs.
question = "how many dogs are in the picture?"
inputs = processor(raw_image, question, return_tensors="pt")

# Generate the answer and decode it to text.
out = model.generate(**inputs)
print(processor.decode(out[0], skip_special_tokens=True))
```

Running the Model on GPU

For better performance, you might want to run it on a GPU. The three configurations below trade numerical precision for lower memory use:
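To get a rough sense of why precision matters for a model of this size, the sketch below estimates the memory needed for the weights alone at each precision. It assumes roughly 12 billion parameters for the whole checkpoint, which is an approximation rather than a measured figure, and it ignores activations and framework overhead. The function name `weight_memory_gib` is illustrative.

```python
# Bytes used to store one parameter at each precision.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}

# Assumption: about 12 billion parameters in total (approximate).
ASSUMED_PARAMS = 12e9


def weight_memory_gib(num_params, precision):
    """Return the approximate size of the weights in GiB."""
    return num_params * BYTES_PER_PARAM[precision] / 1024**3


for precision in BYTES_PER_PARAM:
    gib = weight_memory_gib(ASSUMED_PARAMS, precision)
    print(f"{precision}: ~{gib:.0f} GiB for weights alone")
```

Under that assumption the estimate comes to about 45 GiB in full precision, 22 GiB in float16, and 11 GiB in int8. Real usage will be higher, and 8-bit loading typically keeps some layers in higher precision, so treat these numbers as a lower bound.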

Full Precision

```python
# pip install accelerate
import requests
from PIL import Image
from transformers import Blip2Processor, Blip2ForConditionalGeneration

# device_map="auto" lets accelerate place the weights on the available GPU(s).
processor = Blip2Processor.from_pretrained("Salesforce/blip2-flan-t5-xxl")
model = Blip2ForConditionalGeneration.from_pretrained("Salesforce/blip2-flan-t5-xxl", device_map="auto")

# Fetch the demo image and convert it to RGB.
img_url = "https://storage.googleapis.com/sfr-vision-language-research/BLIP/demo.jpg"
raw_image = Image.open(requests.get(img_url, stream=True).raw).convert("RGB")

# Prepare the inputs and move them to the GPU.
question = "how many dogs are in the picture?"
inputs = processor(raw_image, question, return_tensors="pt").to("cuda")

# Generate the answer and decode it to text.
out = model.generate(**inputs)
print(processor.decode(out[0], skip_special_tokens=True))
```

Half Precision (float16)

```python
# pip install accelerate
import torch
import requests
from PIL import Image
from transformers import Blip2Processor, Blip2ForConditionalGeneration

# Load the weights in float16 to roughly halve memory use.
processor = Blip2Processor.from_pretrained("Salesforce/blip2-flan-t5-xxl")
model = Blip2ForConditionalGeneration.from_pretrained("Salesforce/blip2-flan-t5-xxl", torch_dtype=torch.float16, device_map="auto")

# Fetch the demo image and convert it to RGB.
img_url = "https://storage.googleapis.com/sfr-vision-language-research/BLIP/demo.jpg"
raw_image = Image.open(requests.get(img_url, stream=True).raw).convert("RGB")

# Move the inputs to the GPU and cast floating-point tensors to float16.
question = "how many dogs are in the picture?"
inputs = processor(raw_image, question, return_tensors="pt").to("cuda", torch.float16)

# Generate the answer and decode it to text.
out = model.generate(**inputs)
print(processor.decode(out[0], skip_special_tokens=True))
```

8-bit Precision (int8)

```python
# pip install accelerate bitsandbytes
import torch
import requests
from PIL import Image
from transformers import Blip2Processor, Blip2ForConditionalGeneration

# Quantize the weights to 8-bit with bitsandbytes while loading.
# Newer transformers releases prefer quantization_config=BitsAndBytesConfig(load_in_8bit=True).
processor = Blip2Processor.from_pretrained("Salesforce/blip2-flan-t5-xxl")
model = Blip2ForConditionalGeneration.from_pretrained("Salesforce/blip2-flan-t5-xxl", load_in_8bit=True, device_map="auto")

# Fetch the demo image and convert it to RGB.
img_url = "https://storage.googleapis.com/sfr-vision-language-research/BLIP/demo.jpg"
raw_image = Image.open(requests.get(img_url, stream=True).raw).convert("RGB")

# Move the inputs to the GPU and cast floating-point tensors to float16.
question = "how many dogs are in the picture?"
inputs = processor(raw_image, question, return_tensors="pt").to("cuda", torch.float16)

# Generate the answer and decode it to text.
out = model.generate(**inputs)
print(processor.decode(out[0], skip_special_tokens=True))
```

Troubleshooting Tips

While you’re enjoying your journey with the BLIP-2 model, you might run into a few hiccups. Here are some troubleshooting ideas:

- Model Not Found: Ensure that you have the correct model name and that you are connected to the internet.

- CUDA Errors: Make sure you have the correct NVIDIA drivers and a CUDA-enabled build of PyTorch installed for GPU support.

- Image Not Loading: Double-check the URL to see if it is accessible, or try using a local image file.

- Installation Issues: Ensure you have installed the necessary libraries, such as transformers and PIL (Pillow), correctly.
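Several of the issues above can be narrowed down with a quick environment check. The sketch below uses only the standard library to report which packages are installed, then asks PyTorch about CUDA if it is present. The helper names and the package lists are illustrative assumptions based on the imports used in this guide.

```python
from importlib import metadata

# Distribution names as published on PyPI (PIL is distributed as "Pillow").
REQUIRED = ["transformers", "Pillow", "requests", "torch"]
# Only needed for the GPU configurations in this guide.
OPTIONAL = ["accelerate", "bitsandbytes"]


def installed_version(dist_name):
    """Return the installed version string, or None if the package is missing."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


def check_environment(required=REQUIRED, optional=OPTIONAL):
    """Return (report, missing): versions per package and missing required ones."""
    report = {name: installed_version(name) for name in [*required, *optional]}
    missing = [name for name in required if report[name] is None]
    return report, missing


if __name__ == "__main__":
    report, missing = check_environment()
    for name, version in report.items():
        print(f"{name}: {version or 'not installed'}")
    if missing:
        print("Missing required packages:", ", ".join(missing))
    if report.get("torch") is not None:
        import torch  # imported lazily so the check runs without PyTorch too
        print("CUDA available:", torch.cuda.is_available())
```

If a required package is reported as missing, installing it with `pip` is usually the first thing to try. If CUDA is reported as unavailable, the drivers or the PyTorch build are the likely culprits.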

For more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.

Ethical Considerations

As with any powerful tool, it is essential to consider the ethical implications. BLIP-2 inherits biases from the datasets it was trained on, which can lead it to generate inappropriate content. Always assess the model’s safety and fairness in your specific application context before deployment.
