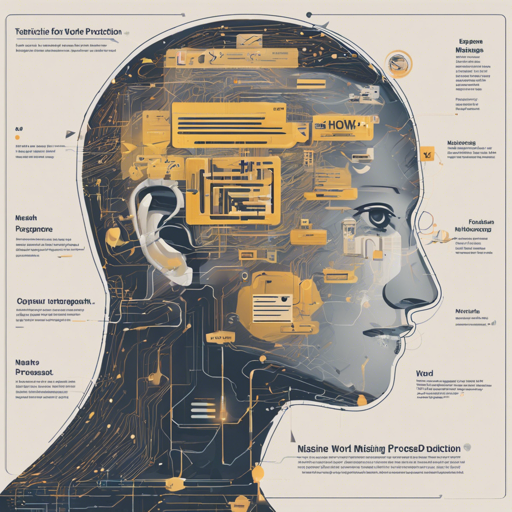

FongBERT is a BERT model trained specifically on sentences in the Fon language, which makes transfer learning on downstream Fon tasks possible. This article walks through using FongBERT to predict missing words in sentences, with practical examples.

Getting Started with FongBERT

To use FongBERT, install the Hugging Face Transformers library if you haven’t done so already:

pip install transformers

Then import the necessary components from the library. As an analogy, think of FongBERT as a wise librarian: the tokenizer organizes the books (your sentences), and the model is the librarian who fills in the gaps so the context is easier to understand.
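Before loading a model, it can help to confirm what is actually installed. The sketch below is one possible check, not part of the FongBERT instructions: the helper name `check_packages` is hypothetical, and the inclusion of `torch` is an assumption, since Transformers needs a deep learning backend such as PyTorch to run a model and the article only installs `transformers`.

```python
import importlib.util
from importlib import metadata


def check_packages(names=("transformers", "torch")):
    """Map each package name to its installed version, or None if it is missing."""
    report = {}
    for name in names:
        # find_spec returns None when a top-level package cannot be imported
        if importlib.util.find_spec(name) is None:
            report[name] = None
            continue
        try:
            report[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            # Importable, but no distribution metadata (e.g. a stdlib module)
            report[name] = "unknown"
    return report


for package, version in check_packages().items():
    print(f"{package}: {version or 'not installed'}")
```

If a package is reported as not installed, installing it with pip before continuing should avoid import errors later on.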

Using FongBERT in Your Code

Here’s how to set up FongBERT and use it for masked word prediction:

from transformers import AutoTokenizer, AutoModelForMaskedLM

from transformers import pipeline

tokenizer = AutoTokenizer.from_pretrained("Gilles/FongBERT")

model = AutoModelForMaskedLM.from_pretrained("Gilles/FongBERT")

fill = pipeline("fill-mask", model=model, tokenizer=tokenizer)

In terms of the analogy above, you hand the librarian (the model) your sentences and then ask it to fill in the missing words. The pipeline acts like the librarian’s assistant, handling tokenization and prediction for each request.
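The mask placeholder a model expects depends on its tokenizer, so hard-coding it can cause errors if the tokenizer uses a different string. A minimal sketch of one way to avoid this is to read the token from `fill.tokenizer.mask_token`. The helper names `mask_word` and `predict_masked` are hypothetical, and the sketch assumes words are separated by spaces.

```python
def mask_word(sentence, position, mask_token):
    """Replace the whitespace-separated word at `position` (0-based) with `mask_token`."""
    words = sentence.split()
    if not 0 <= position < len(words):
        raise IndexError(
            f"position {position} is outside a sentence of {len(words)} words"
        )
    words[position] = mask_token
    return " ".join(words)


def predict_masked(fill, sentence, position):
    """Mask one word and run the pipeline with the tokenizer's own mask token."""
    masked = mask_word(sentence, position, fill.tokenizer.mask_token)
    return fill(masked)


# With the `fill` pipeline created above, a call might look like:
#   predict_masked(fill, "un yi wan nu we ɖesu", 2)
```

Here position 2 selects the third word of the sentence, so the pipeline is asked to predict that word from its context.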

Examples of Missing Word Prediction

Let’s take a look at some examples of how FongBERT handles missing word prediction.
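For a sentence with a single masked position, the fill-mask pipeline returns a list of dictionaries with the keys `score`, `token`, `token_str`, and `sequence`. The sketch below shows one way to reduce that list to the top candidates. The helper name `summarize_predictions` is hypothetical, and the sample data is placeholder input made up to show the shape of the result, not real FongBERT output.

```python
def summarize_predictions(predictions, top_n=3):
    """Return (token, score) pairs for the highest-scoring candidates."""
    ranked = sorted(predictions, key=lambda p: p["score"], reverse=True)
    return [(p["token_str"].strip(), round(p["score"], 3)) for p in ranked[:top_n]]


# Placeholder data in the shape the fill-mask pipeline returns.
# These values are invented for illustration and are NOT real model output.
example_predictions = [
    {"score": 0.10, "token": 2, "token_str": "candidate_b", "sequence": "..."},
    {"score": 0.75, "token": 1, "token_str": "candidate_a", "sequence": "..."},
]

for token, score in summarize_predictions(example_predictions):
    print(f"{token}\t{score}")
```

In practice, the list returned by a `fill(...)` call would be passed in place of `example_predictions`.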

Example 1

Original Sentence: un tuùn ɖɔ un jló na wazɔ̌ nú we .

Translation: I know I have to work for you.

Masked Sentence: un tuùn ɖɔ un jló na wazɔ̌ [MASK] we .

Translation: I know I have to work [MASK] you.

fill("un tuùn ɖɔ un jló na wazɔ̌ [MASK] we")

Example 2

Original Sentence: un yi wan nu we ɖesu .

Translation: I love you so much.

Masked Sentence: un yi [MASK] nu we ɖesu .

Translation: I [MASK] you so much.

fill("un yi [MASK] nu we ɖesu")

Example 3

Original Sentence: un yì cí sunnu xɔ́ntɔn ce Tony gɔ́n nú táan ɖé .

Translation: I went to my boyfriend for a while.

Masked Sentence: un yì cí sunnu xɔ́ntɔn ce Tony gɔ́n nú [MASK] ɖé .

Translation: I went to my boyfriend for a [MASK].

fill("un yì cí sunnu xɔ́ntɔn ce Tony gɔ́n nú [MASK] ɖé")

Troubleshooting Tips

If you encounter issues during installation or execution, consider the following troubleshooting ideas:

- Ensure you have the latest version of the Transformers library.

- Validate your Python environment; some dependencies might require specific versions.

- Check for typos in your model name (“Gilles/FongBERT”).

- If you get an error relating to the fill-mask pipeline, confirm your internet connection, since the first run needs to download the model weights.
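Several of these tips concern failures while loading the model. As a rough sketch, loading can be wrapped so that a failure produces a readable hint instead of a long traceback. This assumes loading problems surface as `OSError`, which is how Transformers typically reports an unknown model name or a failed download. The helper name `load_or_explain` is hypothetical.

```python
def load_or_explain(loader, model_id):
    """Call `loader(model_id)`; on failure return (None, hint) instead of raising."""
    try:
        return loader(model_id), None
    except OSError as err:
        hint = (
            f"Could not load '{model_id}'. Check the spelling of the model name "
            f"and your internet connection. Original error: {err}"
        )
        return None, hint


# Intended use with the imports shown earlier (downloads weights on first run):
#   model, problem = load_or_explain(AutoModelForMaskedLM.from_pretrained, "<model name>")
#   if problem:
#       print(problem)
```

Passing the loader as an argument keeps the helper independent of any particular model class, so the same wrapper can be reused for the tokenizer.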

