If you’ve ever wondered how to animate facial expressions from a single image, you’re in for a treat! This guide will walk you through the process of using GANimation, a novel framework that uses Action Units (AU) to create anatomically-aware facial animations. This technology can transform static images into dynamic portraits, making it perfect for a range of applications—from gaming to film production. Let’s dive into the process step-by-step.

Understanding GANimation

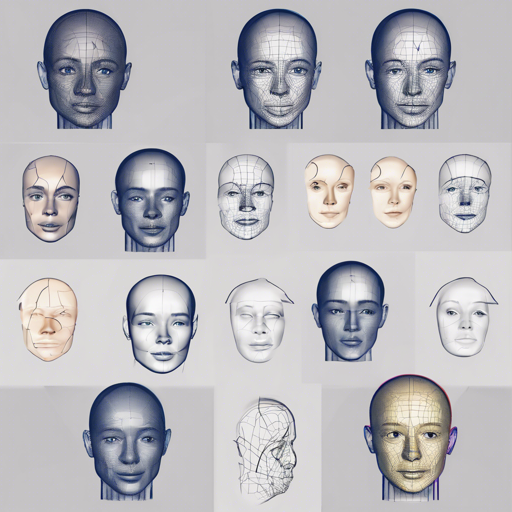

Think of GANimation as a skilled artist who can paint facial expressions using just a single snapshot. This artist has a complete understanding of the underlying structure of facial anatomy (this is where the Action Units come in). Instead of guessing how to animate expressions, GANimation uses these anatomical markers to ensure that each animated expression is both realistic and consistent with human biology. With this system, you can control how much of each facial expression you want to highlight, allowing you to create nuanced animations.
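To make the idea of "how much of each expression" concrete, here is a minimal sketch of an expression encoded as a vector of Action Unit intensities and blended between two states. It is not GANimation's network or API: the AU selection, the values, and the `blend_expressions` helper are illustrative assumptions that only show the kind of continuous control described above.

```python
# Hypothetical AU activation vectors: one intensity per Action Unit, scaled to [0, 1].
# The AU names and values are illustrative and not taken from the GANimation code.
AU_NAMES = ["AU01", "AU04", "AU06", "AU12"]


def blend_expressions(source, target, alpha):
    """Linearly interpolate between two AU vectors.

    alpha=0 returns the source expression, alpha=1 the target expression.
    """
    if len(source) != len(target):
        raise ValueError("AU vectors must have the same length")
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    return [(1.0 - alpha) * s + alpha * t for s, t in zip(source, target)]


neutral = [0.0, 0.0, 0.0, 0.0]
smile = [0.0, 0.0, 0.8, 1.0]  # cheek raiser (AU06) plus lip corner puller (AU12)

# Print three frames: neutral, half smile, full smile.
for alpha in (0.0, 0.5, 1.0):
    frame = blend_expressions(neutral, smile, alpha)
    print(alpha, dict(zip(AU_NAMES, frame)))
```

In a real pipeline, each blended vector would be passed to the trained generator together with the input image to render one frame of the animation.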

Prerequisites

Before getting started, ensure that you have the following set up:

- Install PyTorch (version 0.3.1)

- Install torchvision and the other PyTorch dependencies

- Run the following command to install additional requirements:

pip install -r requirements.txt
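Because the guide pins an old PyTorch release, it can help to confirm what is installed before going further. The following is a small sketch of such a check. The helper names are hypothetical, and the only library attribute it relies on is `torch.__version__`.

```python
REQUIRED_TORCH = "0.3.1"  # version named in the prerequisites above


def release_tuple(version):
    """Return the leading numeric components, e.g. '0.3.1.post2' -> (0, 3, 1)."""
    parts = []
    for piece in str(version).split("+")[0].split("."):
        if not piece.isdigit():
            break
        parts.append(int(piece))
    return tuple(parts)


def version_matches(installed, required=REQUIRED_TORCH):
    """True when both versions share the same numeric release components."""
    return release_tuple(installed) == release_tuple(required)


def installed_torch_version():
    """Return torch.__version__ as a string, or None if PyTorch cannot be imported."""
    try:
        import torch
    except ImportError:
        return None
    return str(torch.__version__)


found = installed_torch_version()
if found is None:
    print("PyTorch is not installed in this environment")
elif version_matches(found):
    print("PyTorch %s matches the required %s" % (found, REQUIRED_TORCH))
else:
    print("PyTorch %s found, but this guide expects %s" % (found, REQUIRED_TORCH))
```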

Data Preparation

GANimation expects a specific dataset directory layout. Make sure your dataset directory contains the following entries:

- imgs: A folder containing all your input images.

- aus_openface.pkl: A pickled dictionary that holds the Action Units for each image.

- train_ids.csv: A file listing the images you will use for training.

- test_ids.csv: A file listing the images you will use for testing.
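A quick way to catch a missing entry before training is to check the layout programmatically. The sketch below assumes all four entries listed above sit directly under one dataset root. The `missing_dataset_entries` helper and the `path/to/dataset` placeholder are hypothetical.

```python
import os

# Entries listed above, each paired with the check for its expected kind.
EXPECTED_ENTRIES = {
    "imgs": os.path.isdir,
    "aus_openface.pkl": os.path.isfile,
    "train_ids.csv": os.path.isfile,
    "test_ids.csv": os.path.isfile,
}


def missing_dataset_entries(root):
    """Return the expected names that are absent, or of the wrong kind, under root."""
    return sorted(
        name
        for name, exists in EXPECTED_ENTRIES.items()
        if not exists(os.path.join(root, name))
    )


dataset_root = "path/to/dataset"  # placeholder: point this at your own dataset directory
missing = missing_dataset_entries(dataset_root)
if missing:
    print("Missing from %s: %s" % (dataset_root, ", ".join(missing)))
else:
    print("Dataset layout looks complete")
```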

To create the required aus_openface.pkl, you’ll need to run the OpenFace tool to extract Action Units. Follow these steps:

- Download and install OpenFace.

- Extract the Action Units from each image, storing each image's results in a CSV file with the same name as the image.

- Next, run the following command to prepare your data:

python data/prepare_au_annotations.py
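For orientation, here is a rough sketch of what merging per-image OpenFace CSVs into a single pickled dictionary can look like. It is not the repository's script, which remains the authoritative way to build aus_openface.pkl. The sketch assumes Python 3, one data row per CSV, AU intensity columns whose headers end in `_r`, and a dictionary keyed by image name. All function names are hypothetical.

```python
import csv
import glob
import os
import pickle


def read_au_intensities(csv_path):
    """Return the AU intensity values (columns named AU*_r) from the first data row."""
    with open(csv_path, newline="") as handle:
        reader = csv.reader(handle)
        # Header names may carry leading spaces, so strip them before matching.
        header = [name.strip() for name in next(reader)]
        row = next(reader)
    return [
        float(value)
        for name, value in zip(header, row)
        if name.startswith("AU") and name.endswith("_r")
    ]


def build_au_dictionary(csv_dir):
    """Map each CSV's base name (the image name) to its list of AU intensities."""
    aus = {}
    for csv_path in sorted(glob.glob(os.path.join(csv_dir, "*.csv"))):
        image_name = os.path.splitext(os.path.basename(csv_path))[0]
        aus[image_name] = read_au_intensities(csv_path)
    return aus


def save_au_dictionary(aus, out_path):
    """Pickle the dictionary to out_path."""
    with open(out_path, "wb") as handle:
        pickle.dump(aus, handle)


# Example usage (paths are placeholders):
# save_au_dictionary(build_au_dictionary("path/to/openface_csvs"), "aus_sketch.pkl")
```

The value layout the training code expects may differ from this sketch, so use it to inspect your OpenFace output rather than as a replacement for the provided script.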

Running GANimation

Once your data is ready, you can begin the training and testing processes.

- To train the model, execute:

bash launch/run_train.sh

- To test it, use the following command, replacing path/to/img with the path to your image:

python test --input_path path/to/img
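The test command takes one image at a time. To animate a whole folder, a small wrapper can build one command per image. This is a sketch under assumptions: the helper names and the extension list are hypothetical, and the only flag used is the `--input_path` shown above.

```python
import os
import subprocess

IMAGE_EXTENSIONS = (".jpg", ".jpeg", ".png")  # assumed set of input formats


def build_test_commands(img_dir):
    """Return one argument list per image in img_dir, mirroring the single-image test command."""
    commands = []
    for name in sorted(os.listdir(img_dir)):
        if name.lower().endswith(IMAGE_EXTENSIONS):
            image_path = os.path.join(img_dir, name)
            commands.append(["python", "test", "--input_path", image_path])
    return commands


def run_all(commands):
    """Run each command in turn, stopping at the first failure."""
    for command in commands:
        subprocess.check_call(command)


# Example usage, run from the repository root (the folder path is a placeholder):
# run_all(build_test_commands("path/to/imgs"))
```

Building the commands separately from running them makes it easy to print and inspect the list before launching anything.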

Troubleshooting

If you run into any issues, here are some common troubleshooting steps:

- Ensure you are using the PyTorch version listed in the prerequisites (0.3.1), along with a Python version that release supports.

- Check that your directory structure matches the layout described under Data Preparation; a single missing file can interrupt the process.

- Make sure all dependencies are correctly installed per the requirements.txt file.

Conclusion

GANimation opens doors to innovative applications in facial animation, providing an exciting intersection of AI and creativity. Remember to handle this technology responsibly and ethically, as noted in the official implementation guidelines.