If you want to use the GLM-4-9B-Chat model in your projects, you’re in the right place! This guide introduces the model, developed by Zhipu AI, summarizes its benchmark results, and walks through running it with the Transformers and vLLM backends. It closes with fixes for a few common problems you may run into along the way.

Model Overview

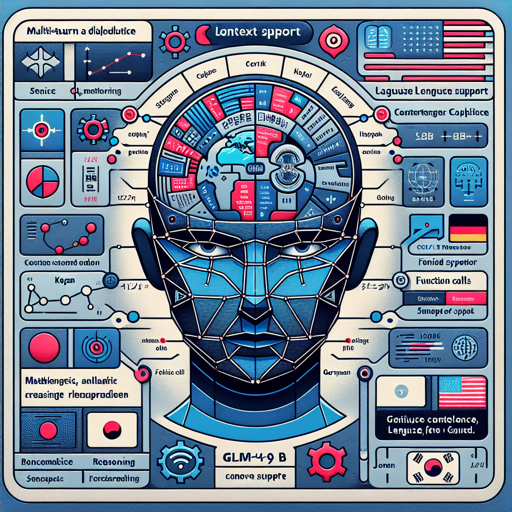

The GLM-4-9B model is the latest open-source release in the GLM-4 series. It performs strongly on benchmarks covering semantics, mathematics, reasoning, coding, and knowledge. The chat-tuned GLM-4-9B-Chat version adds multi-turn dialogue, web browsing, code execution, and custom tool calls (Function Call). It supports long-text reasoning with a context length of up to 128K tokens and handles 26 languages, including Japanese, Korean, and German.
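Multi-turn dialogue works by resending the whole conversation on every turn. The code examples later in this guide pass a list of `{"role": ..., "content": ...}` dicts to `tokenizer.apply_chat_template`; the minimal sketch below shows one way to keep such a list across turns. The `Conversation` class, its methods, and the `echo_backend` stand-in are hypothetical helpers for illustration, not part of GLM-4 or any library. In real use, `generate_reply` would wrap the template-and-generate code from the backend sections.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Message = Dict[str, str]


@dataclass
class Conversation:
    """Hypothetical helper that accumulates chat history for multi-turn use."""

    messages: List[Message] = field(default_factory=list)

    def add_user(self, content: str) -> None:
        self.messages.append({"role": "user", "content": content})

    def add_assistant(self, content: str) -> None:
        self.messages.append({"role": "assistant", "content": content})

    def ask(self, content: str, generate_reply: Callable[[List[Message]], str]) -> str:
        """Append the user turn, send the full history to the backend, store the reply."""
        self.add_user(content)
        reply = generate_reply(self.messages)
        self.add_assistant(reply)
        return reply


if __name__ == "__main__":
    # Stand-in backend so the sketch runs without downloading the model.
    # A real backend would call apply_chat_template(history, ...) and generate.
    def echo_backend(history: List[Message]) -> str:
        return f"(reply to turn {len(history) // 2 + 1})"

    conv = Conversation()
    conv.ask("你好", echo_backend)  # "Hello"
    conv.ask("Summarize our chat so far.", echo_backend)
    for m in conv.messages:
        print(m["role"], "->", m["content"])
```

Because the backend always receives the complete history, the model sees earlier turns as context, which is also why long conversations eventually run into the context-length limit.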

Model Evaluation Results

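GLM-4-9B-Chat has been evaluated on standard benchmarks alongside Llama-3-8B-Instruct and its predecessor, ChatGLM3-6B. Higher is better in every column (NCB is NaturalCodeBench). GLM-4-9B-Chat scores highest on every benchmark except GSM8K, where it ties Llama-3-8B-Instruct at 79.6: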

| Model               | AlignBench-v2 | MT-Bench | IFEval | MMLU | C-Eval | GSM8K | MATH | HumanEval | NCB  |
|:--------------------|:-------------:|:--------:|:------:|:----:|:------:|:-----:|:----:|:---------:|:----:|
| Llama-3-8B-Instruct |     5.12      |   8.00   | 68.58  | 68.4 |  51.3  | 79.6  | 30.0 |   62.2    | 24.7 |
| ChatGLM3-6B         |     3.97      |   5.50   |  28.1  | 66.4 |  69.0  | 72.3  | 25.7 |   58.5    | 11.3 |
| GLM-4-9B-Chat       |     6.61      |   8.35   |  69.0  | 72.4 |  75.6  | 79.6  | 50.6 |   71.8    | 32.2 |

Implementing Long Text Processing

Long-context support means GLM-4-9B-Chat can read and reason over a very long input in a single prompt: up to 128K tokens, or about 1 million tokens with the separate GLM-4-9B-Chat-1M variant. Think of the context window as the stack of documents you hand the model with your request, not as its long-term memory: the model answers from the text you supply, on top of what it learned during training. If you are looking for a specific fact buried in a long report, include the full relevant text in the prompt and phrase your question precisely enough to point the model at the passage you need.
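Before sending a very long document, it helps to check that the prompt plus the generation budget fits in the context window, and to trim the input if it does not. The sketch below is a hypothetical helper, not a GLM-4 API. It assumes you already have the prompt as a list of token IDs, and it uses 131072 tokens (128K, the same value as `max_model_len` in the vLLM example) as the default window. When trimming is needed it keeps the beginning and end of the document and drops the middle, which is only one of several reasonable strategies.

```python
from typing import List

# 128K tokens, matching max_model_len in the vLLM example of this guide.
DEFAULT_CONTEXT_LEN = 128 * 1024


def fits_in_context(num_prompt_tokens: int, max_new_tokens: int,
                    context_len: int = DEFAULT_CONTEXT_LEN) -> bool:
    """True if the prompt plus the tokens we plan to generate fit in the window."""
    return num_prompt_tokens + max_new_tokens <= context_len


def trim_middle(token_ids: List[int], max_new_tokens: int,
                context_len: int = DEFAULT_CONTEXT_LEN) -> List[int]:
    """Drop tokens from the middle so that prompt + generation fits the window.

    Keeps the head (often titles or instructions) and the tail (often the
    actual question). This is an illustrative strategy, not a GLM-4 feature.
    """
    budget = context_len - max_new_tokens
    if budget <= 0:
        raise ValueError("max_new_tokens leaves no room for the prompt")
    if len(token_ids) <= budget:
        return list(token_ids)
    head = budget // 2
    tail = budget - head  # always >= 1 because budget >= 1
    return list(token_ids[:head]) + list(token_ids[-tail:])


if __name__ == "__main__":
    ids = list(range(10))
    # Window of 10 with 4 tokens reserved for the reply leaves 6 prompt tokens.
    print(trim_middle(ids, max_new_tokens=4, context_len=10))
    print(fits_in_context(130000, 1024))
```

With a real tokenizer, you could obtain the IDs with something like `tokenizer.encode(document, add_special_tokens=False)` and turn the trimmed IDs back into text with `tokenizer.decode` before building the chat prompt.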

Running the Model

For practical implementation, follow these steps to use the model with either the Transformers or vLLM backend.

Using the Transformers Backend

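```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

device = "cuda"

# trust_remote_code=True lets Transformers load the custom GLM-4 code from the Hub repo.
tokenizer = AutoTokenizer.from_pretrained("THUDM/glm-4-9b-chat", trust_remote_code=True)

query = "你好"  # "Hello"

# Format the message with the chat template and tokenize it in one step.
inputs = tokenizer.apply_chat_template(
    [{"role": "user", "content": query}],
    add_generation_prompt=True,  # append the marker that starts the assistant turn
    tokenize=True,
    return_tensors="pt",
    return_dict=True,
)
inputs = inputs.to(device)

model = AutoModelForCausalLM.from_pretrained(
    "THUDM/glm-4-9b-chat",
    torch_dtype=torch.bfloat16,
    low_cpu_mem_usage=True,
    trust_remote_code=True,
).to(device).eval()

# max_length counts prompt + generated tokens.
# do_sample=True with top_k=1 always picks the most likely token (effectively greedy).
gen_kwargs = {"max_length": 2500, "do_sample": True, "top_k": 1}

with torch.no_grad():
    outputs = model.generate(**inputs, **gen_kwargs)
    # Drop the prompt tokens so only the newly generated reply is decoded.
    outputs = outputs[:, inputs["input_ids"].shape[1]:]
    print(tokenizer.decode(outputs[0], skip_special_tokens=True))
```

Using the vLLM Backend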

```python
from transformers import AutoTokenizer
from vllm import LLM, SamplingParams

# 128K context on one GPU. On OOM, lower max_model_len or raise tp_size.
max_model_len, tp_size = 131072, 1
model_name = "THUDM/glm-4-9b-chat"
prompt = [{"role": "user", "content": "你好"}]  # "Hello"

tokenizer = AutoTokenizer.from_pretrained(model_name, trust_remote_code=True)
llm = LLM(
    model=model_name,
    tensor_parallel_size=tp_size,  # number of GPUs to shard the model across
    max_model_len=max_model_len,
    trust_remote_code=True,
    enforce_eager=True,  # run in eager mode instead of capturing CUDA graphs
)

# GLM-4 special-token IDs at which generation stops.
stop_token_ids = [151329, 151336, 151338]
sampling_params = SamplingParams(temperature=0.95, max_tokens=1024, stop_token_ids=stop_token_ids)

# vLLM takes the prompt as text, so render the chat template without tokenizing.
inputs = tokenizer.apply_chat_template(prompt, tokenize=False, add_generation_prompt=True)
outputs = llm.generate(prompts=inputs, sampling_params=sampling_params)
print(outputs[0].outputs[0].text)
```

Troubleshooting

While running the model, you may encounter some issues. Here are a few common problems and solutions:

- Out of Memory (OOM) Errors: With vLLM, reduce `max_model_len` or increase `tensor_parallel_size` to spread the model across more GPUs. With Transformers, use shorter inputs or a smaller `max_length`.

- Dependencies Not Installed: Install the package versions listed in the requirements file of the official GLM-4 repository. Missing or mismatched packages (for example, an incompatible `transformers` version) can cause runtime errors.
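To see why lowering `max_model_len` helps with OOM errors, note that the key/value (KV) cache reserved per sequence grows linearly with context length. The sketch below applies the standard estimate: 2 (one key and one value tensor) × layers × KV heads × head dimension × bytes per element × tokens. The architecture numbers in the usage lines (40 layers, 2 KV heads, head dimension 128, bfloat16) are assumed GLM-4-9B-style values; verify them against the model's `config.json` before relying on the result. Real engines such as vLLM also allocate memory for weights, activations, and their own bookkeeping, so treat this as a lower bound for the cache alone.

```python
def kv_cache_bytes(num_tokens: int, num_layers: int, num_kv_heads: int,
                   head_dim: int, bytes_per_elem: int = 2) -> int:
    """Approximate KV-cache size in bytes for a single sequence.

    The factor 2 covers one key tensor and one value tensor per layer.
    bytes_per_elem=2 corresponds to bfloat16 / float16.
    """
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_elem * num_tokens


GIB = 1024 ** 3

if __name__ == "__main__":
    # ASSUMED GLM-4-9B-style values; check num_layers, multi_query_group_num
    # and kv_channels in the model's config.json.
    layers, kv_heads, head_dim = 40, 2, 128
    for max_model_len in (8192, 32768, 131072):
        size = kv_cache_bytes(max_model_len, layers, kv_heads, head_dim)
        print(f"max_model_len={max_model_len:>6}: ~{size / GIB:.2f} GiB of KV cache per sequence")
```

Because the estimate scales linearly, halving `max_model_len` halves the cache reservation, while raising `tensor_parallel_size` splits the weights and cache across more GPUs.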


Conclusion

GLM-4-9B-Chat is a capable open model for chat applications. Its 128K-token context window and support for 26 languages make it a good fit for tasks such as question answering over long documents and multilingual assistants. Try the examples above and see how it performs on your own workloads.

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.