Hand-object pose estimation has become a vibrant area of research within computer vision, supporting applications that need to understand how people handle and manipulate objects. The HOPE-Net model provides a powerful framework that uses graph-based architectures to estimate the poses of hands and the objects they manipulate. In this article, we’ll walk through how to set up and run HOPE-Net, step by step.

Understanding HOPE-Net Architecture

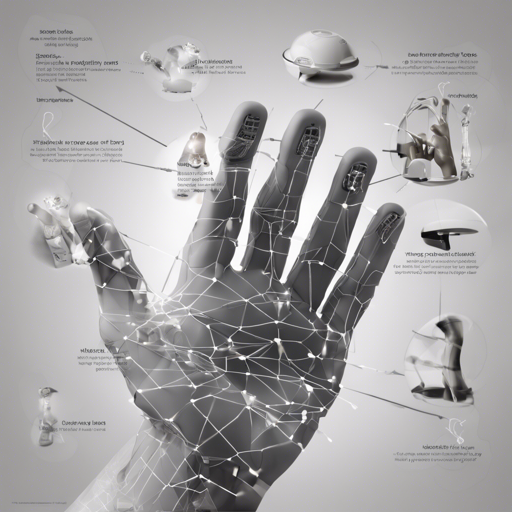

The architecture of HOPE-Net is designed to leverage intricate relationships between hand and object poses. Imagine constructing a treehouse (the model) using strong branches (graph convolution) to support the main structure (hand and object poses). Here’s a breakdown of how HOPE-Net operates:

- Image Encoding: The model starts with ResNet, serving as an image encoder to predict initial 2D coordinates for joints and object vertices.

- Graph Features: The predicted coordinates are combined with image features, forming the input graph for a three-layered graph convolution.

- 2D to 3D Transformation: The refined 2D coordinates are then lifted to 3D by an Adaptive Graph U-Net, whose graph pooling and unpooling layers let it reason over the hand-object graph at several scales.
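To make the "graph features" step more concrete, here is a toy, pure-Python sketch of a single graph-convolution layer over a few keypoints. It uses the common symmetric-normalized propagation rule H' = ReLU(D^-1/2 (A + I) D^-1/2 H W), which is an assumption for illustration; HOPE-Net's own layers, adjacency, and feature sizes may differ, and all numbers below are made up.

```python
"""Toy graph-convolution layer over hand/object keypoints (illustrative only).

Assumption: each node feature is a 2D coordinate concatenated with an image
feature, and the layer follows H' = ReLU(D^-1/2 (A + I) D^-1/2 H W).
This is not taken from the HOPE-Net code base.
"""
import math


def normalized_adjacency(adj):
    """Add self-loops, then apply symmetric degree normalization."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]


def matmul(x, y):
    """Plain list-of-lists matrix product."""
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


def graph_conv(adj, features, weights):
    """Aggregate each node with its neighbours, project with W, apply ReLU."""
    aggregated = matmul(normalized_adjacency(adj), features)
    projected = matmul(aggregated, weights)
    return [[max(0.0, v) for v in row] for row in projected]


# Hypothetical 3-node chain, e.g. wrist - knuckle - fingertip.
adj = [[0, 1, 0],
       [1, 0, 1],
       [0, 1, 0]]
# Per node: (x, y) from the image encoder plus one made-up image feature.
features = [[0.1, 0.2, 1.0],
            [0.3, 0.4, 0.5],
            [0.5, 0.6, 0.0]]
# Maps 3 input features to 2 outputs (a refined x, y per node).
weights = [[1.0, 0.0],
           [0.0, 1.0],
           [0.1, -0.1]]

refined = graph_conv(adj, features, weights)
print(refined)
```

Stacking three such layers would mirror the three-layered graph convolution described above; a real implementation would use a tensor library and learned weights rather than hand-written lists.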

Setting Up HOPE-Net

To get started with HOPE-Net, follow these steps:

1. Download Required Datasets

You’ll need to download the datasets used in the paper, including the First-Person Hand Action Dataset (FHAD), which the pretrained-model test in the next step relies on.

After downloading, update the root path in the make_data.py file inside each dataset folder. Then run each make_data.py to generate the .npy files the model expects.
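The data-preparation step can be scripted. The sketch below assumes each dataset lives in its own folder under ./datasets/ with a make_data.py inside, and that each script writes its .npy files into that same folder; the helper name run_make_data is hypothetical. You still need to edit the root path in every make_data.py by hand first.

```python
"""Run every make_data.py found one level below a datasets directory.

Hypothetical helper: assumes the layout datasets/<name>/make_data.py and that
each script writes its .npy output into its own folder.
"""
import subprocess
import sys
from pathlib import Path


def run_make_data(datasets_root):
    """Execute each dataset's make_data.py and list the .npy files present afterwards."""
    results = {}
    for script in sorted(Path(datasets_root).glob("*/make_data.py")):
        folder = script.parent
        # Run from inside the dataset folder with the current interpreter;
        # check=True raises CalledProcessError if a script fails.
        subprocess.run([sys.executable, script.name], cwd=folder, check=True)
        results[folder.name] = sorted(p.name for p in folder.glob("*.npy"))
    return results


if __name__ == "__main__":
    root = sys.argv[1] if len(sys.argv) > 1 else "./datasets"
    for name, files in run_make_data(root).items():
        print(f"{name}: {files or 'no .npy files found'}")
```

Running it once and checking that every folder reports at least one .npy file is a quick way to catch a wrong root path before training or testing.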

2. Test Pretrained Model

Start by making sure you have generated the .npy files for the First-Person Hand Action Dataset. Then download and evaluate the pretrained models as follows:
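If you prefer to drive both evaluations from Python, the sketch below assembles the same flags used by the GraphUNet and HOPE-Net test commands in this step. The helper name build_eval_command and the --run switch are hypothetical, and the checkpoint paths assume the archives extract into ./checkpoints/graphunet/ and ./checkpoints/fhad/.

```python
"""Assemble (and optionally run) the pretrained-model evaluations.

Sketch only: the flags mirror the Graph.py / HOPE.py test commands; the
checkpoint paths are assumptions about where tar extracts the archives.
"""
import shlex
import subprocess
import sys


def build_eval_command(script, model_def, checkpoint,
                       input_file="./datasets/fhad/", batch_size=64, gpu_number=0):
    """Return the argument list for a test-mode run of Graph.py or HOPE.py."""
    return [
        sys.executable, script,
        "--input_file", input_file,
        "--test",
        "--batch_size", str(batch_size),
        "--model_def", model_def,
        "--gpu",
        "--gpu_number", str(gpu_number),
        "--pretrained_model", checkpoint,
    ]


RUNS = [
    ("Graph.py", "GraphUNet", "./checkpoints/graphunet/model-0.pkl"),
    ("HOPE.py", "HopeNet", "./checkpoints/fhad/model-0.pkl"),
]

if __name__ == "__main__":
    # Default is a dry run that only prints the commands; pass --run to execute them.
    execute = "--run" in sys.argv
    for script, model_def, ckpt in RUNS:
        cmd = build_eval_command(script, model_def, ckpt)
        print(shlex.join(cmd))
        if execute:
            subprocess.run(cmd, check=True)
```

Printing the commands first makes it easy to confirm the paths before launching a GPU job.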

For GraphUNet:

```bash
wget http://vision.soic.indiana.edu/wp/wp-content/uploads/graphunet.tar.gz
tar -xvf graphunet.tar.gz
python Graph.py --input_file ./datasets/fhad/ --test --batch_size 64 --model_def GraphUNet --gpu --gpu_number 0 --pretrained_model ./checkpoints/graphunet/model-0.pkl
```

For HOPE-Net:

```bash
wget http://vision.soic.indiana.edu/hopenet_files/checkpoints.tar.gz
tar -xvf checkpoints.tar.gz
python HOPE.py --input_file ./datasets/fhad/ --test --batch_size 64 --model_def HopeNet --gpu --gpu_number 0 --pretrained_model ./checkpoints/fhad/model-0.pkl
```

Troubleshooting Common Issues

While setting up and running HOPE-Net, you may encounter some common issues. Here are troubleshooting tips to keep you on track:

- Error while downloading datasets: Make sure that the URLs are correct and accessible. If you face network issues, try using a different network or checking your firewall settings.

- Missing .npy files: Double-check that you have run the make_data.py files in the corresponding dataset folders correctly.

- Pretrained model not found: Verify the extraction paths after running the tar command, and make sure the files are located where the --pretrained_model path in your command expects them.

- GPU allocation issues: Ensure that CUDA is properly installed and that the GPU number specified in your command is available.

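Several of the checks in the troubleshooting list can be automated before launching a run. The sketch below is a hypothetical pre-flight helper: its default paths are assumptions based on the test commands in this article, and the GPU check only looks for nvidia-smi on PATH, so it cannot prove that CUDA works or that the requested GPU is free.

```python
"""Hypothetical pre-flight check before running Graph.py or HOPE.py."""
import shutil
from pathlib import Path


def preflight(input_dir="./datasets/fhad/",
              checkpoints=("./checkpoints/graphunet/model-0.pkl",
                           "./checkpoints/fhad/model-0.pkl")):
    """Return a list of problems found; an empty list means every check passed."""
    problems = []
    data = Path(input_dir)
    if not data.is_dir():
        problems.append(f"dataset folder missing: {data}")
    elif not any(data.glob("*.npy")):
        problems.append(f"no .npy files in {data}; run make_data.py first")
    for ckpt in map(Path, checkpoints):
        if not ckpt.is_file():
            problems.append(f"checkpoint not found: {ckpt}; check where tar extracted it")
    # Heuristic only: the NVIDIA driver tool being on PATH is a weak proxy for CUDA.
    if shutil.which("nvidia-smi") is None:
        problems.append("nvidia-smi not on PATH; the NVIDIA driver/CUDA may be missing")
    return problems


if __name__ == "__main__":
    issues = preflight()
    print("\n".join(issues) if issues else "All checks passed.")
```

Adjust the arguments if you extracted the datasets or checkpoints somewhere else.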

Conclusion

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations. HOPE-Net exemplifies the potential of combining traditional and modern approaches to solve complex problems, like hand-object pose estimation.