Welcome to the world of IBM’s Foundation Models for Materials! This guide walks you through using the SMI-TED (SMILES-based Transformer Encoder-Decoder) model for materials science and chemistry research.

Introduction

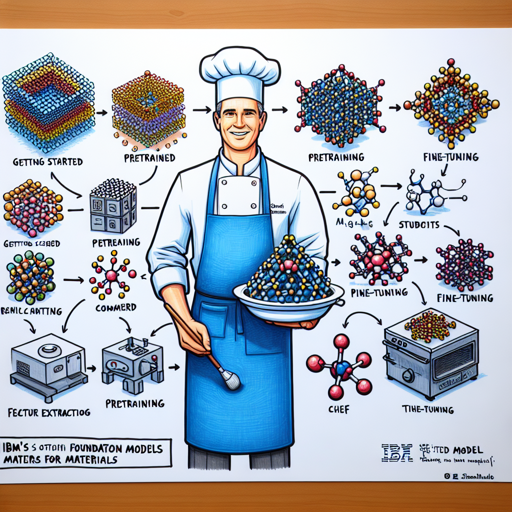

SMI-TED is a large encoder-decoder chemical foundation model pretrained on 91 million SMILES samples. Think of it as a chef who has practiced thousands of recipes: just as the chef learns patterns and techniques that carry over to new dishes, SMI-TED learns patterns in molecular structure that it can apply to predict the properties of new molecules.


Getting Started

The code and environment have been tested on NVIDIA V100 and NVIDIA A100 GPUs.

Pretrained Models and Training Logs

To start, you’ll need to download the pretrained models. After downloading, place them in the following directory structure:

```
inference/
├── smi_ted_light
│   ├── smi_ted_light.pt
│   ├── bert_vocab_curated.txt
│   └── load.py
```

Depending on your task, you may also download the fine-tuning weights similarly, placing them in the appropriate directories.
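Before running anything, it can help to confirm the files landed where the tree above expects them. The sketch below is a hypothetical helper, not part of the SMI-TED repository. It uses only the standard library and assumes you run it from the repository root, so the model folder is `inference/smi_ted_light`.

```python
from pathlib import Path

# Files expected inside inference/smi_ted_light, per the directory tree above.
EXPECTED_FILES = ("smi_ted_light.pt", "bert_vocab_curated.txt", "load.py")


def missing_files(model_dir, expected=EXPECTED_FILES):
    """Return the expected file names that are not present in model_dir."""
    model_dir = Path(model_dir)
    return [name for name in expected if not (model_dir / name).is_file()]


if __name__ == "__main__":
    # Assumption: executed from the repository root.
    missing = missing_files("inference/smi_ted_light")
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("All model files found.")
```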

Replicating Conda Environment

To replicate the environment, follow these steps:

- Create and activate the Conda environment:

```bash
conda create --name smi-ted-env python=3.8.18
conda activate smi-ted-env
```

- Install the required packages:

```bash
conda install pytorch=1.13.1 cudatoolkit=11.4 -c pytorch
conda install numpy=1.23.5 pandas=2.0.3
conda install rdkit=2021.03.5 -c conda-forge
```
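To confirm the environment matches these pins, you can compare each package's reported `__version__` against the versions above. This is a hypothetical helper that the repository does not ship. It assumes each package exposes `__version__` under its import name (`torch`, `numpy`, `pandas`, `rdkit`), and it strips local build tags such as `+cu117` before comparing.

```python
import importlib
import sys

# Versions pinned in the conda commands above, keyed by import name.
PINNED = {
    "torch": "1.13.1",
    "numpy": "1.23.5",
    "pandas": "2.0.3",
    "rdkit": "2021.03.5",
}


def installed_version(module_name):
    """Return the module's __version__ without a '+local' tag, or None if unavailable."""
    try:
        module = importlib.import_module(module_name)
    except ImportError:
        return None
    version = getattr(module, "__version__", None)
    return version.split("+")[0] if version else None


def check_versions(pinned, get_version=installed_version):
    """Return {name: (expected, found)} for every package whose version differs from its pin."""
    mismatches = {}
    for name, expected in pinned.items():
        found = get_version(name)
        if found != expected:
            mismatches[name] = (expected, found)
    return mismatches


if __name__ == "__main__":
    print("Python", ".".join(map(str, sys.version_info[:3])), "(expected 3.8.18)")
    problems = check_versions(PINNED)
    for name, (expected, found) in problems.items():
        print(f"{name}: expected {expected}, found {found or 'not installed'}")
    if not problems:
        print("All pinned packages match.")
```

Passing `get_version` as a parameter keeps the comparison logic testable without importing the heavy packages.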

Pretraining

Pretraining builds the model’s understanding of chemical language using two strategies: a masked language modeling objective trains the encoder, and an encoder-decoder objective refines SMILES reconstruction and improves the learned latent space. The chef analogy applies here too: just as a chef follows recipes and experiments with flavors, SMI-TED learns from a vast dataset to refine its predictions. To start pretraining, run:

```bash
bash training/run_model_light_training.sh
```

You can adjust the training setup to train either the encoder alone or both the encoder and decoder, depending on your requirements.
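To make the encoder's masked-language-modeling objective concrete, here is a generic BERT-style masking sketch over SMILES tokens. It is not SMI-TED's training code. The regex tokenizer follows the pattern popularized by the Molecular Transformer work (SMI-TED's own tokenizer, built around `bert_vocab_curated.txt`, may differ), the `<mask>` token name is hypothetical, and the 15% selection rate with an 80/10/10 split are standard BERT defaults rather than confirmed SMI-TED settings.

```python
import random
import re

# Atom-level SMILES regex (Molecular Transformer style); an assumption, not SMI-TED's tokenizer.
SMILES_PATTERN = re.compile(
    r"(\[[^\]]+]|Br?|Cl?|N|O|S|P|F|I|b|c|n|o|s|p|\(|\)|\.|=|#|-|\+|\\|\/|:|~|@|\?|>|\*|\$|\%[0-9]{2}|[0-9])"
)
MASK_TOKEN = "<mask>"  # hypothetical mask token name


def tokenize(smiles):
    """Split a SMILES string into tokens; raise if any character is left unmatched."""
    tokens = SMILES_PATTERN.findall(smiles)
    if "".join(tokens) != smiles:
        raise ValueError(f"could not fully tokenize {smiles!r}")
    return tokens


def mask_tokens(tokens, vocab, mask_prob=0.15, rng=None):
    """BERT-style masking. Returns (inputs, labels); labels is None where no prediction is made."""
    if rng is None:
        rng = random.Random()
    inputs, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            labels.append(tok)                    # the model must recover this token
            roll = rng.random()
            if roll < 0.8:
                inputs.append(MASK_TOKEN)         # 80%: replace with the mask token
            elif roll < 0.9:
                inputs.append(rng.choice(vocab))  # 10%: replace with a random vocab token
            else:
                inputs.append(tok)                # 10%: keep the original token
        else:
            labels.append(None)
            inputs.append(tok)
    return inputs, labels


if __name__ == "__main__":
    tokens = tokenize("CC(=O)Oc1ccccc1C(=O)O")  # aspirin
    inputs, labels = mask_tokens(tokens, sorted(set(tokens)), rng=random.Random(0))
    print(inputs)
    print(labels)
```

The loss is then computed only at positions where `labels` is not `None`, which is what forces the encoder to infer hidden tokens from their chemical context.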

Finetuning

Once your model is pretrained, it’s time to finetune it for your specific task. The finetuning scripts live under the finetune/ directory. For example, to finetune SMI-TED Light on the ESOL dataset, run:

```bash
bash finetune/smi_ted_light/esol/run_finetune_esol.sh
```

This script finetunes the model on ESOL, a benchmark of measured aqueous solubilities, adapting it to that property-prediction task.
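ESOL is a regression task (predicting log aqueous solubility), and RMSE is the metric commonly reported for it in MoleculeNet-style benchmarks. If you want to score a set of predictions yourself, a dependency-free sketch of RMSE and MAE follows. How you obtain `y_true` and `y_pred` (for example, from files the finetuning run writes) is left open, since the output format isn't covered here.

```python
import math


def _check(y_true, y_pred):
    """Validate that both sequences are non-empty and the same length."""
    if len(y_true) == 0 or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and the same length")


def rmse(y_true, y_pred):
    """Root-mean-squared error between targets and predictions."""
    _check(y_true, y_pred)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mae(y_true, y_pred):
    """Mean absolute error between targets and predictions."""
    _check(y_true, y_pred)
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)
```

RMSE penalizes large errors more heavily than MAE, so reporting both shows whether a model's error is dominated by a few badly predicted molecules.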

Feature Extraction

Feature extraction is where the magic happens! In this phase, the model encodes SMILES strings into embeddings and decodes embeddings back into SMILES. To load the model, run:

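```python
import sys

# Assumption: run from a directory next to inference/ (e.g. notebooks/),
# so that the smi_ted_light folder is importable as a package.
sys.path.append('../inference')
from smi_ted_light.load import load_smi_ted

model = load_smi_ted(
    folder='../inference/smi_ted_light',
    ckpt_filename='smi_ted_light.pt'
)
```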

From there, you can encode SMILES into embeddings or decode embeddings back into SMILES strings. Both calls are wrapped in `torch.no_grad()` because inference needs no gradient tracking:

```python
import torch

# df is a pandas DataFrame with a 'SMILES' column of input molecules
with torch.no_grad():
    encoded_embeddings = model.encode(df['SMILES'], return_torch=True)

with torch.no_grad():
    decoded_smiles = model.decode(encoded_embeddings)
```
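Two common follow-ups are checking how many molecules survive the encode/decode round trip and using the embeddings for similarity search. The helpers below are hypothetical, work on plain Python lists, and assume `decode` returns one SMILES string per input. Exact string matching understates reconstruction quality when the decoder emits an equivalent but differently written SMILES, so canonicalizing both sides first (for example with RDKit's `Chem.MolToSmiles(Chem.MolFromSmiles(s))`) gives a fairer comparison.

```python
import math


def round_trip_accuracy(original, decoded):
    """Fraction of decoded SMILES that exactly match their inputs (plain string comparison)."""
    if len(original) != len(decoded):
        raise ValueError("original and decoded must have the same length")
    if not original:
        return 0.0
    matches = sum(o == d for o, d in zip(original, decoded))
    return matches / len(original)


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def nearest_neighbors(query, embeddings, k=3):
    """Indices of the k embeddings most similar to query, most similar first."""
    scores = [(cosine_similarity(query, e), i) for i, e in enumerate(embeddings)]
    scores.sort(key=lambda s: s[0], reverse=True)
    return [i for _, i in scores[:k]]
```

With the objects from the code above, `encoded_embeddings.tolist()` converts the tensor to nested lists for `nearest_neighbors`, and `round_trip_accuracy(list(df['SMILES']), list(decoded_smiles))` gives the exact-match rate. For large datasets, a vectorized NumPy or PyTorch version of the same cosine computation will be much faster.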

Troubleshooting

If you run into any issues while setting up or using the SMI-TED model, consider the following:

- Check Python and library versions: Ensure you are using the specified versions of Python and its libraries.

- Resource availability: Ensure you have access to suitable GPU resources; the code has been tested on NVIDIA V100 and A100 GPUs.

- Refer to logs: Check the error logs generated during installation or model training.

- Community assistance: Reach out to the wider community for support, or search for similar issues that others have reported.
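For the "Refer to logs" step, a small scanner can pull out lines that look like failures, such as Python tracebacks, `...Error` exceptions, or CUDA out-of-memory messages. This is a hypothetical helper: the patterns are only a starting point, and the log file path is up to you, since log locations depend on how you launched the scripts.

```python
import re
from pathlib import Path

# Heuristic failure markers: tracebacks, exception names ending in "Error", CUDA OOM messages.
ERROR_PATTERN = re.compile(r"Traceback|\w*Error\b|out of memory")


def find_error_lines(lines, pattern=ERROR_PATTERN):
    """Return (line_number, text) pairs for matching lines; numbering starts at 1."""
    return [
        (number, line.rstrip("\n"))
        for number, line in enumerate(lines, start=1)
        if pattern.search(line)
    ]


def scan_log(path):
    """Scan a log file, tolerating undecodable bytes."""
    with Path(path).open(encoding="utf-8", errors="replace") as handle:
        return find_error_lines(handle)
```

The `\b` after `Error` avoids flagging identifiers such as `ErrorHandler` while still catching `RuntimeError:` and similar exception lines.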

For more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.

Conclusion

At fxis.ai, we believe that advancements like SMI-TED are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.