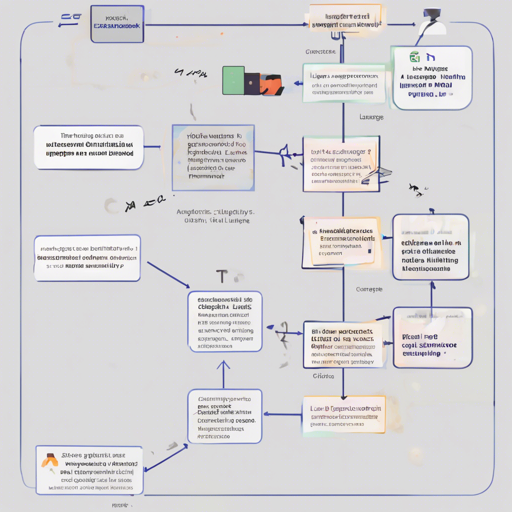

If you want to build extraction applications with LangChain, you’re in the right place. This guide walks you through setting up and using the LangChain Extract repository, a starting point for developing custom extraction applications.

What is LangChain Extract?

LangChain Extract is a simple web server that uses large language models (LLMs) to extract information from text and files. It is built on FastAPI and is meant as a jumping-off point for your own applications, which you can adapt and customize as needed.

Getting Started

Before you can start using LangChain Extract, you’ll need to set it up. Here’s how to do it:

Step 1: Set Up Your Environment

- Check out the latest code from the releases page.

- Clone the repository to your local machine.

Step 2: Running Locally

The easiest way to get started is to use Docker Compose to run the server. Follow these steps:

- Create a file named .local.env in the root directory with your OpenAI API key:

      OPENAI_API_KEY=... # Your OpenAI API key

- Build and start the server:

      docker compose build
      docker compose up

After running the above commands, verify that the server is operational:

    curl -X GET http://localhost:8000/ready

You should receive a response of “ok”. The UI will then be available at localhost:3000.
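Right after `docker compose up`, the server may need a few seconds before it answers. The sketch below polls the readiness endpoint until the response contains "ok". The helper name `wait_for_ready`, the default of 30 attempts, and the one-second pause are assumptions chosen for illustration; they are not part of the repository.

```shell
# wait_for_ready is a hypothetical helper, not part of LangChain Extract.
# It polls the readiness URL until the body contains "ok" or attempts run out.
wait_for_ready() {
  url=${1:-http://localhost:8000/ready}
  attempts=${2:-30}
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # -s hides progress output; -f makes HTTP error statuses return non-zero.
    if curl -sf "$url" 2>/dev/null | grep -q 'ok'; then
      echo "server is ready"
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  echo "server did not become ready after $attempts attempts" >&2
  return 1
}
```

Calling `wait_for_ready http://localhost:8000/ready 60` would wait up to roughly a minute before giving up.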

Step 3: Using the API

Now that your server is up and running, you can start extracting information. Here’s where it gets interesting:

Think of the API operations like a restaurant – you’re placing your order (sending a request), and the kitchen (the server) delivers your meal (response) based on your specifications (parameters and payload).
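The requests in this step send a user ID in the x-key header through the USER_ID shell variable, so that variable has to exist before you run them. A minimal sketch for setting it, assuming the server accepts any unique string as the ID (a random UUID is used here; the fallback path is Linux-specific):

```shell
# Generate a random ID once per terminal session and export it so that
# commands run from this shell can expand ${USER_ID}.
if [ -z "${USER_ID:-}" ]; then
  if command -v uuidgen >/dev/null 2>&1; then
    USER_ID=$(uuidgen)
  else
    # Assumption: Linux kernel interface, used only when uuidgen is missing.
    USER_ID=$(cat /proc/sys/kernel/random/uuid)
  fi
fi
export USER_ID
echo "USER_ID=$USER_ID"
```

Reusing the same value across requests matters, since the ID sent when creating an extractor is presumably the one expected when using it.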

Creating an Extractor

First, you’ll want to create an extractor:

    # Double quotes let the shell expand ${USER_ID}; single quotes would send the literal text.
    curl -X POST \
      http://localhost:8000/extractors \
      -H 'accept: application/json' \
      -H 'Content-Type: application/json' \
      -H "x-key: ${USER_ID}" \
      -d '{
        "name": "Personal Information",
        "description": "Use to extract personal information",
        "schema": {
          "type": "object",
          "title": "Person",
          "required": ["name", "age"],
          "properties": {
            "age": {"type": "integer", "title": "Age"},
            "name": {"type": "string", "title": "Name"}
          }
        },
        "instruction": "Use information about the person from the given user input."
      }'

Extracting Information
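The extract request needs the ID of an extractor, which the server hands back when the extractor is created. Below is a minimal sketch for capturing that ID in a shell variable. It assumes the creation response is a JSON object with a "uuid" field, which this guide does not confirm; `create_extractor` and `person.json` are hypothetical names.

```shell
# create_extractor is a hypothetical helper: it POSTs a JSON definition file
# to /extractors and prints the extractor ID found in the response.
# Assumption: the response is a JSON object containing a "uuid" field.
create_extractor() {
  definition_file=$1
  base_url=${2:-http://localhost:8000}
  curl -s -X POST "$base_url/extractors" \
    -H 'accept: application/json' \
    -H 'Content-Type: application/json' \
    -H "x-key: ${USER_ID}" \
    -d "@${definition_file}" |
    sed -n 's/.*"uuid"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}
```

With the extractor definition saved as person.json, `EXTRACTOR_ID=$(create_extractor person.json)` would store the ID so it can be passed as the extractor_id form field.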

Now, let’s use the extractor to get the information:

    # Replace the extractor_id value with the ID returned when you created the extractor.
    curl -s -X POST http://localhost:8000/extract \
      -H 'accept: application/json' \
      -H 'Content-Type: multipart/form-data' \
      -H "x-key: ${USER_ID}" \
      -F 'extractor_id=e07f389f-3577-4e94-bd88-6b201d1b10b9' \
      -F 'text=my name is chester and i am 20 years old.' \
      -F 'mode=entire_document' \
      -F 'file=' | jq .

Troubleshooting

If you encounter issues while using LangChain Extract, here are a few tips:

- Ensure that your Docker container is running properly and accessible at the correct ports.

- Verify your API key in the .local.env file. A missing or incorrect key can lead to errors.

- Check the server logs for any errors that may point you to what went wrong.

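- The x-key header carries your user ID. Make sure USER_ID is exported in the terminal where you run curl, and that the header is wrapped in double quotes so the shell expands the variable instead of sending the literal text $USER_ID.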
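The checks in the list above can be bundled into one small function. This is a sketch, not a tool shipped with the project: `diagnose` is a hypothetical name, and it assumes you run it from the repository root, where the Compose file and .local.env live.

```shell
# diagnose is a hypothetical helper that runs the checks from the list above.
# Assumption: the current directory is the repository root.
diagnose() {
  # 1. Are the containers up, and which ports do they publish?
  docker compose ps

  # 2. Is the API key present? Report only its presence, never the value.
  if grep -q '^OPENAI_API_KEY=.' .local.env 2>/dev/null; then
    echo "OPENAI_API_KEY is set in .local.env"
  else
    echo "OPENAI_API_KEY is missing or empty in .local.env" >&2
  fi

  # 3. Show the most recent log lines from all services.
  docker compose logs --tail 50

  # 4. Is the user ID visible to this shell?
  if [ -n "${USER_ID:-}" ]; then
    echo "USER_ID is set"
  else
    echo "USER_ID is not set; export it before calling the API" >&2
  fi
}
```

The key check only confirms that a value exists; it cannot tell whether the key itself is valid, so an authentication error in the logs is still worth looking for.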

