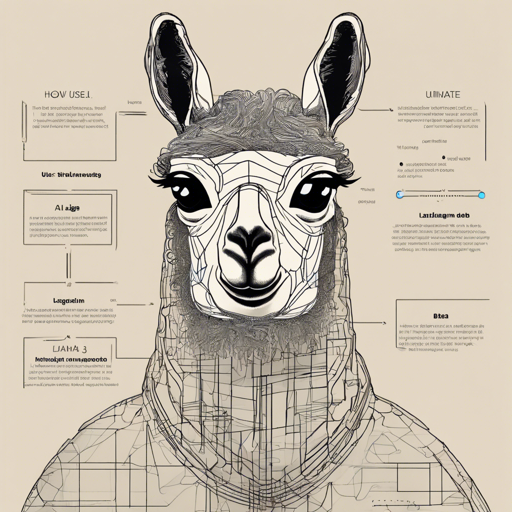

Welcome to the exciting world of AI development! With LLAMA-3_8B_Unaligned_BETA finally available, it’s time for you to dive in, explore its capabilities, and create something amazing. Here’s a step-by-step guide to help you get started using this powerful model.

Understanding LLAMA-3_8B_Unaligned_BETA

Think of LLAMA-3_8B_Unaligned_BETA as an ambitious artist with a passion for storytelling. Just like an artist needs a variety of materials to create a masterpiece, this model has been trained on approximately 50 million tokens, allowing it to generate captivating and coherent texts. However, as with any creative process, the journey may come with its share of challenges.

Step-by-Step Setup

- Step 1: Installation

Make sure you have a working Python environment, then install the required libraries with pip:

pip install transformers torch

- Step 2: Loading the Model

Now, it’s time to load the model. This is similar to selecting the right brush for your painting. Use the code below:

from transformers import AutoModelForCausalLM, AutoTokenizer

# Hugging Face repository IDs take the form "namespace/model-name"
tokenizer = AutoTokenizer.from_pretrained("SicariusSicariiStuff/LLAMA-3_8B_Unaligned_BETA")

model = AutoModelForCausalLM.from_pretrained("SicariusSicariiStuff/LLAMA-3_8B_Unaligned_BETA")

- Step 3: Generating Text

The magic happens here! Feed prompts to the model and let it create content. Think of it as whispering an idea to a poet, who then begins crafting verses in response:

input_text = "Write a thrilling story about adventure and bravery."

input_ids = tokenizer.encode(input_text, return_tensors='pt')

output = model.generate(input_ids, max_length=2048, num_return_sequences=1)

- Step 4: Reviewing the Output

Finally, examine the output. Just like enjoying the final strokes of a painting, you’ll see the creativity unfold:

generated_text = tokenizer.decode(output[0], skip_special_tokens=True)

print(generated_text)

Troubleshooting Tips

Even the best artists encounter roadblocks. Here are some common issues and how to resolve them:

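- Model Not Found Error: Check that the model name you pass to from_pretrained matches the repository ID on the Hugging Face Hub exactly, in the form namespace/model-name. A typo or a malformed ID can lead to this error.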

- Environment Issues: Ensure your Python libraries are up to date. Run the following command to refresh your packages:

pip install --upgrade transformers torch

- Output Doesn’t Make Sense: Sometimes, the model’s creativity can lead to unexpected outputs. Adjust the prompt to be more specific, ensuring it guides the model better.

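- Slow Response Time: With 8 billion parameters, this model is large, and generation may take time, especially on a CPU. Ensure your computing resources are sufficient, and consider running on a GPU, loading the weights in half precision, or generating fewer tokens per request.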
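The last two tips can be sketched in code. The example below is a minimal illustration, not an official recipe: the helper names build_generation_kwargs and generate_text are hypothetical, and the default values (256 new tokens, temperature 0.8, top_p 0.95) are assumptions chosen for demonstration rather than settings recommended by the model's author. It keeps sampling options in one place so they are easy to adjust when the output drifts, and it loads the model in half precision on a GPU when one is available.

```python
def build_generation_kwargs(max_new_tokens=256, temperature=0.8, top_p=0.95):
    """Collect sampling settings for model.generate() in one place.

    The defaults are illustrative assumptions, not tuned values.
    """
    if max_new_tokens <= 0:
        raise ValueError("max_new_tokens must be positive")
    return {
        "max_new_tokens": max_new_tokens,  # cap on generated tokens, prompt excluded
        "do_sample": True,                 # sample instead of greedy decoding
        "temperature": temperature,        # lower values give more focused output
        "top_p": top_p,                    # nucleus sampling threshold
    }


def generate_text(prompt, model_id, **overrides):
    """Load the model on the best available device and generate one completion."""
    # Heavy imports live here so the helper above works without them installed.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    use_gpu = torch.cuda.is_available()
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        # Half precision roughly halves memory use on a GPU; CPUs stay in float32.
        torch_dtype=torch.float16 if use_gpu else torch.float32,
    )
    model.to("cuda" if use_gpu else "cpu")

    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    with torch.no_grad():  # inference only, so skip gradient tracking
        output = model.generate(**inputs, **build_generation_kwargs(**overrides))
    return tokenizer.decode(output[0], skip_special_tokens=True)
```

Calling generate_text("Write a thrilling story about adventure and bravery.", model_id, max_new_tokens=128, temperature=0.6) with the model ID from the setup steps would request a shorter and more conservative completion. Lowering max_new_tokens is usually the quickest way to cut response time.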

Final Thoughts

Now that you’re equipped with the essential steps and knowledge for using LLAMA-3_8B_Unaligned_BETA, don’t hesitate to unleash your creativity and see where your imagination takes you!