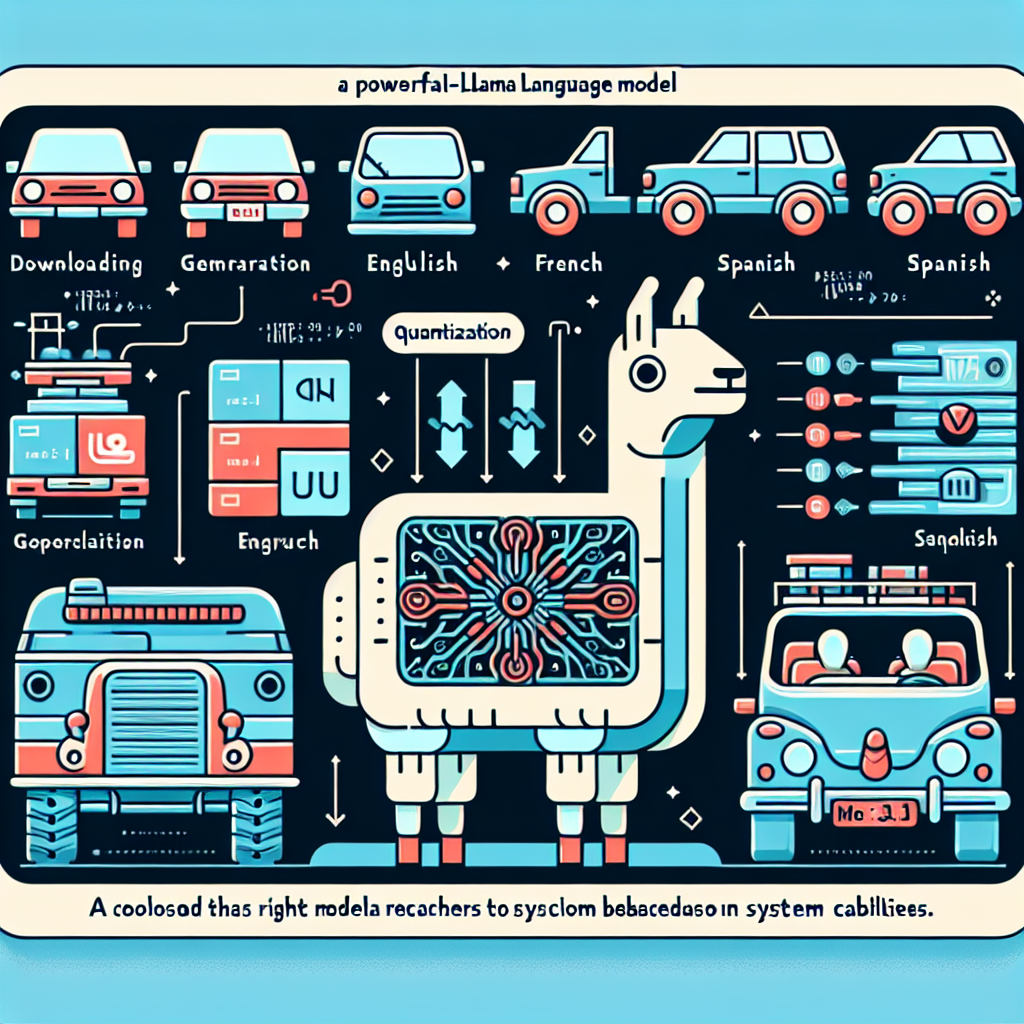

Meta-Llama 3.1 is a powerful language model developed by Meta that allows users to perform text generation tasks across various languages including English, French, and Spanish. Whether you’re an AI researcher, developer, or just curious, this guide will walk you through the essentials of using the model effectively.

Getting Started with Meta-Llama 3.1

To use Meta-Llama 3.1, you’ll first need to download a model file. The model is published in several quantizations with different file sizes, so pick the one that matches your system’s capabilities.

Choosing the Right Quantization

Imagine you’re selecting a vehicle to travel. If you are planning a short trip, you might prefer a compact car. However, for a cross-country journey, an SUV with more capacity might be ideal. Similarly, when choosing a quantization option for Meta-Llama 3.1, you must consider your hardware specifications.

– Full Model (f32): Comparable to your spacious SUV. It keeps the full-precision weights, so it gives the highest fidelity but also uses the most memory.

– Higher Quality Quantization (from Q5 to Q8): These suit anyone who needs high-quality output without maxing out their resources. Think of these as hatchbacks: smaller but still comfortable.

– Lower Quality Quantization (Q3 and below): These are your compact cars, ideal for lower-end systems but with a noticeable loss in output quality.

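Select the quant based on your system’s RAM and VRAM: the model file has to fit in memory, with some room left over for the context. Q4 variants, such as the Q4_K_M file used in the download command below, fall between the two quantized tiers above.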
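The selection rule above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the helper `pick_quant`, the 2 GB `headroom_gb` default, and the example sizes are all hypothetical, and the sketch treats a larger file as a higher-quality quant, which is a rough rule of thumb rather than a guarantee.

```python
from typing import Dict, Optional


def pick_quant(file_sizes_gb: Dict[str, float],
               memory_gb: float,
               headroom_gb: float = 2.0) -> Optional[str]:
    """Return the largest quant that fits in memory, or None if none fits.

    headroom_gb is an assumed safety margin for context and runtime overhead.
    """
    budget = memory_gb - headroom_gb
    fitting = {name: size for name, size in file_sizes_gb.items() if size <= budget}
    if not fitting:
        return None
    # Largest file that still fits is taken as the best-quality choice.
    return max(fitting, key=fitting.get)


# Placeholder sizes for illustration only; read the real ones from the
# file list of the repository you download from.
example_sizes_gb = {"Q3_K_M": 4.0, "Q4_K_M": 5.0, "Q6_K": 6.5, "Q8_0": 8.5}
print(pick_quant(example_sizes_gb, memory_gb=8.0))
```

With 8 GB of memory and the assumed 2 GB of headroom, the budget is 6 GB, so the sketch skips Q6_K and Q8_0 and settles on the Q4 file.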

Downloading the Model

You can download the model using the `huggingface-cli`. First, install it using the command:

pip install -U "huggingface_hub[cli]"

To download a specific file, use:

huggingface-cli download bartowski/Meta-Llama-3.1-8B-Instruct-GGUF --include "Meta-Llama-3.1-8B-Instruct-Q4_K_M.gguf" --local-dir ./

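Very large quantizations may be split into multiple files stored in a folder. To download every file in that folder, add a trailing wildcard to the pattern:

huggingface-cli download bartowski/Meta-Llama-3.1-8B-Instruct-GGUF --include "Meta-Llama-3.1-8B-Instruct-Q8_0.gguf/*" --local-dir Meta-Llama-3.1-8B-Instruct-Q8_0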
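If you would rather download from a script, the same single-file download can be sketched with the `huggingface_hub` Python package that the install command above provides. The helper names `gguf_filename` and `download_quant` are hypothetical, and the file-naming pattern is inferred from the single-file command in this guide, so check it against the repository’s file list.

```python
REPO_ID = "bartowski/Meta-Llama-3.1-8B-Instruct-GGUF"
MODEL_NAME = "Meta-Llama-3.1-8B-Instruct"


def gguf_filename(quant: str) -> str:
    """Build the file name for a quant, e.g. 'Q4_K_M' (pattern assumed from the CLI example)."""
    return f"{MODEL_NAME}-{quant}.gguf"


def download_quant(quant: str, local_dir: str = ".") -> str:
    """Download one GGUF file and return its local path."""
    # Imported here so gguf_filename() works even without the package installed.
    from huggingface_hub import hf_hub_download

    return hf_hub_download(
        repo_id=REPO_ID,
        filename=gguf_filename(quant),
        local_dir=local_dir,
    )


# Usage (requires network access):
# path = download_quant("Q4_K_M")
```

This covers single-file quants only; for a quant that is split across a folder, the wildcard form of the CLI command is the simpler route.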

Making Your First Text Generation Request

Once you have your model downloaded, you’ll need to format the prompt you send to the model. The prompt format looks like this:

<|begin_of_text|><|start_header_id|>system<|end_header_id|>{system_prompt}<|eot_id|><|start_header_id|>user<|end_header_id|>{prompt}<|eot_id|><|start_header_id|>assistant<|end_header_id|>

This structure tells the model where the sequence begins, which role each message belongs to, and where each turn ends. The trailing assistant header cues the model to write its reply.
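Filling in the template by hand is error-prone, so here is a minimal sketch of a helper that assembles it. The function name `build_prompt` and its `header_sep` parameter are hypothetical. One assumption to flag: Meta’s published Llama 3 template places two newlines after each `<|end_header_id|>`, which the single-line form above does not show, so the sketch inserts them by default; pass `header_sep=""` to reproduce the single-line form exactly.

```python
def build_prompt(system_prompt: str, user_prompt: str, header_sep: str = "\n\n") -> str:
    """Assemble a single-turn Llama 3.1 prompt from a system and a user message."""

    def header(role: str) -> str:
        # Role header followed by the separator (two newlines by default).
        return f"<|start_header_id|>{role}<|end_header_id|>{header_sep}"

    return (
        "<|begin_of_text|>"
        + header("system") + system_prompt + "<|eot_id|>"
        + header("user") + user_prompt + "<|eot_id|>"
        + header("assistant")  # left open so the model writes the reply
    )


print(build_prompt("You are a concise assistant.", "Name three prime numbers."))
```

The resulting string is what you pass to your GGUF runtime as the raw prompt. Some runtimes may add `<|begin_of_text|>` on their own, so it is worth checking that the token does not end up duplicated.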

Troubleshooting Common Issues

You might encounter a few bumps along the road. Here are some common issues and their fixes:

– Model Not Loading: Ensure that the file paths are correct and that the model files are fully downloaded.

– Insufficient Memory: If you run into out-of-memory errors, consider using a lower quantization model or freeing up resources on your system.

– Unexpected Output: Check the prompt format for syntax errors and ensure that your prompt is clear and well-structured.
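For the “Model Not Loading” case, a quick sanity check on the downloaded file can rule out the most common causes. The sketch below uses a hypothetical helper, `check_gguf`, that confirms the path exists and that the file starts with the 4-byte `GGUF` magic that GGUF files begin with. It catches wrong paths, empty files, and saved error pages, but it cannot prove that a download finished; for that, compare the file size with the one listed in the repository.

```python
import os

GGUF_MAGIC = b"GGUF"  # first four bytes of a GGUF file


def check_gguf(path: str) -> str:
    """Return 'ok' or a short description of what looks wrong with the file."""
    if not os.path.isfile(path):
        return "missing"
    if os.path.getsize(path) < len(GGUF_MAGIC):
        return "too small"
    with open(path, "rb") as f:
        if f.read(len(GGUF_MAGIC)) != GGUF_MAGIC:
            return "not a GGUF file"
    return "ok"


# Usage, with the file name from the download command earlier in this guide:
# print(check_gguf("./Meta-Llama-3.1-8B-Instruct-Q4_K_M.gguf"))
```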

For further troubleshooting questions or issues, contact our fxis.ai data science team.

Conclusion

Using Meta-Llama 3.1 can feel like embarking on a grand road trip: with the right vehicle (quantization) and maps (prompts), the journey goes smoothly. With this guide, you’re well on your way to using this model for your text generation projects. Happy coding!