MultiBERTs is a collection of BERT checkpoints pretrained with identical settings but different random seeds. They were released so that researchers can check whether their conclusions about BERT hold across pretraining runs, instead of depending on one particular run. In this blog, we will guide you through using the MultiBERTs Seed 0 checkpoint in your projects, explain the key concepts in simple terms, and share troubleshooting tips to make your experience smooth.

What are MultiBERTs?

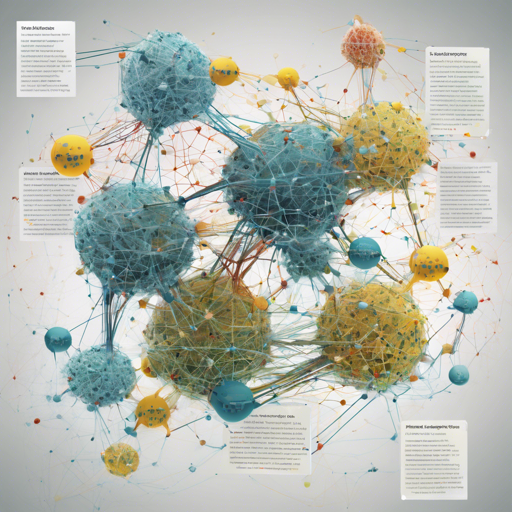

Like the original BERT, MultiBERTs are transformer encoder models pretrained on a large corpus of English text in a self-supervised fashion. They do not generate text. Instead, they learn contextual representations of words, which you can use as features or fine-tune for downstream tasks. Pretraining combines two objectives:

- Masked Language Modeling (MLM): About 15% of the words in an input sentence are masked at random. The model must predict the masked words using the context on both sides of each gap.

- Next Sentence Prediction (NSP): The model receives two sentences joined together and predicts whether the second one actually followed the first in the original text. This teaches it about relationships between sentences.
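To make the two objectives more concrete, here is a simplified, pure-Python sketch of how MLM and NSP training examples can be built. It follows the masking recipe described in the original BERT paper:

- Tokens are selected with 15% probability.
- Of the selected tokens, 80% become `[MASK]`, 10% become a random token, and 10% stay unchanged.
- NSP uses a 50/50 split between true next sentences and random ones.

This is a sketch under stated assumptions, not the code used to pretrain MultiBERTs:

- The helper names `mask_tokens` and `make_nsp_example` are hypothetical.
- It works on whole words rather than WordPiece tokens.
- It selects each token independently instead of picking an exact count.

```python
import random

MASK_TOKEN = "[MASK]"


def mask_tokens(tokens, vocab, mask_prob=0.15, rng=None):
    """Simplified BERT-style masking for the MLM objective (hypothetical helper).

    Each token is independently selected as a prediction target with
    probability `mask_prob`. A selected token is replaced by [MASK] 80% of
    the time, by a random token from `vocab` 10% of the time, and kept
    unchanged the remaining 10% of the time.

    Returns (corrupted_tokens, labels), where labels[i] is the original
    token if position i must be predicted, else None.
    """
    if rng is None:
        rng = random.Random()
    corrupted, labels = [], []
    for token in tokens:
        if rng.random() < mask_prob:
            labels.append(token)  # the model must recover this original token
            roll = rng.random()
            if roll < 0.8:
                corrupted.append(MASK_TOKEN)
            elif roll < 0.9:
                corrupted.append(rng.choice(vocab))
            else:
                corrupted.append(token)
        else:
            labels.append(None)  # not a prediction target
            corrupted.append(token)
    return corrupted, labels


def make_nsp_example(document, index, other_sentences, rng=None):
    """Build one NSP example from sentence `index` of `document` (hypothetical helper).

    With probability 0.5 the second sentence is the true next sentence
    (label True, "IsNext"). Otherwise it is drawn from `other_sentences`,
    standing in for a sentence from a different document (label False, "NotNext").
    """
    if index + 1 >= len(document):
        raise ValueError("index must leave room for a following sentence")
    if rng is None:
        rng = random.Random()
    first = document[index]
    if rng.random() < 0.5:
        return first, document[index + 1], True
    return first, rng.choice(other_sentences), False


# Example usage with a fixed seed so the run is reproducible.
rng = random.Random(0)
words = "the cat sat on the mat".split()
print(mask_tokens(words, vocab=words, rng=rng))
doc = ["The cat sat on the mat.", "Then it fell asleep."]
pool = ["Stock prices rose sharply today."]
print(make_nsp_example(doc, 0, pool, rng=rng))
```

Fixing the seed of `random.Random` makes the corrupted examples reproducible, which helps when inspecting them by hand.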

How to Use MultiBERTs

To use the MultiBERTs Seed 0 model, follow the steps below. To keep things simple, we'll compare the process to baking a cake: each ingredient and step matters for a successful outcome!

Step-by-Step Guide

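- **Install Required Libraries**: Make sure you have the `transformers` library installed. You also need PyTorch, because the tokenizer call below asks for PyTorch tensors (`return_tensors='pt'`). You can install both via pip:

```bash
pip install transformers torch
```

- **Import the Necessary Packages**: Just like you need flour and sugar to bake, you need the proper imports for your model:

```python
from transformers import BertTokenizer, BertModel
```

- **Load the Pre-trained Model**: Think of this step as preheating your oven to get it ready for baking. Load both the tokenizer and the model weights from the checkpoint:

```python
tokenizer = BertTokenizer.from_pretrained('multiberts-seed-0-1700k')
model = BertModel.from_pretrained('multiberts-seed-0-1700k')
```

- **Prepare Your Text**: Just like measuring ingredients carefully, prepare the text you wish to analyze:

```python
text = "Replace me by any text you'd like."
```

- **Tokenize the Text**: Turn your prepared text into input IDs, token type IDs and an attention mask that the model can understand:

```python
encoded_input = tokenizer(text, return_tensors='pt')
```

- **Get Model Outputs**: Finally, run your input through the model to get the features, akin to taking your cake out of the oven:

```python
output = model(**encoded_input)
# output.last_hidden_state holds one feature vector per token,
# with shape (batch_size, sequence_length, hidden_size)
```

Troubleshooting Common Issues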

If you encounter problems while using the MultiBERTs Seed 0 checkpoint, consider the following troubleshooting tips:

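- The checkpoint is downloaded from the Hugging Face Hub the first time you load it. If you receive a model loading error, check that your internet connection is stable. Also make sure the model identifier exactly matches the checkpoint's name on the Hub, including any organization prefix.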

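- If you run into memory issues, try the following:
  - Shorten your inputs, for example by passing `truncation=True, max_length=512` to the tokenizer, since BERT-style models accept at most 512 tokens.
  - Process texts in smaller batches.
  - Run inference inside `with torch.no_grad():` so that no gradients are stored.
  - Use a machine with more RAM.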

- Be aware that the model can produce biased predictions, because it inherits biases present in its training data. If you notice biased outputs, refer to the discussions of bias in the BERT repository for more insights.
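The memory tip about shortening inputs can be made concrete. For a text longer than the model's input limit, truncation is not the only option: you can also split its token IDs into overlapping windows and run each window through the model separately. Small batches then keep peak memory down.

Below is a minimal pure-Python sketch of both ideas. These parts are illustrative assumptions, not part of MultiBERTs or `transformers`:

- The helper names `sliding_windows` and `iter_batches`.
- The 510-token default, which assumes a 512-token limit with two slots reserved for `[CLS]` and `[SEP]`.
- The stride value.

You would still add the special tokens back to each window before calling the model. How you combine per-window features afterwards depends on your application.

```python
def sliding_windows(token_ids, max_len=510, stride=128):
    """Split a long list of token IDs into overlapping windows (hypothetical helper).

    Each window holds at most `max_len` IDs. Consecutive windows share
    `stride` IDs, so tokens near a window boundary still get some context.
    """
    if not 0 <= stride < max_len:
        raise ValueError("stride must be in [0, max_len)")
    if len(token_ids) <= max_len:
        return [list(token_ids)]  # short input: a single window suffices
    step = max_len - stride
    windows = []
    for start in range(0, len(token_ids), step):
        windows.append(list(token_ids[start:start + max_len]))
        if start + max_len >= len(token_ids):
            break  # this window already reaches the end of the sequence
    return windows


def iter_batches(items, batch_size):
    """Yield consecutive slices of `items` with at most `batch_size` elements each."""
    if batch_size < 1:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


ids = list(range(1200))  # stand-in for the token IDs of a long text
windows = sliding_windows(ids, max_len=510, stride=128)
print([(w[0], w[-1]) for w in windows])  # [(0, 509), (382, 891), (764, 1199)]
print([len(b) for b in iter_batches(["a", "b", "c", "d", "e"], batch_size=2)])  # [2, 2, 1]
```

If you use a fast tokenizer, `transformers` can also produce overlapping windows through the tokenizer's `return_overflowing_tokens` and `stride` arguments. Check the tokenizer documentation for your installed version before relying on that behaviour.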

For more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.

Conclusion

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.