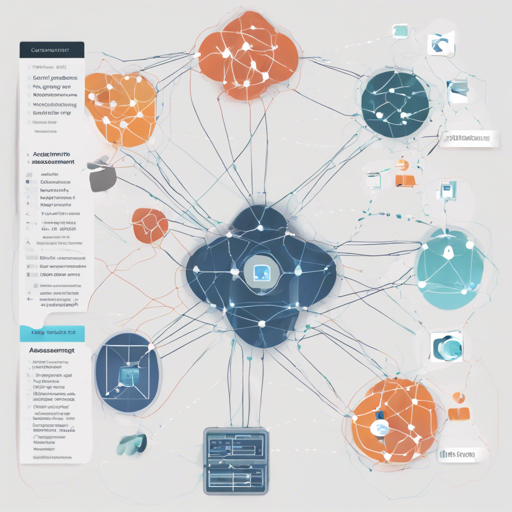

Welcome to Neural Image Assessment (NIMA) in PyTorch. This project lets you rate the aesthetic quality of images with a trained model. Below is a step-by-step guide to installation, the dataset, and usage.

Installing PyTorch NIMA

The installation process can vary depending on the method you prefer. Here are three methods you can use:

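- Docker: for a containerized environment, run `docker run -it truskovskiyk/nima:latest /bin/bash`.

- pip: install the package with `pip install nima`.

- From source: clone the repository with `git clone https://github.com/truskovskiyk/nima.pytorch.git`, change into it with `cd nima.pytorch`, create a Python 3.7 virtual environment with `virtualenv -p python3.7 env`, and activate it with `source ./env/bin/activate`.

Dataset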

The NIMA model is trained on the AVA (Aesthetic Visual Analysis) dataset. You can acquire the dataset from here. Below are examples of images with their scores:

Usage

Once you have completed the installation and dataset preparation, you can start using the command line interface for NIMA:

`nima-cli`

It provides the following commands:

- get_image_score: Get image scores.

- prepare_dataset: Parse, clean, and split the dataset.

- run_web_api: Start the server for model serving.

- train_model: Train the model with your dataset.

- validate_model: Validate the trained model’s performance.
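For context on what `train_model` and `validate_model` optimize: according to the original NIMA article, the model predicts a probability distribution over the scores 1 to 10 rather than a single number, and it is trained with a normalized Earth Mover's Distance (EMD) loss that compares cumulative distributions, with exponent r = 2. The sketch below computes that loss in plain Python for readability. It is not taken from the `nima` package, whose training code works on PyTorch tensors and may differ in details; the function name `emd_loss` is hypothetical.

```python
from itertools import accumulate
from typing import Sequence


def emd_loss(p_true: Sequence[float], p_pred: Sequence[float], r: int = 2) -> float:
    """Normalized Earth Mover's Distance between two score distributions.

    Both inputs are probability distributions over the same ordered score
    buckets (e.g. scores 1..10). For ordered 1-D buckets, EMD reduces to
    comparing the cumulative distributions bucket by bucket.
    """
    if len(p_true) != len(p_pred):
        raise ValueError("distributions must have the same number of buckets")
    cdf_true = list(accumulate(p_true))  # running sums = CDF of the target
    cdf_pred = list(accumulate(p_pred))  # running sums = CDF of the prediction
    n = len(cdf_true)
    total = sum(abs(a - b) ** r for a, b in zip(cdf_true, cdf_pred))
    return (total / n) ** (1.0 / r)


# Made-up distributions over scores 1..10, for illustration only.
target = [0.0, 0.0, 0.1, 0.2, 0.4, 0.2, 0.1, 0.0, 0.0, 0.0]
shifted = [0.0, 0.0, 0.0, 0.1, 0.2, 0.4, 0.2, 0.1, 0.0, 0.0]  # same shape, one bucket higher
print(emd_loss(target, target))
print(emd_loss(target, shifted))
```

Comparing cumulative distributions means a prediction that is off by one score bucket costs less than one that is off by five, an ordering that a per-bucket loss such as cross-entropy ignores.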

Troubleshooting Tips

If you encounter any issues during installation or usage, consider the following:

- Ensure Docker is installed and running correctly if you’re using the Docker method.

- Check your Python and pip versions for compatibility with the library; the source install above uses Python 3.7.

- Make sure you have access to the AVA dataset and that it’s properly formatted.

- If a command fails, double-check the command name and its arguments.
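The Python version and command checks above can be scripted. This is a minimal sketch under two assumptions: Python 3.7 (taken from the `virtualenv -p python3.7` step) is treated as the minimum version, which is not a documented requirement of the `nima` package, and a working install exposes `nima-cli` on your `PATH`. The function name `check_environment` is hypothetical.

```python
import shutil
import sys
from typing import List

# Assumption: the source install pins Python 3.7, so treat it as the minimum.
MIN_PYTHON = (3, 7)


def check_environment(need_docker: bool = False) -> List[str]:
    """Return human-readable problems; an empty list means every check passed."""
    problems = []
    if sys.version_info < MIN_PYTHON:
        found = ".".join(str(part) for part in sys.version_info[:3])
        problems.append(f"Python {found} is older than the assumed minimum 3.7")
    # shutil.which searches PATH for an executable with this name.
    if shutil.which("nima-cli") is None:
        problems.append("nima-cli not found on PATH (is the virtualenv activated?)")
    if need_docker and shutil.which("docker") is None:
        problems.append("docker not found on PATH")
    return problems


if __name__ == "__main__":
    issues = check_environment()
    for issue in issues:
        print("-", issue)
    if not issues:
        print("Environment looks OK.")
```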

For more insights, updates, or to collaborate on AI development projects, stay connected with **fxis.ai**.

Previous Versions

The legacy version of this project is still accessible here for those interested in exploring earlier functionalities.

Contributing and License

Contributions that extend NIMA's capabilities are welcome. The project is released under the MIT License.

Acknowledgements

This project wouldn’t be possible without the following resources:

- neural-image-assessment in Keras

- Neural-Image-Assessment in PyTorch

- pytorch-mobilenet-v2

- Original NIMA article

- Original MobileNetV2 article

- Post on the Google Research Blog

- Heroku: Cloud Application Platform


Understanding the Code: An Analogy

Think of the NIMA model as a trained art critic. Rather than studying a painting or photograph at length, this critic judges its aesthetic value quickly, drawing on what it learned beforehand (the equivalent of training on a dataset). Show the critic an image and it returns a score, just as the NIMA model outputs scores based on what it learned during training.
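To make the critic's "score" concrete: per the original NIMA article, the model predicts a distribution over the scores 1 to 10, mirroring AVA, where each image carries a histogram of votes rather than a single rating. The single aesthetic score is the mean of that distribution, and its standard deviation indicates how much raters disagree. The sketch below assumes a whitespace-separated line laid out like AVA's `AVA.txt` (index, image ID, ten vote counts for scores 1 to 10, then tag and challenge IDs); the helpers `parse_ava_line` and `score_stats` are hypothetical and not part of `nima`.

```python
import math
from typing import List, Tuple


def parse_ava_line(line: str) -> Tuple[str, List[float]]:
    """Hypothetical parser returning (image_id, normalized score distribution).

    Assumed layout: index, image_id, ten vote counts for scores 1..10, ...
    """
    fields = line.split()
    if len(fields) < 12:
        raise ValueError(f"expected at least 12 fields, got {len(fields)}")
    image_id = fields[1]
    counts = [int(c) for c in fields[2:12]]
    total = sum(counts)
    if total == 0:
        raise ValueError(f"image {image_id} has no votes")
    # Normalize vote counts into a probability distribution over scores 1..10.
    return image_id, [c / total for c in counts]


def score_stats(dist: List[float]) -> Tuple[float, float]:
    """Mean and standard deviation of a distribution over scores 1..len(dist)."""
    scores = range(1, len(dist) + 1)
    mean = sum(s * p for s, p in zip(scores, dist))
    var = sum((s - mean) ** 2 * p for s, p in zip(scores, dist))
    return mean, math.sqrt(var)


# Made-up vote counts in the assumed layout (not a real AVA record).
image_id, dist = parse_ava_line("1 12345 0 0 1 2 4 2 1 0 0 0 0 0 1")
mean, std = score_stats(dist)
print(image_id, round(mean, 3), round(std, 3))
```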