If you want to bring Arabic-language understanding to your AI applications, the AraBERTMo model is a strong choice. It is a pre-trained language model based on Google’s BERT architecture and tailored specifically for Arabic. In this article, we walk through how to load the model, review its pretraining results, and troubleshoot common issues.

Understanding AraBERTMo

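AraBERTMo_base is an Arabic pre-trained language model that uses the BERT-Base configuration, which makes it suitable for a range of natural language processing tasks. Like BERT (Bidirectional Encoder Representations from Transformers), it looks at the words on both sides of each token, so its representations capture context from the whole sentence rather than only from the words that come before.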
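BERT-style models learn this bidirectional context through masked language modeling: some tokens are hidden and the model must recover them from the surrounding words. The sketch below shows the masking recipe from the original BERT setup (select about 15% of tokens; replace 80% of those with [MASK], 10% with a random token, and leave 10% unchanged). The source does not say whether AraBERTMo used exactly this recipe, and MASK_ID and VOCAB_SIZE are hypothetical values for a toy vocabulary. Special tokens are not excluded here, for simplicity.

```python
import random

MASK_ID = 4          # hypothetical [MASK] token id for a toy vocabulary
VOCAB_SIZE = 100     # hypothetical vocabulary size
IGNORE_INDEX = -100  # label value that PyTorch's CrossEntropyLoss ignores by default


def mask_tokens(token_ids, mlm_probability=0.15, seed=None):
    """BERT-style masking: choose ~mlm_probability of positions as prediction
    targets; of those, 80% become [MASK], 10% a random token, 10% stay as-is."""
    rng = random.Random(seed)
    inputs = list(token_ids)
    labels = [IGNORE_INDEX] * len(inputs)
    for i, tok in enumerate(token_ids):
        if rng.random() >= mlm_probability:
            continue  # not a prediction target; the loss skips this position
        labels[i] = tok  # the model must recover the original token here
        roll = rng.random()
        if roll < 0.8:
            inputs[i] = MASK_ID  # 80%: hide the token behind [MASK]
        elif roll < 0.9:
            inputs[i] = rng.randrange(VOCAB_SIZE)  # 10%: random replacement
        # remaining 10%: keep the original token unchanged
    return inputs, labels


if __name__ == "__main__":
    inputs, labels = mask_tokens(list(range(10, 30)), seed=0)
    print(inputs)
    print(labels)
```

Keeping some targets unmasked or randomized means the model cannot rely on seeing [MASK] to know where to predict, which matters because [MASK] never appears in real downstream inputs.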

Getting Started with AraBERTMo

Before you dive in, make sure the necessary libraries are installed. You need PyTorch or TensorFlow, along with the Hugging Face transformers library. Set up your environment as follows:

- Install PyTorch or TensorFlow.

- Install the Hugging Face Transformers library.
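Before loading anything, it can help to confirm which of these packages Python can actually find. The helper below is a small sketch using only the standard library's importlib.util.find_spec; the function name available_backends is our own, not part of any library.

```python
import importlib.util


def available_backends(candidates=("torch", "tensorflow")):
    """Return the candidate top-level packages that are importable here."""
    return [name for name in candidates if importlib.util.find_spec(name) is not None]


if __name__ == "__main__":
    backends = available_backends()
    print("Deep learning backends found:", backends or "none")
    print("transformers installed:", importlib.util.find_spec("transformers") is not None)
```

find_spec only locates the package without importing it, so the check stays fast even when a large framework is installed.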

Loading the Pre-trained Model

With your environment ready, you can load the AraBERTMo model. The snippet below uses the PyTorch model class AutoModelForMaskedLM, so it requires PyTorch:

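```python
# Requires PyTorch and the Hugging Face transformers library
from transformers import AutoTokenizer, AutoModelForMaskedLM

# Download (on first use) and load the tokenizer and masked-language-model weights
tokenizer = AutoTokenizer.from_pretrained("Ebtihal/AraBertMo_base_V8")
model = AutoModelForMaskedLM.from_pretrained("Ebtihal/AraBertMo_base_V8")
```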

A Closer Look at the Code

Think of loading the model as inviting a friend into your home. You first prepare your place (installing the libraries); then, when your friend arrives (loading the model), you greet them and tell them what you need (initializing the tokenizer and model). The tokenizer converts Arabic text into the tokens the model expects, and the masked-language model draws on its pre-trained knowledge to predict missing (masked) words in a sentence. Its representations can also be fine-tuned for downstream Arabic NLP tasks.
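To see how a masked-word prediction is chosen, the sketch below mimics, in plain Python, roughly what the transformers fill-mask pipeline does with the model's output: apply a softmax to the scores (logits) at the [MASK] position and keep the top-k tokens. The four-word vocabulary and the scores are hypothetical; a real model scores its entire vocabulary.

```python
import math


def top_k_predictions(logits, vocab, k=3):
    """Softmax the scores for the [MASK] position and return the k most
    likely (token, probability) pairs, highest probability first."""
    m = max(logits)  # subtract the max before exponentiating for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    ranked = sorted(zip(vocab, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Hypothetical scores a model might assign to a tiny vocabulary at the masked slot
vocab = ["كتاب", "قلم", "بيت", "سيارة"]
logits = [2.0, 0.5, 1.0, -1.0]

for token, prob in top_k_predictions(logits, vocab, k=2):
    print(token, round(prob, 3))
```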

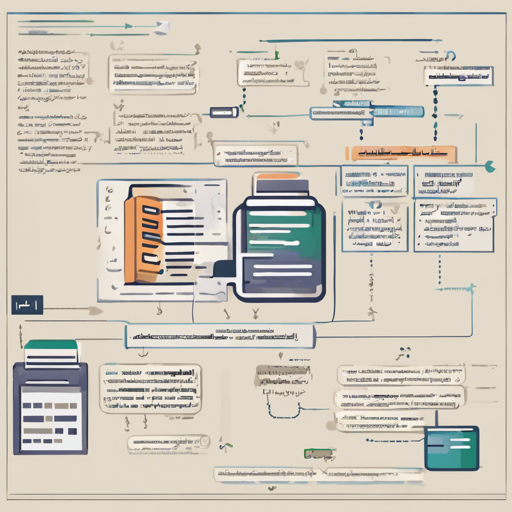

Results of Pretraining

The AraBERTMo_base_V8 model was pre-trained using approximately 3 million words from the Arabic version of the OSCAR dataset. Below are some statistics regarding the training results:

| Task | Examples | Epochs | Batch size | Steps | Wall time | Training loss |
|---|---|---|---|---|---|---|
| Fill-Mask | 40,032 | 8 | 64 | 5,008 | 10h 5m 57s | 7.2164 |
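The Steps column follows from the other columns. The check below assumes a single device, no gradient accumulation, and that the final partial batch of each epoch is kept (the source states none of these): 40,032 / 64 = 625.5, which rounds up to 626 steps per epoch, and 626 × 8 = 5,008. Rounding down instead would give 5,000, so the table is consistent with keeping the partial batch.

```python
import math


def total_training_steps(num_examples, batch_size, num_epochs):
    """Optimizer steps when each epoch keeps its last, partial batch."""
    steps_per_epoch = math.ceil(num_examples / batch_size)
    return steps_per_epoch * num_epochs


steps = total_training_steps(num_examples=40_032, batch_size=64, num_epochs=8)
print(steps)  # 5008, matching the Steps column
```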

Troubleshooting Common Issues

Sometimes, you may encounter issues while working with the AraBERTMo model. Here are some troubleshooting tips:

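- Model Not Found: Check that the model ID is spelled exactly as Ebtihal/AraBertMo_base_V8 (note the capitalization AraBertMo in the ID) and that your machine can reach the Hugging Face Hub to download it.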

- Library Imports: Make sure your script imports AutoTokenizer and AutoModelForMaskedLM from transformers before using them.

- Version Compatibility: Check if your PyTorch or TensorFlow version is compatible with the Hugging Face transformers library.
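When checking compatibility, start by finding out exactly which versions are installed. This sketch uses the standard library's importlib.metadata (Python 3.8+); the helper name installed_versions is our own. It looks up distribution names, so a TensorFlow variant installed under a different name (for example, tensorflow-cpu) would need to be added to the list.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(packages=("transformers", "torch", "tensorflow")):
    """Map each distribution name to its installed version, or None if missing."""
    report = {}
    for name in packages:
        try:
            report[name] = version(name)
        except PackageNotFoundError:
            report[name] = None
    return report


if __name__ == "__main__":
    for name, ver in installed_versions().items():
        print(f"{name}: {ver or 'not installed'}")
```

Including this output in a bug report or forum question makes version mismatches much easier to diagnose.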

For more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.

Conclusion

At fxis.ai, we believe that advancements such as language-specific models like AraBERTMo are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.

Final Thoughts

With the steps above, you are now equipped to leverage the power of the AraBERTMo Arabic language model. Dive into the delightful world of Arabic NLP, and enhance your AI projects today!