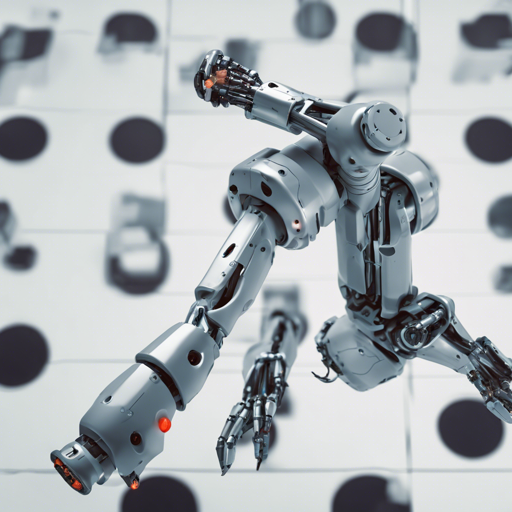

Welcome to this user-friendly guide on using Deep Reinforcement Learning for robotic pick-and-place tasks driven purely by visual observations. Whether you're a novice or looking to polish your Python and robotics skills, this blog has you covered!

Getting Started

This repository contains Python classes for controlling a robotic arm in the MuJoCo physics simulator. You'll set up a reinforcement learning agent that performs pick-and-place tasks using RGB-D (color + depth) images as its observations. Here is how to get started.
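If RGB-D data is new to you, here is a minimal sketch of how a color image and a depth map can be combined into one four-channel observation array. The function name `make_rgbd_observation`, the input shapes and units (uint8 color, depth in meters), and the 2-meter depth cap are illustrative assumptions, not taken from the repository.

```python
import numpy as np


def make_rgbd_observation(rgb: np.ndarray, depth: np.ndarray,
                          max_depth: float = 2.0) -> np.ndarray:
    """Stack a color image and a depth map into one H x W x 4 float32 array.

    Assumptions (hypothetical, for illustration): `rgb` is uint8 in [0, 255]
    with shape (H, W, 3); `depth` is in meters with shape (H, W).
    """
    if rgb.shape[:2] != depth.shape:
        raise ValueError("RGB and depth images must share the same height and width")
    # Scale the color channels to [0, 1].
    color = rgb.astype(np.float32) / 255.0
    # Clip depth to an assumed working range, then scale it to [0, 1] as well.
    d = np.clip(depth.astype(np.float32), 0.0, max_depth) / max_depth
    # Append depth as a fourth channel: (H, W, 3) + (H, W, 1) -> (H, W, 4).
    return np.concatenate([color, d[..., np.newaxis]], axis=-1)


if __name__ == "__main__":
    rgb = np.random.randint(0, 256, size=(64, 64, 3), dtype=np.uint8)
    depth = np.random.uniform(0.3, 1.5, size=(64, 64))
    obs = make_rgbd_observation(rgb, depth)
    print(obs.shape, obs.dtype)
```

Scaling both modalities to a similar range is a common choice before feeding them to a convolutional network; check the repository's code for the preprocessing it actually applies.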

Setup Instructions

- Install MuJoCo:

Download and install MuJoCo from the official MuJoCo website. Make sure to set up and activate a license as well.

- Clone the Repository:

Run the following command in your terminal to clone the repository:

git clone https://github.com/PaulDanielML/MuJoCo_RL_UR5.git

- Navigate to the Directory:

Change into the newly created directory:

cd MuJoCo_RL_UR5

- Install Required Packages:

If desired, activate a virtual environment. Then run:

pip install -r requirements.txt

This command installs all the packages needed to run the environment. The first time you execute a script that uses the *Mujoco_UR5_controller* class, additional setup may run, which can take a while.

Using the Framework

Once setup is complete, you can explore classes such as MJ_Controller and GraspEnv, which let you control the robotic arm and train your reinforcement learning agents. Let's break down what they do using an analogy.

Understanding the Classes with an Analogy

Think of your robotic arm as an artist painting a masterpiece. MJ_Controller is the artist's toolkit: the brush and color palette. It lets the artist (the robotic arm) make the precise movements needed to produce the desired picture (complete the task).

- move_ee: The brush stroke itself. It moves the end effector (the brush tip) to a specific position, the way a stroke lands on a chosen spot of the canvas, while respecting constraints such as joint limits.

- actuate_joint_group: Like the artist varying brush pressure to produce different strokes, this method lets you directly set the motor activations of a group of joints.

- grasp: The moment paint meets the canvas. The arm attempts to grasp an object, and the method checks whether the attempt succeeded.
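Behind a method like move_ee sits inverse kinematics (IK): turning a target end-effector position into joint angles that stay within the joint limits. The sketch below shows the idea on a hypothetical two-link planar arm with made-up link lengths and limits. It is not the repository's code; the UR5 has six joints, and move_ee may solve this problem differently (for example, numerically).

```python
import math
from typing import Tuple

# Hypothetical two-link planar arm (NOT the UR5): link lengths in meters.
LINK1, LINK2 = 0.4, 0.3
# Hypothetical joint limits in radians, as (min, max) per joint.
JOINT_LIMITS = [(-math.pi, math.pi), (0.0, 2.5)]


def forward_kinematics(q1: float, q2: float) -> Tuple[float, float]:
    """Return the (x, y) end-effector position for joint angles q1, q2."""
    x = LINK1 * math.cos(q1) + LINK2 * math.cos(q1 + q2)
    y = LINK1 * math.sin(q1) + LINK2 * math.sin(q1 + q2)
    return x, y


def inverse_kinematics(x: float, y: float) -> Tuple[float, float]:
    """Analytic IK for the two-link arm, using the elbow solution with q2 >= 0.

    Raises ValueError if the target is unreachable or needs a joint outside its limits.
    """
    # Law of cosines gives the cosine of the elbow angle.
    c2 = (x * x + y * y - LINK1**2 - LINK2**2) / (2 * LINK1 * LINK2)
    if not -1.0 <= c2 <= 1.0:
        raise ValueError("target is outside the arm's workspace")
    q2 = math.acos(c2)  # elbow angle in [0, pi]
    # Shoulder angle: bearing to the target minus the offset introduced by the elbow.
    q1 = math.atan2(y, x) - math.atan2(LINK2 * math.sin(q2), LINK1 + LINK2 * math.cos(q2))
    q1 = (q1 + math.pi) % (2 * math.pi) - math.pi  # wrap into [-pi, pi)
    # Reject solutions that would push a joint past its limits.
    for q, (lo, hi) in zip((q1, q2), JOINT_LIMITS):
        if not lo <= q <= hi:
            raise ValueError("solution violates a joint limit")
    return q1, q2


if __name__ == "__main__":
    q1, q2 = inverse_kinematics(0.5, 0.2)
    x, y = forward_kinematics(q1, q2)
    print(f"joints: ({q1:.3f}, {q2:.3f}) rad -> end effector at ({x:.3f}, {y:.3f})")
```

Raising an error for unreachable targets, instead of silently clipping joint angles, makes failed moves explicit; a controller could instead clip the angles and accept a nearby position.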

GraspEnv, on the other hand, is like the gallery where the artist's work is judged. This is where the agent learns to pick and place objects, receiving feedback on each attempt as a binary reward: 0 for failure and +1 for success.
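To make the binary-reward loop concrete, here is a toy stand-in, not the repository's class. In this hypothetical `ToyGraspEnv`, an observation is a small grid where 1.0 marks the object, an action is a flat pixel index where the gripper tries to grasp, and the reward is 1 on a hit and 0 otherwise. The `reset`/`step` names follow the common Gym convention; whether GraspEnv uses exactly this interface or action space is an assumption to verify against the repository.

```python
import random
from typing import Callable, List, Set, Tuple

Grid = List[List[float]]


class ToyGraspEnv:
    """Hypothetical stand-in for a grasping environment with a binary reward.

    Observation: a size x size grid (0.0 = empty table, 1.0 = object surface).
    Action: a flat pixel index at which the gripper attempts a grasp.
    """

    def __init__(self, size: int = 8, seed: int = 0) -> None:
        self.size = size
        self.rng = random.Random(seed)
        self.object_cells: Set[Tuple[int, int]] = set()

    def reset(self) -> Grid:
        # Place a 2x2 object at a random location that fits on the grid.
        r = self.rng.randrange(self.size - 1)
        c = self.rng.randrange(self.size - 1)
        self.object_cells = {(r + dr, c + dc) for dr in (0, 1) for dc in (0, 1)}
        return self._observe()

    def _observe(self) -> Grid:
        return [[1.0 if (r, c) in self.object_cells else 0.0
                 for c in range(self.size)] for r in range(self.size)]

    def step(self, action: int) -> Tuple[Grid, int, bool]:
        # Convert the flat pixel index into (row, column) coordinates.
        row, col = divmod(action, self.size)
        # Binary reward: +1 if the grasp lands on the object, 0 otherwise.
        reward = 1 if (row, col) in self.object_cells else 0
        # Each episode is a single grasp attempt in this toy version.
        return self._observe(), reward, True


def evaluate(env: ToyGraspEnv, policy: Callable[[Grid], int], episodes: int = 1000) -> float:
    """Return the fraction of episodes in which the policy earned reward 1."""
    successes = 0
    for _ in range(episodes):
        obs = env.reset()
        _, reward, _ = env.step(policy(obs))
        successes += reward
    return successes / episodes


def greedy_policy(obs: Grid) -> int:
    # Uses the observation: grasp at the first pixel that shows the object.
    flat = [v for row in obs for v in row]
    return flat.index(max(flat))


if __name__ == "__main__":
    env = ToyGraspEnv(size=8, seed=42)
    policy_rng = random.Random(0)

    def random_policy(obs: Grid) -> int:
        # Ignores the observation entirely.
        return policy_rng.randrange(len(obs) * len(obs[0]))

    print(f"random policy success rate: {evaluate(env, random_policy):.3f}")
    print(f"greedy policy success rate: {evaluate(env, greedy_policy):.3f}")
```

The greedy policy here is hand-written to show what "using the image" buys you; a reinforcement learning agent has to discover a comparable mapping from observations to grasp locations using only the 0/+1 reward signal.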

Troubleshooting

If you encounter issues during the setup or usage, consider the following troubleshooting steps:

- Ensure that all required packages are installed correctly. Re-run the installation command if you suspect any are missing.

- If your environment isn't rendering images, make sure the required graphics libraries (such as OpenGL, which MuJoCo uses for rendering) are properly installed.

- Restart the MuJoCo simulation if it becomes unresponsive or behaves unexpectedly.

- Inspect the provided example files, such as example_agent.py and Grasping_Agent.py, for implementation guidance.
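To check quickly whether packages are missing, a small script like the one below can help. It is a minimal sketch: the module names in `required` (`numpy`, `mujoco_py`, `gym`) are assumptions, so compare them with the repository's requirements.txt, and keep in mind that an import name can differ from its pip package name.

```python
import importlib.util
from typing import Iterable, List


def missing_modules(names: Iterable[str]) -> List[str]:
    """Return the top-level module names that cannot be found in this environment."""
    # find_spec locates a module without importing it; it returns None if absent.
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Assumed module names; adjust them to match requirements.txt.
    required = ["numpy", "mujoco_py", "gym"]
    missing = missing_modules(required)
    if missing:
        print("Missing modules:", ", ".join(missing))
        print("Try re-running: pip install -r requirements.txt")
    else:
        print("All listed modules were found.")
```

Because `find_spec` does not import anything, this only confirms that a module is present; a package that compiles on first import can still fail later, so running one of the example scripts remains the real end-to-end test.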


Conclusion

At fxis.ai, we believe that advancements like vision-based robotic manipulation are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.

Explore More!

Feel free to dive into the code provided in the repository and experiment with your own adaptations. Happy coding!