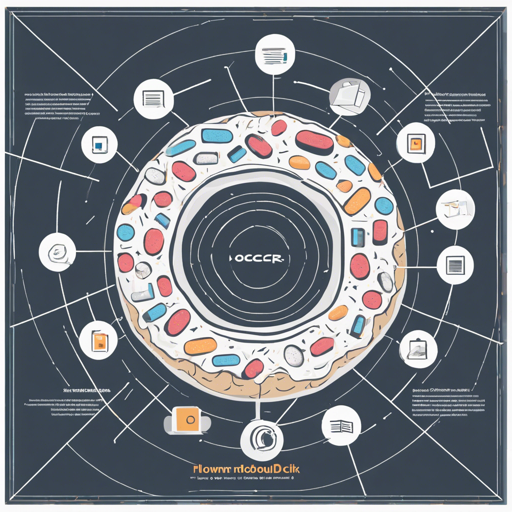

Welcome to the fascinating world of document analysis powered by AI! Today, we'll explore Donut (Document Understanding Transformer), a transformer model that reads a document image and generates text directly from it, without relying on a separate OCR (Optical Character Recognition) step. Let's dive right in!

Understanding the Donut Model

The Donut model is essentially a pair of partners: a vision encoder known as the Swin Transformer and a text decoder called BART. Think of them as a team, where one (the encoder) looks intently at an image, and the other (the decoder) expresses what it sees in words. The process works as follows:

- The vision encoder takes in an image and transforms it into a series of embeddings—a kind of compressed information that captures the essence of the image.

- These embeddings (a tensor of shape (batch_size, seq_len, hidden_size)) are passed on to the text decoder.

- The decoder then generates text in an autoregressive manner, meaning it predicts the next token based on the tokens it has already generated and the embeddings from the encoder.

This interaction enables the Donut model to transform images into meaningful text without needing traditional OCR techniques!
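The three steps above can be sketched as a toy greedy decoding loop. This is only an illustration of autoregressive generation, not Donut's actual implementation: `greedy_decode`, `toy_scores`, the five-token vocabulary, and the start/end ids are all hypothetical stand-ins, and the "encoder states" are plain integers rather than real embeddings.

```python
from typing import Callable, List, Sequence

# Hypothetical toy vocabulary: ids 0..4, with 0 = start token and 1 = end token.
VOCAB_SIZE = 5
BOS, EOS = 0, 1


def toy_scores(encoder_states: Sequence[int], tokens: Sequence[int]) -> List[float]:
    """Stand-in for the decoder: score every vocabulary id for the next position.

    A real decoder attends over the encoder embeddings; here the "embeddings"
    are simply the ids we want emitted, so the conditioning stays visible.
    """
    step = len(tokens) - 1  # number of tokens generated so far (excluding BOS)
    target = encoder_states[step] if step < len(encoder_states) else EOS
    return [1.0 if token_id == target else 0.0 for token_id in range(VOCAB_SIZE)]


def greedy_decode(
    encoder_states: Sequence[int],
    next_token_scores: Callable[[Sequence[int], Sequence[int]], List[float]],
    max_new_tokens: int = 16,
) -> List[int]:
    """Repeatedly pick the highest-scoring next token until EOS or the length cap."""
    tokens = [BOS]
    for _ in range(max_new_tokens):
        # Each step sees both the encoder output and everything generated so far.
        scores = next_token_scores(encoder_states, tokens)
        next_id = max(range(len(scores)), key=scores.__getitem__)
        tokens.append(next_id)
        if next_id == EOS:
            break
    return tokens


print(greedy_decode([3, 4, 2], toy_scores))  # [0, 3, 4, 2, 1]
```

The point to notice is that the scoring function receives both the encoder states and the tokens produced so far at every step, which is what "autoregressive" means in the description above.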

Getting Started with Donut

To get started using the Donut model, follow these steps:

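- Installation:

  First, make sure you have Transformers installed in your environment. The examples below also rely on PyTorch (for return_tensors="pt") and Pillow (for loading images). You can install all three via pip:

      pip install transformers torch pillow

- Loading the Model:

  Once installed, you can load the Donut model from the Hugging Face Hub. In Transformers, Donut checkpoints are loaded with VisionEncoderDecoderModel and paired with a DonutProcessor:

      from transformers import DonutProcessor, VisionEncoderDecoderModel

      # A Donut checkpoint fine-tuned on CORD-v2 receipts; swap in another Donut checkpoint as needed
      checkpoint = "naver-clova-ix/donut-base-finetuned-cord-v2"
      processor = DonutProcessor.from_pretrained(checkpoint)
      model = VisionEncoderDecoderModel.from_pretrained(checkpoint)

- Using the Model:

  Now you are ready to process your document images!

      from PIL import Image

      # Load your image and make sure it has three RGB channels
      image = Image.open("path_to_your_image.jpg").convert("RGB")

      # Preprocess the image
      pixel_values = processor(image, return_tensors="pt").pixel_values

      # Donut is steered by a task prompt; "<s_cord-v2>" matches the CORD-v2 checkpoint above
      task_prompt = "<s_cord-v2>"
      decoder_input_ids = processor.tokenizer(
          task_prompt, add_special_tokens=False, return_tensors="pt"
      ).input_ids

      # Generate text from the image
      generated_ids = model.generate(
          pixel_values,
          decoder_input_ids=decoder_input_ids,
          max_length=model.decoder.config.max_position_embeddings,
      )
      generated_text = processor.decode(generated_ids[0], skip_special_tokens=True)
      print(generated_text)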
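Fine-tuned Donut checkpoints are trained to emit fields wrapped in tags such as `<s_total>` and `</s_total>`, and `DonutProcessor` provides a `token2json` method for turning such a sequence into a dictionary. Whether the tags survive decoding depends on the options you pass when decoding, so inspect your raw output first. To make the idea concrete, here is a simplified, standard-library-only sketch of that kind of conversion. It is not the library's implementation: `parse_tagged` is a hypothetical helper, the sample string is made up for illustration, and lists or repeated keys are not handled.

```python
import re

# Matches <s_key>body</s_key>; the backreference forces the closing tag to use the same key.
TAG = re.compile(r"<s_(?P<key>[^>]+)>(?P<body>.*?)</s_(?P=key)>", re.DOTALL)


def parse_tagged(sequence: str) -> dict:
    """Convert a tagged string into a nested dict (simplified, illustrative only)."""
    fields = {}
    for match in TAG.finditer(sequence):
        body = match.group("body").strip()
        # A body that itself contains tags is parsed recursively into a sub-dict.
        fields[match.group("key")] = parse_tagged(body) if TAG.search(body) else body
    return fields


# Hypothetical sample output, not produced by a real model run.
sample = "<s_menu><s_nm>Latte</s_nm><s_price>4.50</s_price></s_menu><s_total>4.50</s_total>"
print(parse_tagged(sample))
# {'menu': {'nm': 'Latte', 'price': '4.50'}, 'total': '4.50'}
```

In real code, prefer the processor's own `token2json` over a hand-written parser like this one.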

Troubleshooting

If you encounter issues while using the Donut model, here are some common troubleshooting tips:

- Ensure you have the latest version of the Transformers library installed.

- If the model fails to recognize text in your document, check the clarity and quality of the input image.

- Read error messages carefully; they usually name the missing dependency or the incorrect call.

- For additional help or community support, consult the Hugging Face forums or the Donut page in the Transformers documentation.

- For more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.
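For the tips about library versions and missing dependencies, one quick check is to query package metadata from Python. The following is a minimal sketch using only the standard library; `installed_versions` is a hypothetical helper, and the package list is an assumption about what the examples in this post need.

```python
from importlib import metadata
from typing import Dict, Iterable, Optional


def installed_versions(packages: Iterable[str]) -> Dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None if it is missing."""
    versions: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


# Assumed dependency list for the snippets in this post; adjust to your setup.
for name, version in installed_versions(["transformers", "torch", "Pillow"]).items():
    print(f"{name}: {version or 'NOT INSTALLED'}")
```

Any package reported as missing can then be installed or upgraded with pip before retrying.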

Conclusion

The Donut model makes document understanding much more approachable! By pairing a vision encoder with a text decoder, it extracts textual information directly from document images, without a traditional OCR pipeline.

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.