Functionary is a language model from MeetKai designed to interpret function (tool and plugin) definitions and decide when and how to call them. That makes it a practical building block for AI applications that need to use external tools. In this blog post, we'll walk through how Functionary works and how to run it.

Understanding Functionary: A Brief Analogy

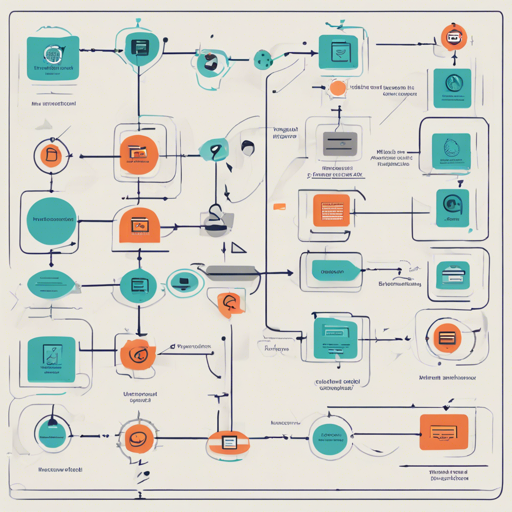

Imagine you are the manager of a highly organized restaurant kitchen where every chef has a specific task. The Functionary model acts like a well-coordinated head chef. It determines which chef (function) to call upon at any moment, whether to work together (in parallel) or one after the other (serially), and it understands the dish (output) that has been prepared. Just like a head chef only summons the necessary chefs when required, the Functionary model triggers functions only when they are needed, ensuring a smooth and efficient operation.

Key Features of Functionary

- Intelligent **parallel tool use** (several tool calls in a single turn)
- Analyzes function/tool outputs to provide relevant, grounded responses
- Recognizes when no function call is needed and replies with a normal chat response instead
- A strong open-source alternative to GPT-4 for function calling
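Parallel tool use means that one assistant turn can contain several tool calls. Your application executes them and sends the results back so the model can ground its answer in them. The sketch below assumes the OpenAI-style response shape: a list of tool calls, each with an `id`, a function `name`, and JSON-encoded `arguments`. The `get_current_weather` implementation, the `TOOLS` registry, and the `run_tool_calls` helper are hypothetical stand-ins, not part of Functionary.

```python
import json
from typing import Any, Callable


# Hypothetical local implementation of a tool the model may call.
def get_current_weather(location: str) -> dict[str, Any]:
    # A real implementation would query a weather service here.
    return {"location": location, "forecast": "sunny"}


# Registry mapping tool names (as declared in `tools`) to Python callables.
TOOLS: dict[str, Callable[..., Any]] = {"get_current_weather": get_current_weather}


def run_tool_calls(tool_calls: list[dict[str, Any]]) -> list[dict[str, str]]:
    """Execute every tool call from one assistant turn and build the
    `role: "tool"` messages that carry the results back to the model."""
    results = []
    for call in tool_calls:
        name = call["function"]["name"]
        # Arguments arrive as a JSON string, not as a dict.
        args = json.loads(call["function"]["arguments"] or "{}")
        func = TOOLS.get(name)
        try:
            output = func(**args) if func else {"error": f"unknown tool: {name}"}
        except TypeError as exc:  # wrong or missing arguments from the model
            output = {"error": str(exc)}
        results.append({
            "role": "tool",
            "tool_call_id": call["id"],  # ties the result to the call that requested it
            "name": name,  # assumption: the server accepts and uses this label
            "content": json.dumps(output),
        })
    return results


# Two parallel calls, shaped like `message.tool_calls` in a chat completion.
calls = [
    {"id": "call_1", "type": "function",
     "function": {"name": "get_current_weather", "arguments": '{"location": "Istanbul"}'}},
    {"id": "call_2", "type": "function",
     "function": {"name": "get_current_weather", "arguments": '{"location": "Paris"}'}},
]
for msg in run_tool_calls(calls):
    print(msg["tool_call_id"], msg["content"])
```

The resulting messages would be appended to the conversation, after the assistant message that requested them, before the next request to the model.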

Performance Metrics

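The table below reports function-calling accuracy (correct function name and arguments) on an in-house dataset. Both Functionary v2.2 models score above GPT-3.5-turbo-1106, and the medium model narrows much of the gap to GPT-4-1106-preview, which still scores highest: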

| Dataset | Model Name | Function Calling Accuracy (Name & Arguments) |
| :------------ | :------------------------------ | -------------------------------------------: |
| In-house data | MeetKai-functionary-small-v2.2 | 0.546 |
| In-house data | MeetKai-functionary-medium-v2.2 | 0.664 |
| In-house data | OpenAI-gpt-3.5-turbo-1106 | 0.531 |
| In-house data | OpenAI-gpt-4-1106-preview | 0.737 |

Creating and Using Prompts

Functionary uses a prompt template called v2PromptTemplate, which breaks each conversation turn into three parts: `from`, `recipient`, and `content`. Function definitions are converted into text resembling TypeScript type definitions and injected into the conversation as system prompts, before the conversation messages.
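Before calling the model, it helps to see what "TypeScript-like" means for a tool definition. The sketch below renders an OpenAI-style JSON Schema tool definition as a TypeScript-style type declaration. It is an approximation for illustration only: the `namespace functions` wrapper, the comment layout, and the helper names `render_function` and `render_tools` are assumptions, and the exact text Functionary's server generates may differ. It handles only flat objects with primitive or enum properties.

```python
import json
from typing import Any

# Rough JSON Schema -> TypeScript type mapping (flat schemas only).
TS_TYPES = {"string": "string", "integer": "number", "number": "number",
            "boolean": "boolean", "array": "any[]", "object": "object"}


def render_function(fn: dict[str, Any]) -> str:
    """Render one function definition as a TypeScript-style type."""
    params = fn.get("parameters") or {}
    required = set(params.get("required", []))
    lines: list[str] = []
    if fn.get("description"):
        lines.append(f"// {fn['description']}")
    lines.append(f"type {fn['name']} = (_: {{")
    for prop, spec in params.get("properties", {}).items():
        if spec.get("description"):
            lines.append(f"// {spec['description']}")
        # Parameters not listed in `required` get TypeScript's `?` suffix.
        optional = "" if prop in required else "?"
        if "enum" in spec:
            ts_type = " | ".join(json.dumps(v) for v in spec["enum"])
        else:
            ts_type = TS_TYPES.get(spec.get("type", ""), "any")
        lines.append(f"{prop}{optional}: {ts_type},")
    lines.append("}) => any;")
    return "\n".join(lines)


def render_tools(tools: list[dict[str, Any]]) -> str:
    """Wrap all function definitions in a single namespace block."""
    body = "\n\n".join(render_function(t["function"])
                       for t in tools if t.get("type") == "function")
    return f"namespace functions {{\n\n{body}\n\n}} // namespace functions"


weather_tool = {
    "type": "function",
    "function": {
        "name": "get_current_weather",
        "description": "Get the current weather",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {"type": "string",
                             "description": "The city and state, e.g. San Francisco, CA"},
            },
            "required": ["location"],
        },
    },
}
print(render_tools([weather_tool]))
```

A compact, code-like rendering of this kind is generally easier for a language model to read than raw JSON Schema, which is the motivation for this style of template.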

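To query the model, point the `openai` Python client at a running Functionary server and pass tool definitions in the standard `tools` format:

```python
from openai import OpenAI

# Point the client at the local OpenAI-compatible server.
# The client requires an api_key; this value is a placeholder for a local server.
client = OpenAI(base_url="http://localhost:8000/v1", api_key="functionary")

response = client.chat.completions.create(
    model="path/to/functionary/model",  # replace with the model the server loaded
    messages=[{"role": "user", "content": "What is the weather for Istanbul?"}],
    tools=[
        {
            "type": "function",
            "function": {
                "name": "get_current_weather",
                "description": "Get the current weather",
                "parameters": {
                    "type": "object",
                    "properties": {
                        "location": {
                            "type": "string",
                            "description": "The city and state, e.g. San Francisco, CA",
                        }
                    },
                    "required": ["location"],
                },
            },
        }
    ],
    tool_choice="auto",  # let the model decide whether to call a tool
)

# If the model chose to call a tool, the request appears in `message.tool_calls`.
print(response.choices[0].message)
```

Running the Model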

To serve the Functionary model, use the OpenAI-compatible vLLM server provided in MeetKai's functionary GitHub repository, following the installation and launch instructions in its README. Once the server is running, the client code above can reach it at the configured base URL.
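A quick smoke test after starting the server is to ask it which models it serves; the returned ID is also the value to pass as `model` in the request above. This sketch assumes the server implements the standard OpenAI `/v1/models` endpoint, as vLLM's OpenAI-compatible server does. `check_server` is a hypothetical helper, and the stub client exists only so the example runs without a server.

```python
from types import SimpleNamespace
from typing import Any


def check_server(client: Any) -> list[str]:
    """Return the model IDs served at the client's base URL, or raise.

    `client` is expected to be an `openai.OpenAI` instance: `client.models.list()`
    sends GET {base_url}/models and yields objects with an `.id` attribute.
    """
    try:
        ids = [model.id for model in client.models.list()]
    except Exception as exc:  # e.g. openai.APIConnectionError if nothing is listening
        raise RuntimeError(f"Could not reach {client.base_url}: {exc}") from exc
    if not ids:
        raise RuntimeError(f"{client.base_url} is reachable but reports no models")
    return ids


# Offline demo: a stub shaped like the real client, so no server is needed.
class _StubModels:
    def list(self) -> list[SimpleNamespace]:
        return [SimpleNamespace(id="functionary-demo")]


stub_client = SimpleNamespace(base_url="http://localhost:8000/v1/", models=_StubModels())
print(check_server(stub_client))
```

Against a real server, pass `OpenAI(base_url="http://localhost:8000/v1", api_key="functionary")` instead of the stub.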

Troubleshooting Tips

- If the model isn't responding as expected, check that each tool's `parameters` field is a valid JSON Schema object (`"type": "object"` with `properties` and, optionally, `required`).

- Verify your network connection to ensure the server is reachable.

- Double-check the `api_key` and `base_url` passed to the client. The base URL must be a complete URL that includes the `/v1` path, such as `http://localhost:8000/v1`.

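The checks on tool definitions and on the base URL can be automated before any request is sent. The sketch below runs a few structural checks on the `tools` list and on the base URL. It is not a full JSON Schema validator (a library such as `jsonschema` would be needed for that), and the helper names `tool_problems` and `base_url_problems` are hypothetical.

```python
from typing import Any
from urllib.parse import urlparse


def tool_problems(tools: list[dict[str, Any]]) -> list[str]:
    """Return human-readable problems found in an OpenAI-style `tools` list."""
    problems = []
    for i, tool in enumerate(tools):
        if tool.get("type") != "function":
            problems.append(f"tools[{i}]: 'type' must be 'function'")
            continue
        fn = tool.get("function", {})
        if not fn.get("name"):
            problems.append(f"tools[{i}]: missing function name")
        params = fn.get("parameters")
        if params is None:  # a function with no parameters is allowed
            continue
        if params.get("type") != "object":
            problems.append(f"tools[{i}]: parameters must have type 'object'")
        props = params.get("properties", {})
        for req in params.get("required", []):
            if req not in props:
                problems.append(f"tools[{i}]: required field '{req}' is not in properties")
    return problems


def base_url_problems(base_url: str) -> list[str]:
    """Flag base URLs that are unlikely to reach an OpenAI-compatible server."""
    parsed = urlparse(base_url)
    problems = []
    if parsed.scheme not in ("http", "https"):
        problems.append("base_url must start with http:// or https://")
    if not parsed.netloc:
        problems.append("base_url is missing a host (and port)")
    if not parsed.path.rstrip("/").endswith("/v1"):
        problems.append("base_url should end with /v1")
    return problems


weather_tool = {
    "type": "function",
    "function": {
        "name": "get_current_weather",
        "parameters": {
            "type": "object",
            "properties": {"location": {"type": "string"}},
            "required": ["location"],
        },
    },
}
print(tool_problems([weather_tool]))
print(base_url_problems("http:localhost:8000v1"))  # missing "//" and "/"
```

A malformed URL such as `http:localhost:8000v1` parses without a host, so both the host check and the `/v1` check flag it.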

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.