Welcome to the world of AI-powered image-to-text models! This guide walks you through using LLaVA-Llama-3-8B-v1.1, a model fine-tuned from Meta-Llama-3-8B-Instruct and CLIP-ViT-Large-patch14-336 for image understanding and conversation. Whether you’re looking to download the model, chat with it, or reproduce results, we have you covered!

Understanding the Model

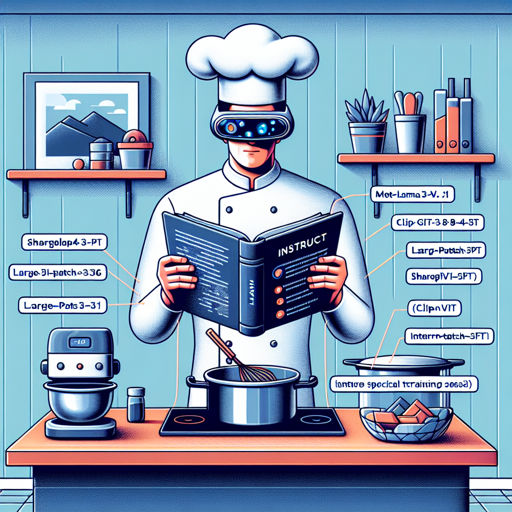

The LLaVA-Llama-3-8B-v1.1 is like a skilled chef who has perfected a recipe by combining ingredients from various culinary traditions. Here’s how it works:

- **Meta-Llama-3-8B-Instruct**: The base language model. Think of this as a rich cookbook providing foundational recipes (or instructions) for the chef (the model).

- **CLIP-ViT-Large-patch14-336**: The vision encoder. This acts as visual goggles, allowing our chef to see and interpret images.

- **ShareGPT4V-PT and InternVL-SFT**: The training datasets. These are the chef’s special training sessions, sharpening the skill of turning visual inputs into insightful outputs.

By blending these components together, the LLaVA-Llama-3-8B-v1.1 model can effectively interpret images and produce detailed textual descriptions.

Getting Started with the Model

Ready to dive in? Here’s a quick start to download and chat with the model.

1. Downloading the Models

Use the following commands in your terminal to download the necessary model files:

# mmproj

wget https://huggingface.co/xtuner/llava-llama-3-8b-v1_1-gguf/resolve/main/llava-llama-3-8b-v1_1-mmproj-f16.gguf

# fp16 llm

wget https://huggingface.co/xtuner/llava-llama-3-8b-v1_1-gguf/resolve/main/llava-llama-3-8b-v1_1-f16.gguf

# int4 llm

wget https://huggingface.co/xtuner/llava-llama-3-8b-v1_1-gguf/resolve/main/llava-llama-3-8b-v1_1-int4.gguf

# (optional) ollama fp16 model file

wget https://huggingface.co/xtuner/llava-llama-3-8b-v1_1-gguf/resolve/main/OLLAMA_MODELFILE_F16

# (optional) ollama int4 model file

wget https://huggingface.co/xtuner/llava-llama-3-8b-v1_1-gguf/resolve/main/OLLAMA_MODELFILE_INT4

2. Chatting with the Model

Once downloaded, you can interact with the model using Ollama. Here’s how to create a chat session:

# fp16

ollama create llava-llama3-f16 -f ./OLLAMA_MODELFILE_F16

ollama run llava-llama3-f16 "xx.png Describe this image"

# int4

ollama create llava-llama3-int4 -f ./OLLAMA_MODELFILE_INT4

ollama run llava-llama3-int4 "xx.png Describe this image"

Troubleshooting Common Issues

If you encounter issues during setup or execution, consider the following troubleshooting tips:

- Ensure you have a stable internet connection when downloading models.

- Check for any syntax errors in command lines you have entered.

- If the model fails to load, verify that all necessary files are downloaded correctly.

- If you require further assistance, feel free to reach out and discuss your issue with us. For more insights, updates, or to collaborate on AI development projects, stay connected with fxis.ai.
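The download-related tips above can be sketched as a small shell helper. This is a minimal sketch, not part of the model's official tooling: the function names `missing_files` and `fetch_all` are hypothetical, and grouping these three files as the int4 set is an assumption based on the download commands in this guide. The check only confirms that each file exists and is non-empty, so it cannot detect a truncated download; `wget -c` covers that case by resuming partial files and skipping ones that are already complete.

```shell
#!/bin/sh
# Sketch only: helper names are illustrative, not from the model card.

BASE_URL="https://huggingface.co/xtuner/llava-llama-3-8b-v1_1-gguf/resolve/main"

# Assumed file set for the int4 variant, taken from the download commands above.
INT4_FILES="llava-llama-3-8b-v1_1-mmproj-f16.gguf llava-llama-3-8b-v1_1-int4.gguf OLLAMA_MODELFILE_INT4"

# Print every named file that is absent or zero bytes in directory $1.
missing_files() (
  dir="$1"; shift
  for f in "$@"; do
    [ -s "$dir/$f" ] || printf '%s\n' "$f"
  done
)

# Download each named file into directory $1.
# `wget -c` continues a partial download instead of starting over.
fetch_all() (
  dir="$1"; shift
  for f in "$@"; do
    wget -c -P "$dir" "$BASE_URL/$f" || exit 1
  done
)
```

After sourcing the script, running `fetch_all . $INT4_FILES` followed by `missing_files . $INT4_FILES` should print nothing once every file is in place. The variable is left unquoted on purpose so the shell splits it into separate file names.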

Reproducing Results

To reproduce the results with this model, refer to the XTuner documentation available on GitHub. Following the training and evaluation steps described there should yield comparable performance metrics.

Conclusion

In the world of AI, advancements like the LLaVA-Llama-3-8B-v1.1 model herald a new era of image understanding. Whether you’re a researcher or a hobbyist, this tool opens new doors for exploring how text and visuals work together.

At fxis.ai, we believe that such advancements are crucial for the future of AI, as they enable more comprehensive and effective solutions. Our team is continually exploring new methodologies to push the envelope in artificial intelligence, ensuring that our clients benefit from the latest technological innovations.